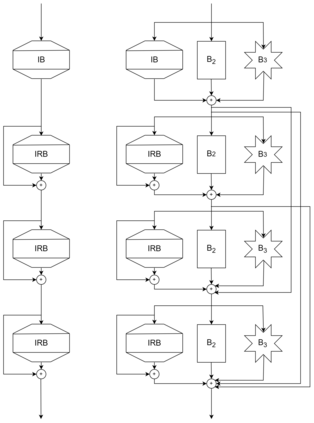

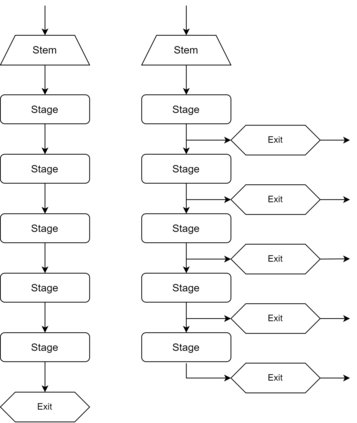

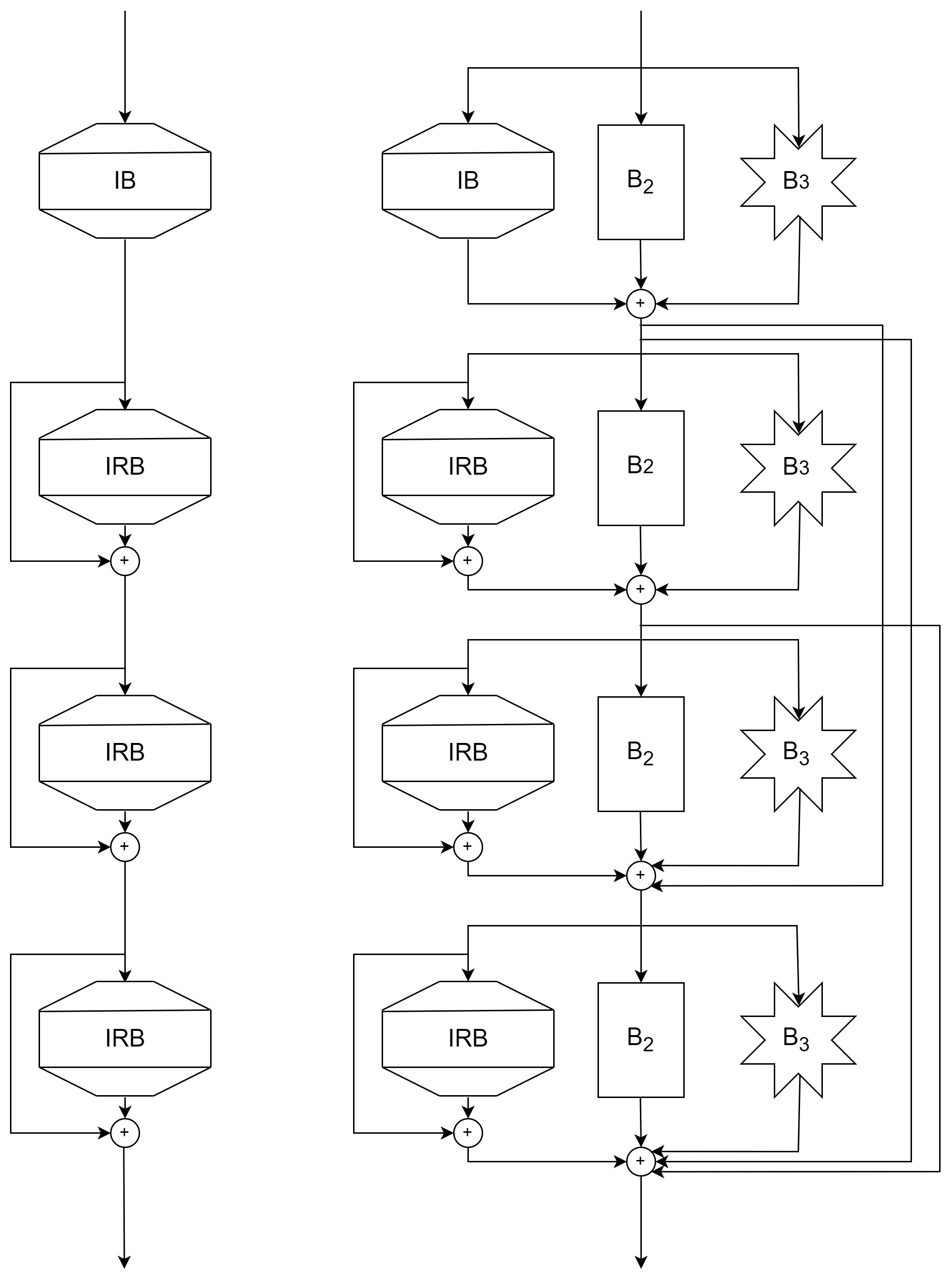

The use of Neural Architecture Search (NAS) techniques to automate the design of neural networks has become increasingly popular in recent years. The proliferation of devices with different hardware characteristics that run such networks, together with the need to reduce the power consumed by the search itself, led to Once-For-All (OFA), an eco-friendly algorithm characterised by its ability to generate easily adaptable models through a single training process. To improve on this paradigm and develop high-performance yet eco-friendly NAS techniques, this paper presents OFAv2, an extension of OFA that improves its performance while preserving the same ecological advantage. The architecture is extended with early exits, parallel blocks and dense skip connections. The training process gains two new phases, called Elastic Level and Elastic Height. A new Knowledge Distillation technique is introduced to handle multi-output networks, and a new strategy for dynamic teacher network selection is proposed. Together, these modifications allow OFAv2 to improve accuracy on the Tiny ImageNet dataset by up to 12.07% compared to the original version of OFA, while retaining the algorithm's flexibility and advantages.
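To make the idea of distilling knowledge into a multi-output (early-exit) network more concrete, the following is a minimal sketch and not the technique proposed in the paper, whose details are not given in this abstract. It assumes a teacher with a single output and a student with several exits, and applies the standard temperature-scaled distillation loss to each exit before combining the results with per-exit weights. The function names, the uniform default weights and the temperature value are all hypothetical choices made for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable, temperature-scaled softmax over a list of floats."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def multi_exit_kd_loss(teacher_logits, student_exit_logits,
                       exit_weights=None, temperature=4.0):
    """Weighted sum of distillation losses, one per student exit.

    Assumption (not from the paper): every exit is matched against the
    same single-output teacher, and exits are weighted uniformly unless
    explicit weights are supplied.
    """
    n_exits = len(student_exit_logits)
    if exit_weights is None:
        exit_weights = [1.0 / n_exits] * n_exits
    if len(exit_weights) != n_exits:
        raise ValueError("one weight is required per student exit")

    teacher_probs = softmax(teacher_logits, temperature)
    loss = 0.0
    for weight, logits in zip(exit_weights, student_exit_logits):
        student_probs = softmax(logits, temperature)
        # The T^2 factor is the usual rescaling of the soft-target term.
        loss += weight * temperature ** 2 * kl_divergence(teacher_probs, student_probs)
    return loss


# Hypothetical example: a 3-class teacher and a student with two exits.
teacher = [2.0, 0.5, -1.0]
exits = [[0.3, 0.2, 0.1], [1.8, 0.6, -0.9]]
loss = multi_exit_kd_loss(teacher, exits)
```

In this sketch an exit whose logits equal the teacher's contributes zero loss, while exits that disagree with the teacher contribute a positive term. Non-uniform weights could be used to emphasise the final exit over the early ones.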
