disentangled-representation-papers

https://github.com/sootlasten/disentangled-representation-papers

This is a curated list of papers on disentangled (and an occasional "conventional") representation learning. Within each year, the papers are ordered from newest to oldest. I've scored the importance/quality of each paper (in my own personal opinion) on a scale of 1 to 3, as indicated by the number of stars in front of each entry in the list. If stars are replaced by a question mark, then it represents a paper I haven't fully read yet, in which case I'm unable to judge its quality.

2018

? Learning Deep Representations by Mutual Information Estimation and Maximization (Aug, Hjelm et. al.) [paper]

? Life-Long Disentangled Representation Learning with Cross-Domain Latent Homologies (Aug, Achille et. al.) [paper]

? Insights on Representational Similarity in Neural Networks with Canonical Correlation (Jun, Morcos et. al.) [paper]

** Sequential Attend, Infer, Repeat: Generative Modelling of Moving Objects (Jun, Kosiorek et. al.) [paper]

*** Neural Scene Representation and Rendering (Jun, Eslami et. al.) [paper]

? Image-to-image translation for cross-domain disentanglement (May, Gonzalez-Garcia et. al.) [paper]

* Learning Disentangled Joint Continuous and Discrete Representations (May, Dupont) [paper] [code]

? DGPose: Disentangled Semi-supervised Deep Generative Models for Human Body Analysis (Apr, Bem et. al.) [paper]

? Structured Disentangled Representations (Apr, Esmaeili et. al.) [paper]

** Understanding disentangling in β-VAE (Apr, Burgess et. al.) [paper]

? On the importance of single directions for generalization (Mar, Morcos et. al.) [paper]

** Unsupervised Representation Learning by Predicting Image Rotations (Mar, Gidaris et. al.) [paper]

? Disentangled Sequential Autoencoder (Mar, Li & Mandt) [paper]

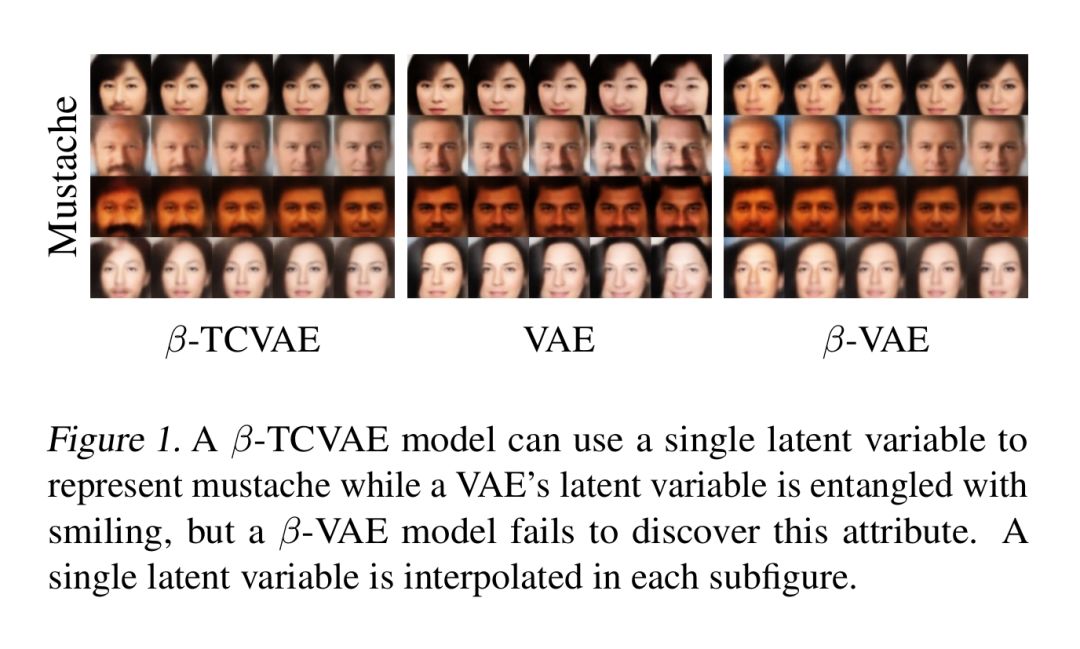

*** Isolating Sources of Disentanglement in Variational Autoencoders (Mar, Chen et. al.) [paper] [code]

** Disentangling by Factorising (Feb, Kim & Mnih) [paper]

** Disentangling the Independently Controllable Factors of Variation by Interacting with the World (Feb, Bengio's group) [paper]

? On the Latent Space of Wasserstein Auto-Encoders (Feb, Rubenstein et. al.) [paper]

? Auto-Encoding Total Correlation Explanation (Feb, Gao et. al.) [paper]

? Fixing a Broken ELBO (Feb, Alemi et. al.) [paper]

* Learning Disentangled Representations with Wasserstein Auto-Encoders (Feb, Rubenstein et. al.) [paper]

? Rethinking Style and Content Disentanglement in Variational Autoencoders (Feb, Shu et. al.) [paper]

? A Framework for the Quantitative Evaluation of Disentangled Representations (Feb, Eastwood & Williams) [paper]

2017

? The β-VAE's Implicit Prior (Dec, Hoffman et. al.) [paper]

** The Multi-Entity Variational Autoencoder (Dec, Nash et. al.) [paper]

? Learning Independent Causal Mechanisms (Dec, Parascandolo et. al.) [paper]

? Variational Inference of Disentangled Latent Concepts from Unlabeled Observations (Nov, Kumar et. al.) [paper]

* Neural Discrete Representation Learning (Nov, Oord et. al.) [paper]

? Disentangled Representations via Synergy Minimization (Oct, Steeg et. al.) [paper]

? Unsupervised Learning of Disentangled and Interpretable Representations from Sequential Data (Sep, Hsu et. al.) [paper] [code]

* Experiments on the Consciousness Prior (Sep, Bengio & Fedus) [paper]

** The Consciousness Prior (Sep, Bengio) [paper]

? Disentangling Motion, Foreground and Background Features in Videos (Jul, Lin. et. al.) [paper]

* SCAN: Learning Hierarchical Compositional Visual Concepts (Jul, Higgins. et. al.) [paper]

*** DARLA: Improving Zero-Shot Transfer in Reinforcement Learning (Jul, Higgins et. al.) [paper]

** Unsupervised Learning via Total Correlation Explanation (Jun, Ver Steeg) [paper] [code]

? PixelGAN Autoencoders (Jun, Makhzani & Frey) [paper]

? Emergence of Invariance and Disentanglement in Deep Representations (Jun, Achille & Soatto) [paper]

** A Simple Neural Network Module for Relational Reasoning (Jun, Santoro et. al.) [paper]

? Learning Disentangled Representations with Semi-Supervised Deep Generative Models (Jun, Siddharth, et al.) [paper]

? Unsupervised Learning of Disentangled Representations from Video (May, Denton & Birodkar) [paper]

2016

** Deep Variational Information Bottleneck (Dec, Alemi et. al.) [paper]

*** β-VAE: Learning Basic Visual Concepts with a Constrained Variational Framework (Nov, Higgins et. al.) [paper] [code]

? Disentangling factors of variation in deep representations using adversarial training (Nov, Mathieu et. al.) [paper]

** Information Dropout: Learning Optimal Representations Through Noisy Computation (Nov, Achille & Soatto) [paper]

** InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets (Jun, Chen et. al.) [paper]

*** Attend, Infer, Repeat: Fast Scene Understanding with Generative Models (Mar, Eslami et. al.) [paper]

*** Building Machines That Learn and Think Like People (Apr, Lake et. al.) [paper]

* Understanding Visual Concepts with Continuation Learning (Feb, Whitney et. al.) [paper]

? Disentangled Representations in Neural Models (Feb, Whitney) [paper]

Older work

** Deep Convolutional Inverse Graphics Network (2015, Kulkarni et. al.) [paper]

? Learning to Disentangle Factors of Variation with Manifold Interaction (2014, Reed et. al.) [paper]

*** Representation Learning: A Review and New Perspectives (2013, Bengio et. al.) [paper]

? Disentangling Factors of Variation via Generative Entangling (2012, Desjardinis et. al.) [paper]

*** Transforming Auto-encoders (2011, Hinton et. al.) [paper]

** Learning Factorial Codes By Predictability Minimization (1992, Schmidhuber) [paper]

*** Self-Organization in a Perceptual Network (1988, Linsker) [paper]

Talks

Building Machines that Learn & Think Like People (2018, Tenenbaum) [youtube]

From Deep Learning of Disentangled Representations to Higher-level Cognition (2018, Bengio) [youtube]

What is wrong with convolutional neural nets? (2017, Hinton) [youtube]