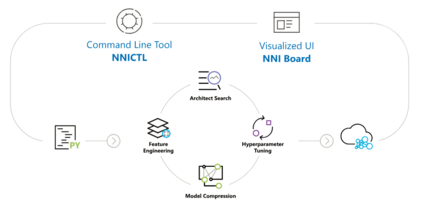

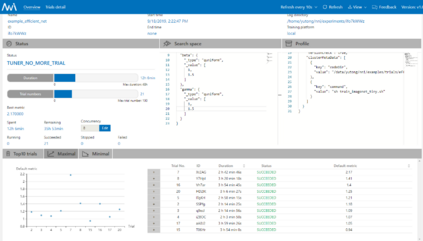

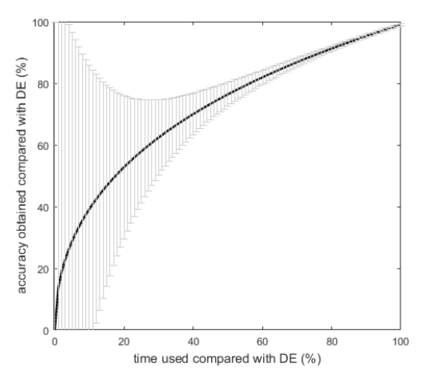

Since their development, deep neural networks have made substantial contributions to everyday life, and machine learning can now provide more rational advice than humans in almost every aspect of daily life. Despite this achievement, designing and training neural networks remain challenging and unpredictable procedures. To lower the technical barrier for non-expert users, automated hyper-parameter optimization (HPO) has become a popular topic in both academia and industry. This paper reviews the most essential topics in HPO. The first section introduces the key hyper-parameters related to model training and structure, and discusses their importance and methods for defining their value ranges. The paper then focuses on the major optimization algorithms and their applicability, covering their efficiency and accuracy, especially for deep learning networks. Next, it reviews the major services and toolkits for HPO, comparing their support for state-of-the-art search algorithms, their compatibility with major deep learning frameworks, and their extensibility with user-designed modules. The paper concludes with the problems that arise when HPO is applied to deep learning, a comparison of optimization algorithms, and prominent approaches for evaluating models with limited computational resources.
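As a concrete illustration of what HPO automates, the following is a minimal random-search sketch in Python. The search space (learning rate, batch size, dropout), its value ranges, and the `toy_validation_loss` objective are all hypothetical assumptions standing in for real network training. Random search is used only as a simple baseline; it is not necessarily one of the algorithms the paper compares.

```python
import math
import random
from typing import Callable, Dict, Optional, Tuple

# A configuration maps hyper-parameter names to values.
Config = Dict[str, float]


def sample_config(rng: random.Random) -> Config:
    """Draw one configuration from an assumed (hypothetical) search space."""
    return {
        # Learning rate sampled log-uniformly in [1e-5, 1e-1]
        "learning_rate": 10 ** rng.uniform(-5, -1),
        # Batch size chosen from a small set of powers of two
        "batch_size": float(rng.choice([16, 32, 64, 128])),
        # Dropout rate sampled uniformly in [0.0, 0.5]
        "dropout": rng.uniform(0.0, 0.5),
    }


def toy_validation_loss(cfg: Config) -> float:
    """Hypothetical stand-in for "train the network, return validation loss".

    Minimised at learning_rate = 1e-3 and dropout = 0.2; batch size is ignored.
    """
    return (math.log10(cfg["learning_rate"]) + 3) ** 2 + (cfg["dropout"] - 0.2) ** 2


def random_search(
    objective: Callable[[Config], float], n_trials: int, seed: int = 0
) -> Tuple[Config, float]:
    """Evaluate n_trials random configurations and keep the one with the lowest loss."""
    if n_trials < 1:
        raise ValueError("n_trials must be at least 1")
    rng = random.Random(seed)
    best_cfg: Optional[Config] = None
    best_loss = math.inf
    for _ in range(n_trials):
        cfg = sample_config(rng)
        loss = objective(cfg)
        if loss < best_loss:
            best_cfg, best_loss = cfg, loss
    assert best_cfg is not None  # guaranteed because n_trials >= 1
    return best_cfg, best_loss


if __name__ == "__main__":
    cfg, loss = random_search(toy_validation_loss, n_trials=200)
    print(f"best config: {cfg}, validation loss: {loss:.4f}")
```

In practice, `toy_validation_loss` would be replaced by a function that trains the network with `cfg` and returns its validation loss. That step is exactly where the computational cost lies, so budget-aware evaluation strategies matter for deep learning. The learning rate is sampled on a logarithmic scale because such parameters are commonly searched across several orders of magnitude.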

翻译:自深层神经网络开发以来,它们为日常生活作出了巨大贡献;机器学习比人类几乎在日常生活的每一个方面都能够提供更合理的建议;然而,尽管取得了这一成就,神经网络的设计和培训仍然具有挑战性和不可预测的程序;为降低普通用户的技术门槛,自动化超参数优化已成为学术和工业领域一个受欢迎的主题;本文件审查了关于人类生命动力的最基本的主题;第一部分介绍了与模型培训和结构有关的关键超参数,并讨论了它们的重要性和界定价值范围的方法;随后,研究侧重于主要优化算法及其适用性,包括它们的效率和准确性,特别是深层学习网络;本研究报告接下来将审查人类生命动力网络的主要服务和工具包,比较它们对最新搜索算法的支持,与主要深层学习框架的可行性,以及用户设计的新模块的可推广性;本文件最后,当人类生命动力应用于深层学习时,存在着一些问题,对优化算法与有限的计算资源进行显著的模型评价方法进行比较。