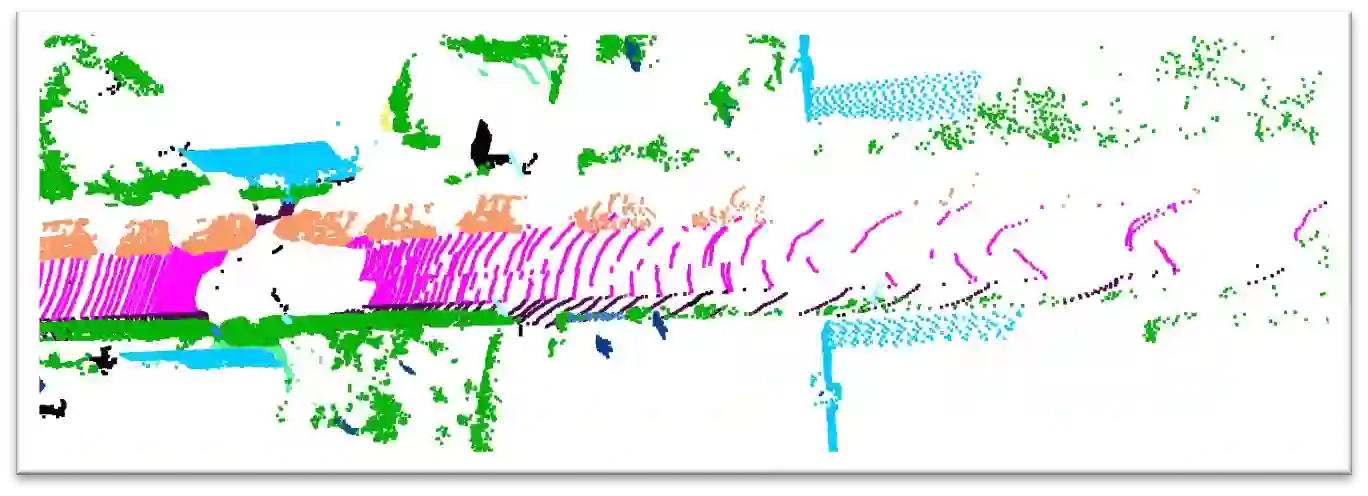

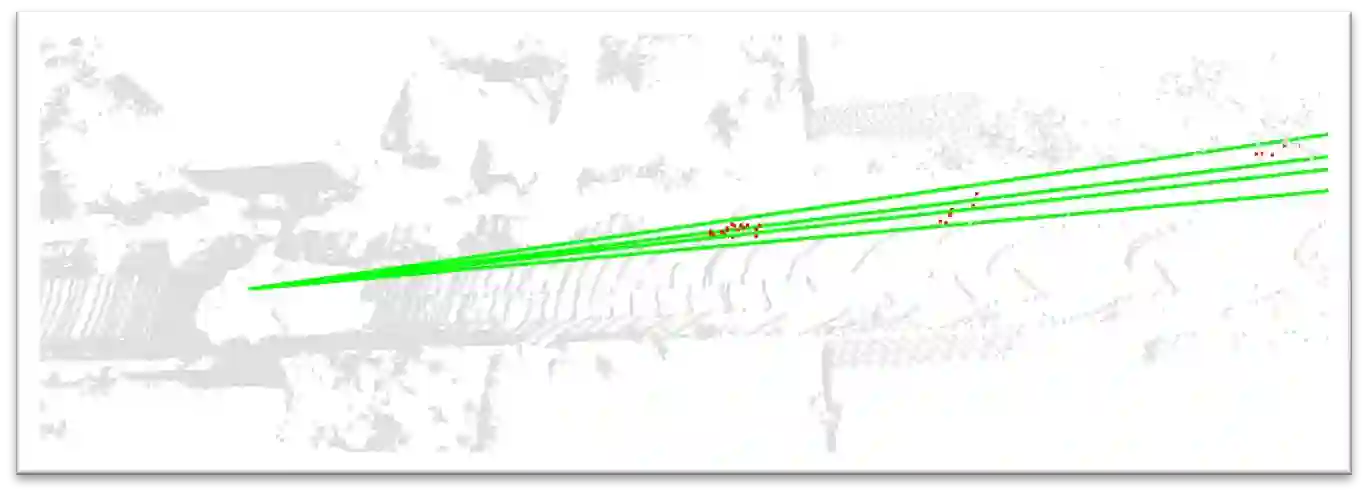

LiDAR-based 3D point cloud recognition benefits a wide range of applications. Because they do not specifically account for the LiDAR point distribution, most current methods suffer from information disconnection and a limited receptive field, especially for sparse distant points. In this work, we study the varying-sparsity distribution of LiDAR points and present SphereFormer, which directly aggregates information from dense close points to sparse distant ones. We design radial window self-attention, which partitions the space into multiple non-overlapping narrow and long windows. It overcomes the disconnection issue and enlarges the receptive field smoothly and dramatically, which significantly boosts performance on sparse distant points. Moreover, to fit the narrow and long windows, we propose exponential splitting to yield fine-grained position encoding, and dynamic feature selection to increase model representation ability. Notably, our method ranks 1st on both the nuScenes and SemanticKITTI semantic segmentation benchmarks with 81.9% and 74.8% mIoU, respectively. We also achieve 3rd place on the nuScenes object detection benchmark with 72.8% NDS and 68.5% mAP. Code is available at https://github.com/dvlab-research/SphereFormer.git.

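
To make the two geometric ideas in the abstract more concrete, here is a minimal sketch of how radial windows and exponential splitting might be computed. It is not the authors' implementation: the function names, the 2° window size, the 0.5 m to 80 m radius range, and the choice of 8 geometrically spaced bins are all assumptions made for illustration, and the abstract does not give the exact splitting formula. The sketch uses only the Python standard library to stay self-contained; real point cloud code would normally vectorize this.

```python
import math
from bisect import bisect_right


def radial_window_id(x, y, z, d_theta_deg=2.0, d_phi_deg=2.0):
    """Assign a point to a window that depends only on its direction.

    The window is indexed by azimuth (theta) and inclination (phi) and not
    by radius, so each window is a narrow, long wedge reaching from the
    sensor outward. Window sizes are illustrative assumptions.
    """
    r = math.sqrt(x * x + y * y + z * z)
    theta = math.degrees(math.atan2(y, x)) + 180.0            # [0, 360]
    cos_phi = max(-1.0, min(1.0, z / max(r, 1e-9)))           # guard r == 0
    phi = math.degrees(math.acos(cos_phi))                    # [0, 180]
    n_theta = math.ceil(360.0 / d_theta_deg)
    t_idx = int(theta // d_theta_deg) % n_theta               # wrap 360 to 0
    p_idx = int(phi // d_phi_deg)
    return p_idx * n_theta + t_idx


def exponential_edges(r_min=0.5, r_max=80.0, n_bins=8):
    """Radius bin edges whose widths grow geometrically with distance.

    Assumed reading of "exponential splitting": fine bins near the sensor,
    coarse bins far away.
    """
    ratio = r_max / r_min
    return [r_min * ratio ** (k / n_bins) for k in range(n_bins + 1)]


def exponential_radius_bin(r, edges):
    """Index of the radius bin containing r, clamped to the valid range."""
    idx = bisect_right(edges, r) - 1
    return max(0, min(idx, len(edges) - 2))


# Group a few hypothetical points by window: the first two lie on the same
# ray (one close, one far), the third points in a different direction.
points = [(1.0, 0.3, 0.1), (40.0, 12.0, 4.0), (-1.0, 0.3, 0.1)]
windows = {}
for p in points:
    windows.setdefault(radial_window_id(*p), []).append(p)

edges = exponential_edges()
radius_bins = [exponential_radius_bin(math.dist(p, (0.0, 0.0, 0.0)), edges)
               for p in points]
```

In this sketch the close point and the far point on the same ray land in the same window, which is what would let attention inside a window pass information from dense nearby points to sparse distant ones. The radius bin index is the kind of quantity that could be used to look up a position embedding along the long axis of the window; the embedding itself and dynamic feature selection are not shown.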