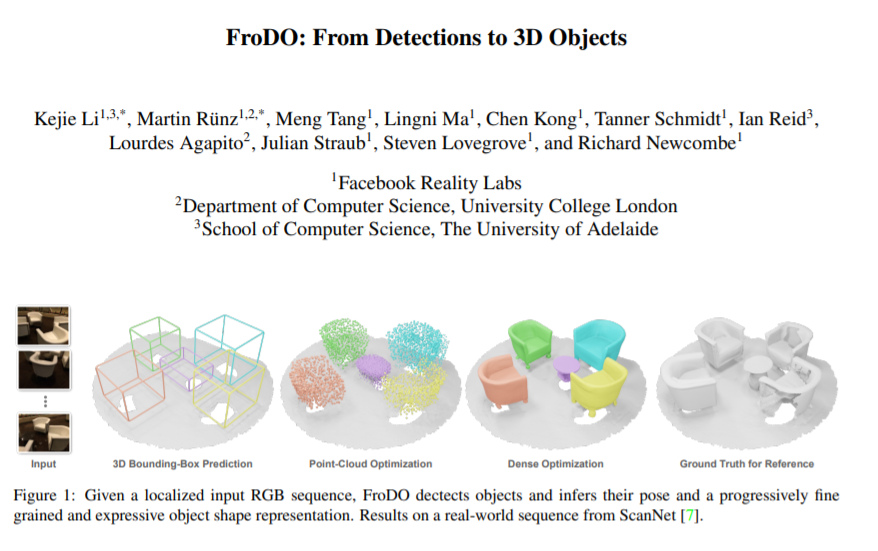

面向对象的映射对于场景理解非常重要,因为它们共同捕获几何和语义,允许对对象进行单独的实例化和有意义的推理。我们介绍了FroDO,这是一种从RGB视频中精确重建物体实例的方法,它以一种由粗到细的方式推断出物体的位置、姿态和形状。FroDO的关键是将对象形状嵌入到一个新的学习空间中,允许在稀疏点云和稠密DeepSDF解码之间进行无缝切换。给定一个局部的RGB帧的输入序列,FroDO首先聚合2D检测,为每个对象实例化一个分类感知的3D包围框。在利用稀疏和稠密形状表示进一步优化形状和姿态之前,使用编码器网络对形状代码进行回归。优化使用多视图几何,光度和剪影损失。我们对真实世界的数据集进行评估,包括Pix3D、Redwood-OS和ScanNet,用于单视图、多视图和多对象重建。

成为VIP会员查看完整内容

相关内容

CVPR is the premier annual computer vision event comprising the main conference and several co-located workshops and short courses. With its high quality and low cost, it provides an exceptional value for students, academics and industry researchers.

CVPR 2020 will take place at The Washington State Convention Center in Seattle, WA, from June 16 to June 20, 2020.

http://cvpr2020.thecvf.com/

专知会员服务

39+阅读 · 2020年3月19日

专知会员服务

136+阅读 · 2020年3月8日

专知会员服务

27+阅读 · 2020年1月17日

Arxiv

8+阅读 · 2018年2月21日

Arxiv

6+阅读 · 2018年1月28日