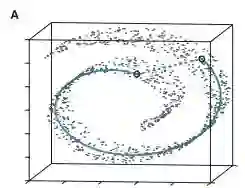

It is well established that training deep neural networks yields useful representations that capture essential features of the inputs. However, these representations remain poorly understood, both in theory and in practice. In the context of supervised learning, an important question is whether these representations capture features that are informative for classification while filtering out non-informative, noisy ones. We explore a formalization of this question by considering a generative process in which each class is associated with a high-dimensional manifold, and different classes define different manifolds. Under this model, each input is produced from two latent vectors: (i) a "manifold identifier" $\gamma$; and (ii) a "transformation parameter" $\theta$ that shifts examples along the surface of a manifold. For example, $\gamma$ might represent a canonical image of a dog, and $\theta$ might stand for variations in pose, background, or lighting. We provide theoretical and empirical evidence that neural representations can be viewed as locality-sensitive hashing (LSH)-like functions that map each input to an embedding that depends only on the informative $\gamma$ and is invariant to $\theta$, effectively recovering the manifold identifier $\gamma$. An important consequence of this behavior is one-shot learning of unseen classes.
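
The abstract does not pin down a concrete model, but the intuition of an LSH-like map that keeps $\gamma$ and discards $\theta$ can be illustrated with a toy sketch. The Python code below is a hypothetical illustration, not the authors' model, network, or experiments. It assumes that each identifier $\gamma$ is a random Gaussian vector, that $\theta$ acts as a small additive Gaussian shift (a crude stand-in for moving along a manifold), and it substitutes a fixed random-hyperplane LSH (SimHash) for a trained network's representation. All names and constants (`D`, `NUM_CLASSES`, `NUM_BITS`, `SCALE`, the helper functions) are invented for illustration.

```python
import random

# Hypothetical toy setup (NOT the paper's model): class c has an identifier
# gamma_c in R^D, and an input is x = gamma_c + SCALE * theta, where theta is
# a random nuisance vector standing in for pose/background/lighting.
D = 256           # input dimension (assumed)
NUM_CLASSES = 10  # number of classes / manifolds (assumed)
NUM_BITS = 64     # length of the hash code (assumed)
SCALE = 0.1       # size of the theta-induced shift relative to gamma (assumed)


def gaussian_vector(rng, dim):
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def sample_input(rng, gamma):
    """Generate x = gamma + SCALE * theta for a fresh nuisance vector theta."""
    theta = gaussian_vector(rng, len(gamma))
    return [g + SCALE * t for g, t in zip(gamma, theta)]


def make_hash(rng, dim, num_bits):
    """Random-hyperplane LSH (SimHash): bit i is 1 iff <w_i, x> >= 0."""
    planes = [gaussian_vector(rng, dim) for _ in range(num_bits)]

    def code(x):
        return tuple(int(sum(wj * xj for wj, xj in zip(w, x)) >= 0.0) for w in planes)

    return code


def hamming(a, b):
    return sum(bit_a != bit_b for bit_a, bit_b in zip(a, b))


def mean_distance_to_identifier(seed=0, samples=50):
    """Average Hamming distance between code(x) and code(gamma) for one class."""
    rng = random.Random(seed)
    gamma = gaussian_vector(rng, D)
    code = make_hash(rng, D, NUM_BITS)
    ref = code(gamma)
    total = sum(hamming(code(sample_input(rng, gamma)), ref) for _ in range(samples))
    return total / samples


def one_shot_accuracy(seed=0, queries_per_class=20):
    """Store the code of ONE example per class, then classify fresh inputs
    by the nearest stored code in Hamming distance."""
    rng = random.Random(seed)
    gammas = [gaussian_vector(rng, D) for _ in range(NUM_CLASSES)]
    code = make_hash(rng, D, NUM_BITS)
    support = [code(sample_input(rng, g)) for g in gammas]
    correct = total = 0
    for label, gamma in enumerate(gammas):
        for _ in range(queries_per_class):
            query = code(sample_input(rng, gamma))
            pred = min(range(NUM_CLASSES), key=lambda c: hamming(query, support[c]))
            correct += pred == label
            total += 1
    return correct / total


if __name__ == "__main__":
    print("mean Hamming distance to code(gamma):", mean_distance_to_identifier())
    print("one-shot accuracy:", one_shot_accuracy())
```

In this toy setting, the code of an input is expected to differ from the code of its identifier $\gamma$ in only a few of the 64 bits, because each SimHash bit flips with probability equal to the angle between the two vectors divided by $\pi$, and a small $\theta$-shift changes that angle very little. Codes of different classes disagree on roughly half the bits, since random high-dimensional $\gamma$ vectors are nearly orthogonal. That gap is what makes a single stored example per class enough for nearest-code classification here; whether trained networks behave this way is the question the paper addresses.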
