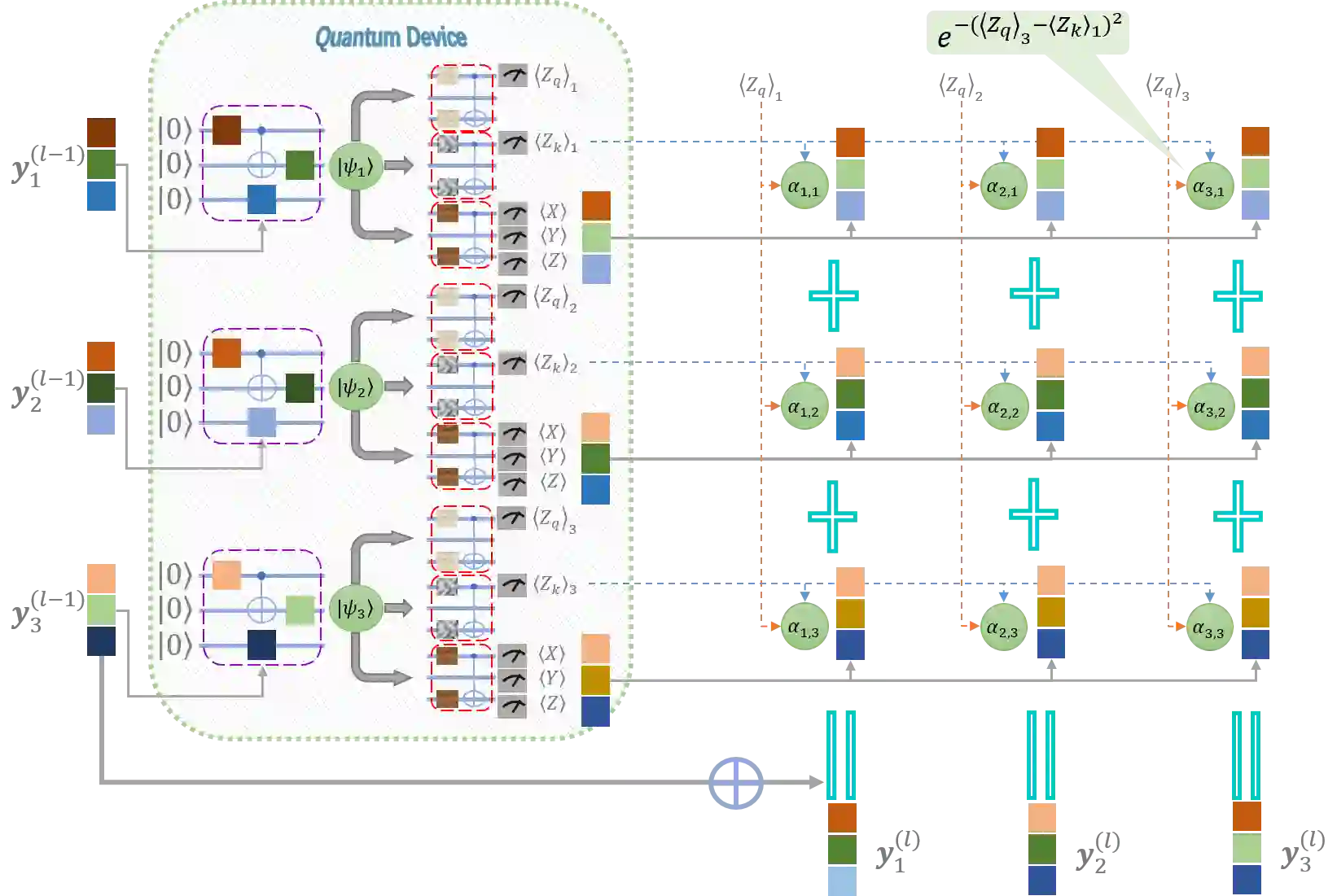

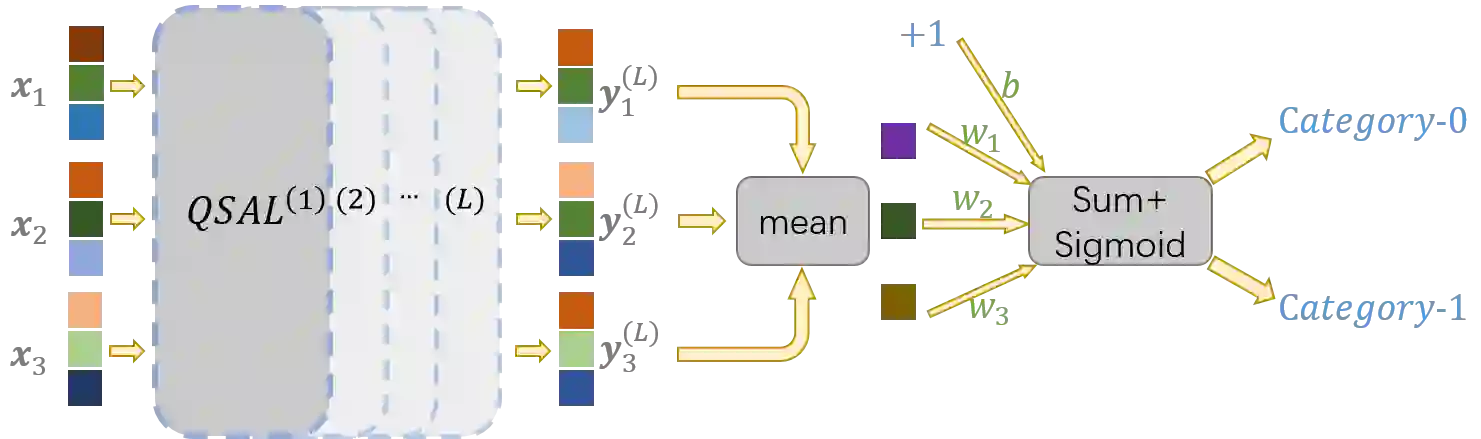

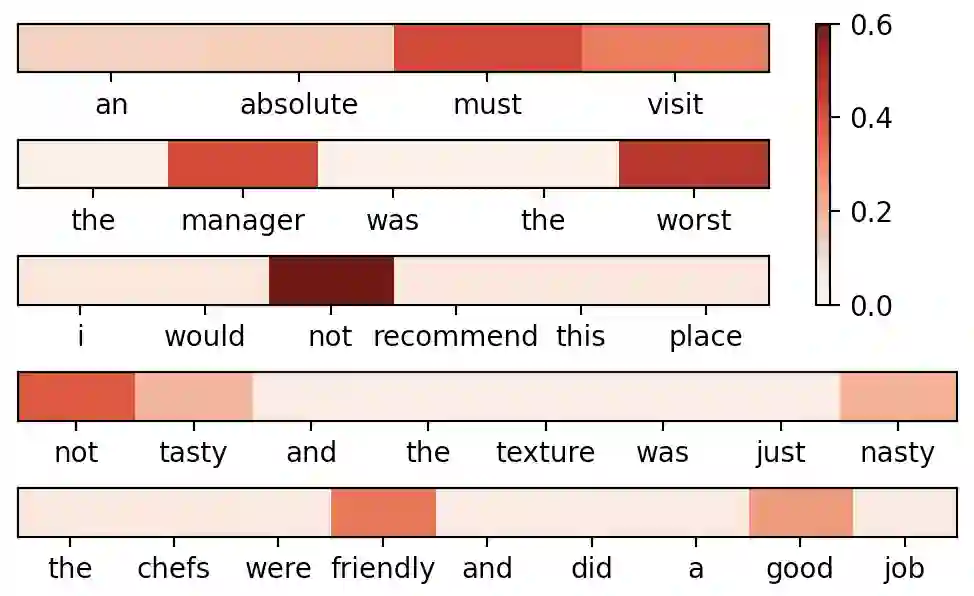

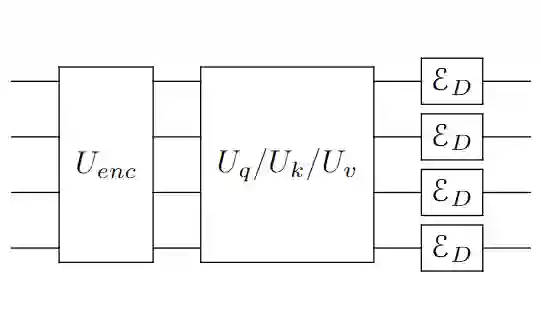

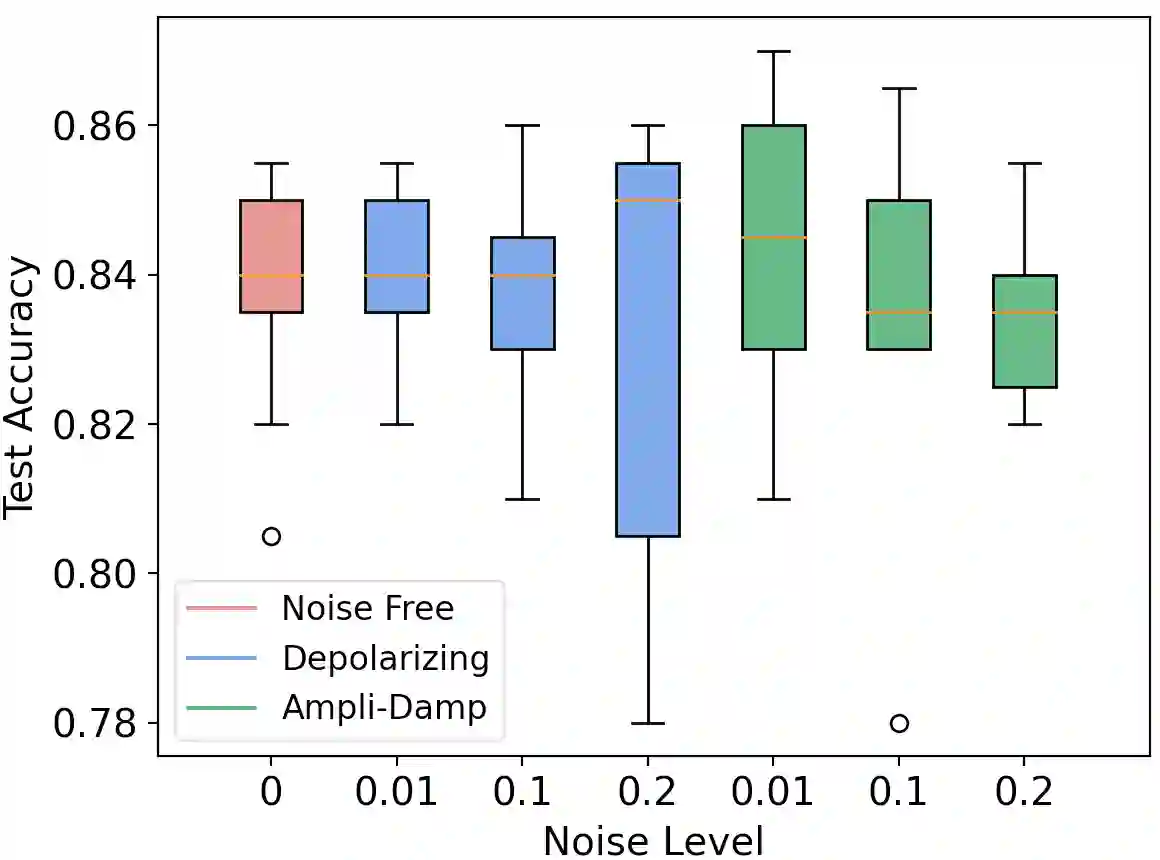

An emerging direction of quantum computing is to establish meaningful quantum applications in various fields of artificial intelligence, including natural language processing (NLP). Although some efforts based on syntactic analysis have opened the door to research in Quantum NLP (QNLP), limitations such as heavy syntactic preprocessing and syntax-dependent network architectures make them impractical for larger, real-world data sets. In this paper, we propose a new, simple network architecture, called the quantum self-attention neural network (QSANN), which addresses these limitations. Specifically, we introduce the self-attention mechanism into quantum neural networks and then use Gaussian projected quantum self-attention as a sensible quantum version of self-attention. As a result, QSANN is effective and scalable on larger data sets and has the desirable property of being implementable on near-term quantum devices. In particular, our QSANN outperforms both the best existing QNLP model based on syntactic analysis and a simple classical self-attention neural network in numerical experiments on text classification tasks using public data sets. We further show that our method is robust to low levels of quantum noise.
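The abstract names Gaussian projected quantum self-attention without stating its formula, so the following is only a classical sketch under stated assumptions, not the paper's implementation. It assumes each query and key is a single real number (standing in for a measured expectation value from a quantum circuit) and that the attention weight between a query and a key is a normalized Gaussian of their difference. The function name `gaussian_attention` and its arguments are hypothetical.

```python
import math


def gaussian_attention(queries, keys, values):
    """Classical stand-in for a Gaussian-weighted self-attention step.

    Assumptions (not taken from the abstract):
      - queries and keys are lists of scalars, e.g. expectation values in [-1, 1]
      - values is a list of equal-length vectors (lists of floats)
      - the weight of key k for query q is exp(-(q - k)^2), normalized over keys
    """
    dim = len(values[0])
    outputs = []
    for q in queries:
        # Unnormalized Gaussian weights: largest when query and key coincide.
        weights = [math.exp(-((q - k) ** 2)) for k in keys]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Output is the weighted average of the value vectors.
        out = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
        outputs.append(out)
    return outputs


if __name__ == "__main__":
    # Three tokens with hypothetical scalar queries/keys and 2-dimensional values.
    q = [0.9, -0.2, 0.1]
    k = [0.8, -0.3, 0.0]
    v = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    for row in gaussian_attention(q, k, v):
        print(row)
```

Under these assumptions the weights stay bounded because the scalars are bounded, which is one plausible reason to prefer a Gaussian kernel over an inner product when the inputs come from measurements; the source does not confirm this motivation.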
