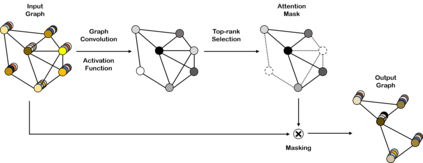

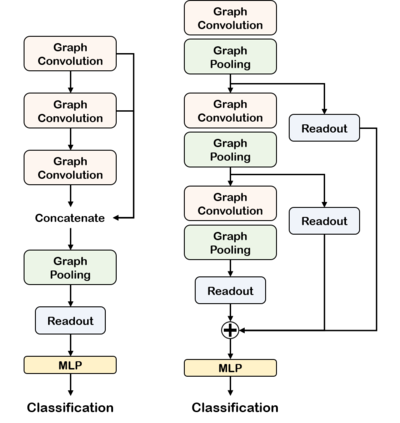

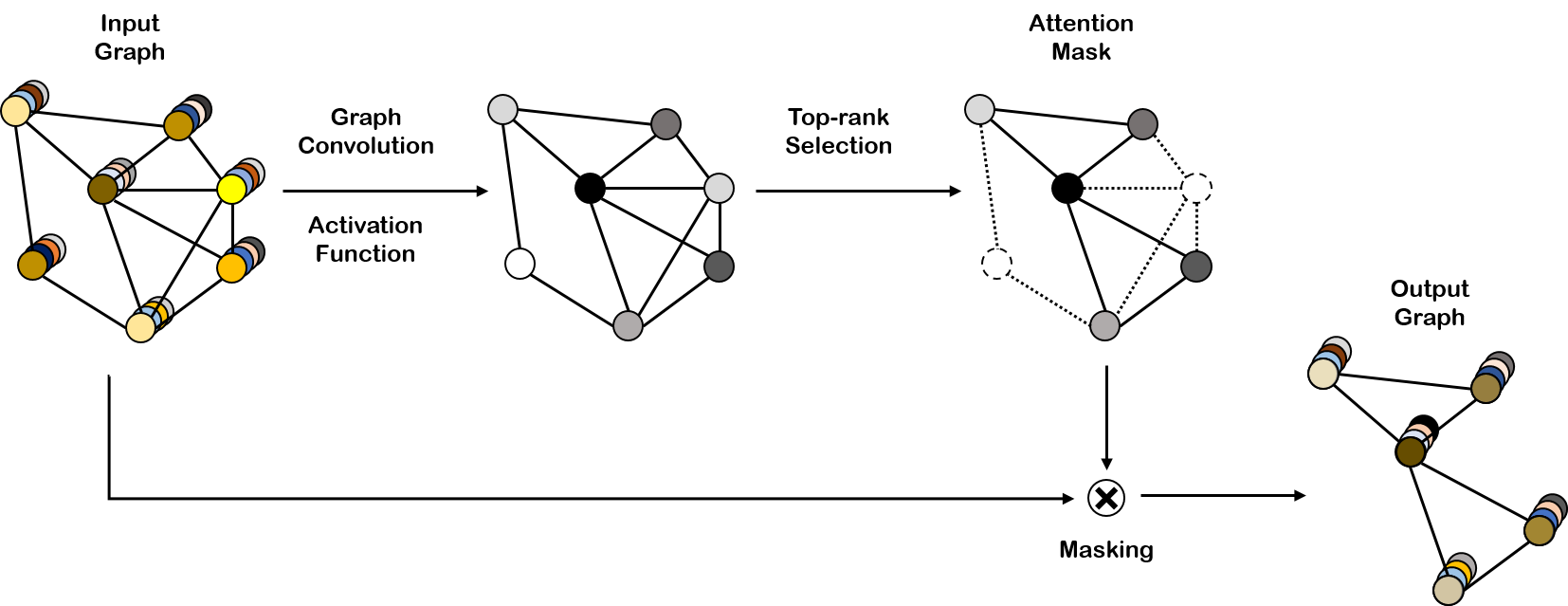

Advanced methods of applying deep learning to structured data such as graphs have been proposed in recent years. In particular, studies have focused on generalizing convolutional neural networks to graph data, which includes redefining the convolution and the downsampling (pooling) operations for graphs. The method of generalizing the convolution operation to graphs has been proven to improve performance and is widely used. However, the method of applying downsampling to graphs is still difficult to perform and has room for improvement. In this paper, we propose a graph pooling method based on self-attention. Self-attention using graph convolution allows our pooling method to consider both node features and graph topology. To ensure a fair comparison, the same training procedures and model architectures were used for the existing pooling methods and our method. The experimental results demonstrate that our method achieves superior graph classification performance on the benchmark datasets using a reasonable number of parameters.
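The abstract describes the pooling step only in words, so the following is a minimal sketch of one way it could look, not the authors' implementation. It assumes that attention scores come from a single one-channel graph convolution with self-loops, symmetric degree normalization and a `tanh` activation, that a fixed fraction of the highest-scoring nodes is kept, and that the kept features are scaled by their scores. The names `gcn_scores`, `self_attention_pool`, `weight` and `ratio` are illustrative and do not come from the text.

```python
import math


def gcn_scores(adj, feats, weight):
    """Score each node with a one-channel graph convolution (assumed form):
    tanh(D^-1/2 (A + I) D^-1/2 X w), so scores depend on features and topology."""
    n = len(adj)
    # Add self-loops so a node's own features contribute to its score.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Project each node's feature vector to a scalar: X w.
    proj = [sum(x * w for x, w in zip(feats[i], weight)) for i in range(n)]
    scores = []
    for i in range(n):
        agg = sum(a_hat[i][j] * proj[j] / math.sqrt(deg[i] * deg[j])
                  for j in range(n))
        scores.append(math.tanh(agg))
    return scores


def self_attention_pool(adj, feats, weight, ratio=0.5):
    """Keep the ceil(ratio * n) highest-scoring nodes and the edges among them."""
    n = len(adj)
    k = max(1, math.ceil(ratio * n))
    scores = gcn_scores(adj, feats, weight)
    ranked = sorted(range(n), key=lambda i: scores[i], reverse=True)
    keep = sorted(ranked[:k])
    # Gate the surviving features by their attention scores.
    pooled_feats = [[scores[i] * x for x in feats[i]] for i in keep]
    # Induced subgraph: only edges between kept nodes survive.
    pooled_adj = [[adj[i][j] for j in keep] for i in keep]
    return keep, pooled_feats, pooled_adj


# Toy input: a path graph 0-1-2-3 with two features per node.
adj = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
weight = [1.0, -1.0]  # hypothetical value; in a trained model this is learned

keep, pooled_feats, pooled_adj = self_attention_pool(adj, feats, weight, ratio=0.5)
print(keep, pooled_feats, pooled_adj)
```

Because the scores are computed by a graph convolution rather than by a projection of each node's features alone, a node's score also depends on its neighbours, which is how both node features and graph topology enter the pooling decision.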
