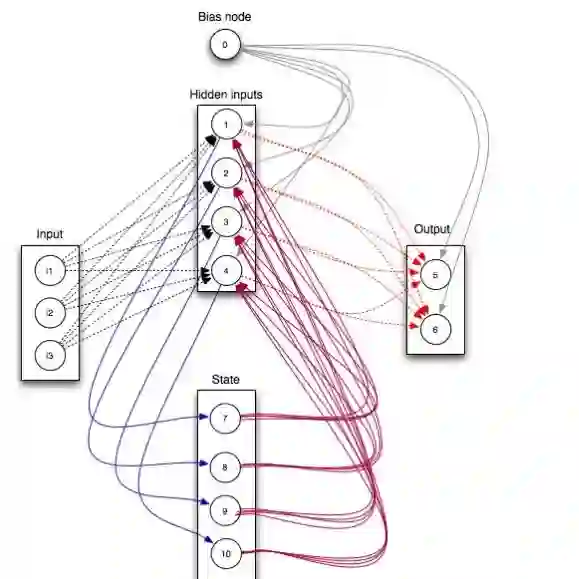

A goal of unsupervised machine learning is to disentangle representations of complex high-dimensional data, making it possible both to interpret the significant latent factors of variation in the data and to manipulate them to generate new data with desirable features. Such methods often rely on an adversarial scheme, in which representations are tuned to prevent discriminators from reconstructing specific information about the data (labels). We propose a simple, effective way of disentangling representations without any need to train adversarial discriminators, and apply our approach to Restricted Boltzmann Machines (RBMs), one of the simplest representation-based generative models. Our approach relies on introducing adequate constraints on the weights during training, which allows us to concentrate information about labels on a small subset of latent variables. The effectiveness of the approach is illustrated on the MNIST dataset, the two-dimensional Ising model, and the taxonomy of protein families. In addition, we show how our framework allows us to compute the cost, in terms of the log-likelihood of the data, associated with disentangling their representations.
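
The abstract does not specify which weight constraints are used. Purely as an illustration (an assumption, not the authors' constraint), the sketch below trains a small binary RBM with one-step contrastive divergence (CD-1) and, after every update, projects the weights of every hidden unit outside a designated "label" subset onto the subspace orthogonal to the differences between class-mean visible configurations. Under this hypothetical constraint, each non-label hidden unit receives the same average input for every label, so class-mean information is confined to the designated units. The names `train`, `label_units`, `label_basis` and `project_free_units` are illustrative, and pure Python is used to keep the sketch dependency-free.

```python
import math
import random

# Illustrative sketch only: a binary RBM trained with CD-1, plus a HYPOTHETICAL
# weight constraint (not the paper's) that removes class-mean information from
# every hidden unit outside a designated "label" subset.


def dot(x, y):
    return sum(p * q for p, q in zip(x, y))


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def hidden_inputs(W, b, v):
    """Input b_mu + sum_i W[mu][i] * v_i received by each hidden unit mu."""
    return [b_mu + dot(row, v) for row, b_mu in zip(W, b)]


def label_basis(data, labels, eps=1e-12):
    """Orthonormal basis (Gram-Schmidt) spanning the differences between the
    mean visible configuration of each class and that of the first class."""
    groups = {}
    for v, y in zip(data, labels):
        groups.setdefault(y, []).append(v)
    means = [[sum(col) / len(vs) for col in zip(*vs)]
             for _, vs in sorted(groups.items())]
    basis = []
    for m in means[1:]:
        u = [x - r for x, r in zip(m, means[0])]
        for e in basis:
            d = dot(u, e)
            u = [x - d * q for x, q in zip(u, e)]
        norm = math.sqrt(dot(u, u))
        if norm > eps:
            basis.append([x / norm for x in u])
    return basis


def project_free_units(W, free_units, basis):
    """Hypothetical constraint: make each free unit's weights orthogonal to
    all label directions, so its mean input is the same for every class."""
    for mu in free_units:
        for e in basis:
            d = dot(W[mu], e)
            W[mu] = [w - d * q for w, q in zip(W[mu], e)]


def cd1_step(W, a, b, v0, lr, rng):
    """One contrastive-divergence (CD-1) update on a single binary sample."""
    p_h0 = [sigmoid(x) for x in hidden_inputs(W, b, v0)]
    h0 = [1.0 if rng.random() < p else 0.0 for p in p_h0]
    p_v1 = [sigmoid(a[i] + sum(W[mu][i] * h0[mu] for mu in range(len(W))))
            for i in range(len(v0))]
    v1 = [1.0 if rng.random() < p else 0.0 for p in p_v1]
    p_h1 = [sigmoid(x) for x in hidden_inputs(W, b, v1)]
    for mu in range(len(W)):
        for i in range(len(v0)):
            W[mu][i] += lr * (p_h0[mu] * v0[i] - p_h1[mu] * v1[i])
        b[mu] += lr * (p_h0[mu] - p_h1[mu])
    for i in range(len(v0)):
        a[i] += lr * (v0[i] - v1[i])


def train(data, labels, n_hidden, label_units, epochs=20, lr=0.05, seed=0):
    """Train with CD-1, re-imposing the constraint after every update."""
    rng = random.Random(seed)
    n_vis = len(data[0])
    W = [[rng.gauss(0.0, 0.01) for _ in range(n_vis)] for _ in range(n_hidden)]
    a, b = [0.0] * n_vis, [0.0] * n_hidden
    free_units = [mu for mu in range(n_hidden) if mu not in label_units]
    basis = label_basis(data, labels)
    for _ in range(epochs):
        for v in data:
            cd1_step(W, a, b, v, lr, rng)
            project_free_units(W, free_units, basis)
    return W, a, b
```

For example, `train(data, labels, n_hidden=3, label_units=[0])` leaves hidden unit 0 unconstrained, while units 1 and 2 end up with identical class-averaged inputs. This toy constraint removes only first-moment (class-mean) label information from the free units; higher-order dependencies on the label can remain, so it should be read as an illustration of "constraining weights during training" rather than as the paper's procedure.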