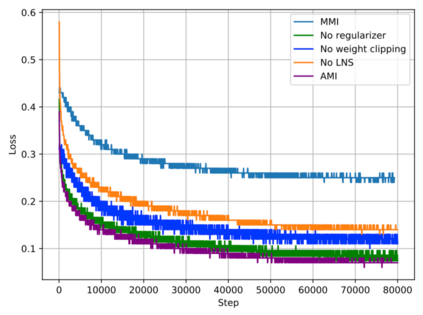

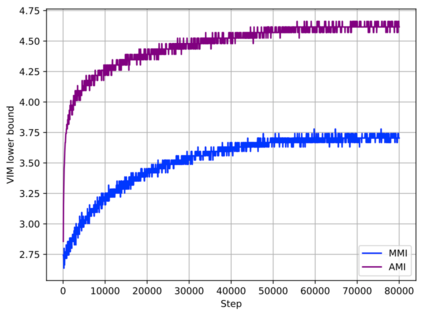

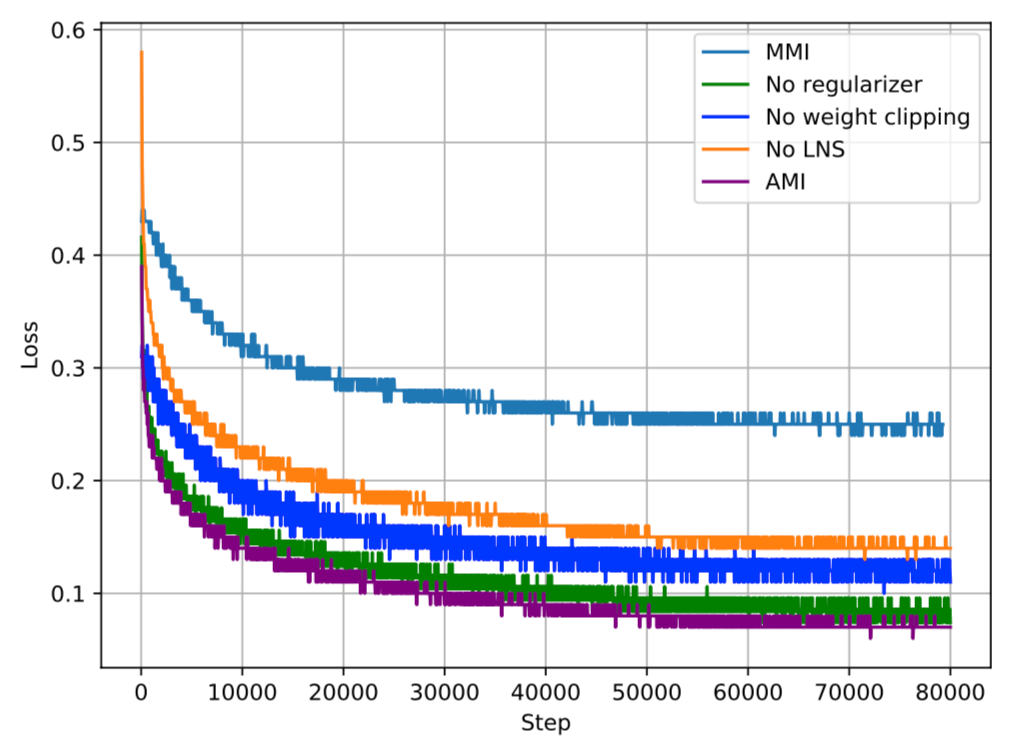

Recent advances in maximizing mutual information (MI) between the source and target have demonstrated the effectiveness of this approach in text generation. However, previous works have paid little attention to modeling the backward network of MI (i.e., the dependency from the target to the source), which is crucial to the tightness of the variational information maximization lower bound. In this paper, we propose Adversarial Mutual Information (AMI): a text generation framework formulated as a novel saddle-point (min-max) optimization that aims to identify joint interactions between the source and target. Within this framework, the forward and backward networks can iteratively promote or demote each other's generated instances by comparing the real and synthetic data distributions. We also develop a latent noise sampling strategy that leverages random variations in the high-level semantic space to enhance long-term dependency in the generation process. Extensive experiments on different text generation tasks demonstrate that the proposed AMI framework can significantly outperform several strong baselines, and we also show that AMI has the potential to lead to a tighter lower bound of maximum mutual information for the variational information maximization problem.
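To make the role of the backward network concrete: the variational information maximization lower bound is commonly written in the standard Barber-Agakov form below, with a backward model $q_\phi(s \mid t)$ from target $t$ to source $s$. This is the textbook bound, not necessarily the exact objective optimized by AMI.

$$I(S;T) = H(S) - H(S \mid T) \ge H(S) + \mathbb{E}_{p(s,t)}\big[\log q_\phi(s \mid t)\big],$$

and the gap equals $\mathbb{E}_{p(t)}\big[\mathrm{KL}\big(p(s \mid t)\,\|\,q_\phi(s \mid t)\big)\big]$, so the bound is tight only when the backward model matches the true posterior. The NumPy sketch below checks this numerically on a hypothetical 2x2 joint distribution. The function names and toy numbers are illustrative assumptions, and the sketch does not implement AMI's adversarial training.

```python
import numpy as np


def entropy(p):
    """Shannon entropy in nats of a discrete distribution (zeros ignored)."""
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))


def mutual_information(p_joint):
    """Exact I(S;T) in nats for a discrete joint p(s, t) with shape (S, T)."""
    p_s = p_joint.sum(axis=1, keepdims=True)  # marginal p(s), shape (S, 1)
    p_t = p_joint.sum(axis=0, keepdims=True)  # marginal p(t), shape (1, T)
    mask = p_joint > 0
    ratio = p_joint[mask] / (p_s * p_t)[mask]
    return float(np.sum(p_joint[mask] * np.log(ratio)))


def variational_lower_bound(p_joint, q_s_given_t):
    """Barber-Agakov bound H(S) + E_{p(s,t)}[log q(s|t)].

    q_s_given_t[s, t] is a hypothetical backward model: each column is a
    distribution over sources and must be > 0 wherever p_joint > 0.
    """
    h_s = entropy(p_joint.sum(axis=1))
    mask = p_joint > 0
    return h_s + float(np.sum(p_joint[mask] * np.log(q_s_given_t[mask])))


def bound_gap(p_joint, q_s_given_t):
    """E_{p(t)}[KL(p(s|t) || q(s|t))], which should equal MI minus the bound."""
    p_t = p_joint.sum(axis=0)
    p_s_given_t = p_joint / p_t  # divides column t by p(t)
    gap = 0.0
    for t in range(p_joint.shape[1]):
        p_col, q_col = p_s_given_t[:, t], q_s_given_t[:, t]
        m = p_col > 0
        gap += p_t[t] * np.sum(p_col[m] * np.log(p_col[m] / q_col[m]))
    return float(gap)


if __name__ == "__main__":
    # Hypothetical toy joint distribution over 2 source and 2 target symbols.
    p_joint = np.array([[0.4, 0.1],
                        [0.1, 0.4]])
    exact_posterior = p_joint / p_joint.sum(axis=0)  # true p(s|t): tight bound
    uniform = np.full((2, 2), 0.5)                   # uninformative q(s|t): loose bound
    mi = mutual_information(p_joint)
    for name, q in [("exact", exact_posterior), ("uniform", uniform)]:
        lb = variational_lower_bound(p_joint, q)
        print(f"{name}: MI={mi:.4f} bound={lb:.4f} gap={bound_gap(p_joint, q):.4f}")
```

With the exact posterior the bound coincides with the true MI; with the uniform backward model the bound drops to zero and the gap equals the full MI. This mirrors the abstract's point that a better backward network yields a tighter lower bound.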

翻译:在最大程度实现源和目标之间相互信息(MI)方面最近取得的进展表明,在文本生成方面,它取得了成效;然而,以前的工作很少注意模拟MI的后向网络(即从目标到源的依赖性),而后向网络对于变异信息最大化较低约束度的紧紧性至关重要。在本文件中,我们提议了双向相互信息(AMI):一个文本生成框架,它是一个新颖的支撑点(最小-最大)优化,目的是确定源和目标之间的联合互动。在此框架内,前向和后向网络能够通过比较真实和合成数据分布来反复促进或演示彼此生成的事例。我们还制定了一种潜在噪音取样战略,利用高层次语系空间随机变异来增强生成过程中的长期依赖性。基于不同文本生成任务的广泛实验表明,拟议的AMI框架可以大大超出几个强有力的基线。我们还表明,AMI有可能使变异信息最大化问题的最大相互信息更加集中。