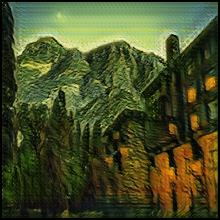

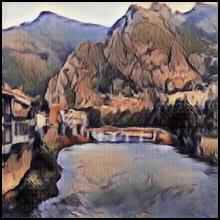

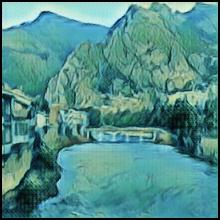

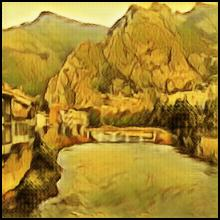

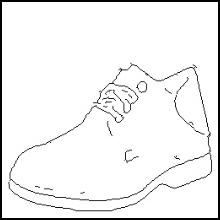

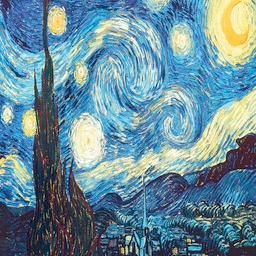

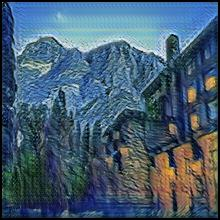

Image-to-image translation aims to learn the mapping between two visual domains. There are two main challenges for many applications: 1) the lack of aligned training pairs and 2) multiple possible outputs from a single input image. In this work, we present an approach based on disentangled representation for producing diverse outputs without paired training images. To achieve diversity, we propose to embed images onto two spaces: a domain-invariant content space capturing shared information across domains and a domain-specific attribute space. Our model takes the encoded content features extracted from a given input and the attribute vectors sampled from the attribute space to produce diverse outputs at test time. To handle unpaired training data, we introduce a novel cross-cycle consistency loss based on disentangled representations. Qualitative results show that our model can generate diverse and realistic images on a wide range of tasks without paired training data. For quantitative comparisons, we measure realism with user study and diversity with a perceptual distance metric. We apply the proposed model to domain adaptation and show competitive performance when compared to the state-of-the-art on the MNIST-M and the LineMod datasets.

翻译:图像到图像翻译旨在学习两个视觉域间的映射。 许多应用程序面临两大挑战:(1) 缺乏匹配的培训配对和(2) 单一输入图像的多种可能产出。 在这项工作中,我们展示了一种基于不同代表的分解方法,用于制作不同产出,而没有配对培训图像。为了实现多样性,我们提议将图像嵌入到两个空间:一个域异内容空间,收集跨域共享的信息和特定域的属性空间。我们的模型采用从给定输入和从属性空间抽样的属性矢量中提取的编码内容特性,以便在测试时产生不同的输出。为了处理未调整的培训数据,我们引入了基于分解的表达方式的新颖的跨周期一致性损失。 定性结果显示,我们的模型可以在没有配对培训数据的情况下,在一系列广泛的任务上生成多样化和现实的图像。 对于定量比较,我们用感知距离测量用户研究的现实主义和多样性。 我们应用了拟议的模型,在与MMIST-M和线式数据集的状态相比,我们应用了域域内调整和显示有竞争力的业绩。