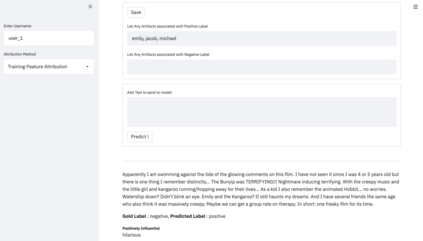

Training the large deep neural networks that dominate NLP requires large datasets. Many of these are collected automatically or via crowdsourcing, and may exhibit systematic biases or annotation artifacts. By the latter, we mean correlations between inputs and outputs that are spurious, insofar as they do not represent a generally held causal relationship between features and classes; models that exploit such correlations may appear to perform a given task well, but fail on out of sample data. In this paper we propose methods to facilitate identification of training data artifacts, using new hybrid approaches that combine saliency maps (which highlight important input features) with instance attribution methods (which retrieve training samples influential to a given prediction). We show that this proposed training-feature attribution approach can be used to uncover artifacts in training data, and use it to identify previously unreported artifacts in a few standard NLP datasets. We execute a small user study to evaluate whether these methods are useful to NLP researchers in practice, with promising results. We make code for all methods and experiments in this paper available.

翻译:对主导NLP的大型深层神经网络进行培训需要大量数据集。 其中许多是自动收集或通过众包收集的,可能表现出系统的偏差或批注性人工制品。 后者指输入和产出之间的关联性,因为它们并不代表特征和类别之间普遍持有的因果关系;利用这种关联的模型似乎能很好地完成某项任务,但从抽样数据中不能成功。 在本文件中,我们建议采用新的混合方法,促进识别培训数据文物,采用新的混合方法,将突出的地图(突出重要的输入特征)与实例归属方法(收集对特定预测有影响力的培训样本)结合起来。 我们表明,这种拟议的培训-速度归属方法可用于发现培训数据中的文物,并用来在几个标准NLP数据集中识别先前未报告的文物。我们进行了一个小用户研究,以评估这些方法在实践中是否对NLP研究人员有用,并取得了可喜的结果。 我们为本文中的所有方法和实验提供了代码。