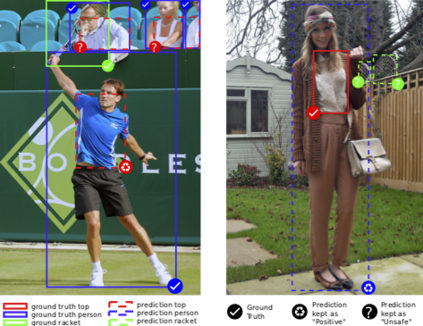

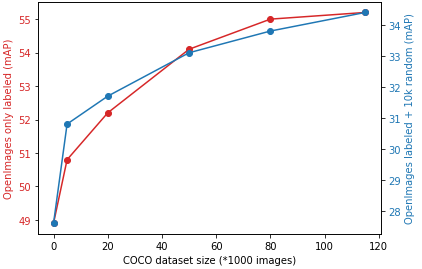

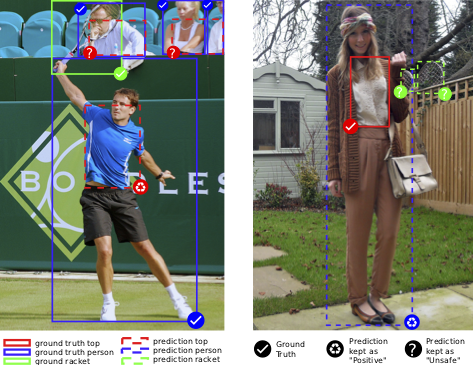

Object detectors tend to perform poorly in new or open domains and require exhaustive, costly annotations from fully labeled datasets. We aim to benefit from several datasets with different categories but without additional labelling, not only to increase the number of detected categories, but also to take advantage of transfer learning and to enhance domain independence. Our dataset merging procedure starts by training several initial Faster R-CNN detectors on the different datasets while using the complementary datasets' images for domain adaptation. As in self-training methods, the predictions of these initial detectors compensate for the missing annotations on the complementary datasets. The final OMNIA Faster R-CNN is trained with all categories on the union of the datasets enriched by predictions. The joint training handles unsafe targets in a softly supervised way, using a new classification loss called SoftSig. Experimental results show that, for fashion detection on images in the wild, merging Modanet with COCO increases the final performance from 45.5% to 57.4%. Applying our soft distillation to the task of detection with domain shift on Cityscapes beats the state of the art by 5.3 points. We hope that our methodology can unlock object detection for real-world applications without immense datasets.
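The merging step described in the abstract (initial detectors filling in missing annotations on the complementary datasets, followed by training on the union) can be illustrated with a minimal sketch. Everything named here is hypothetical and not taken from the paper: the `Annotation` and `Image` containers, the `merge_with_predictions` helper, and the `min_score` threshold are assumptions made for illustration. Detectors are modelled as plain callables, and the SoftSig loss is not shown because the abstract does not define it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), an assumed convention


@dataclass
class Annotation:
    box: Box
    category: str
    score: float = 1.0          # 1.0 for human labels, detector confidence otherwise
    is_predicted: bool = False  # True marks a prediction rather than a ground-truth label


@dataclass
class Image:
    image_id: str
    source: str  # name of the dataset the image comes from
    annotations: List[Annotation] = field(default_factory=list)


# Hypothetical stand-in for a trained initial detector.
Detector = Callable[[Image], List[Annotation]]


def merge_with_predictions(
    datasets: Dict[str, List[Image]],
    detectors: Dict[str, Detector],
    min_score: float = 0.2,  # assumed threshold, not a value from the paper
) -> List[Image]:
    """Build the union of all datasets, enriching each image with predictions
    from the detectors trained on the *other* datasets."""
    merged: List[Image] = []
    for name, images in datasets.items():
        for img in images:
            # Start from the human annotations of the image's own dataset.
            annotations = list(img.annotations)
            for other_name, detect in detectors.items():
                if other_name == name:
                    continue  # own categories are already labeled by hand
                for pred in detect(img):
                    if pred.score >= min_score:
                        annotations.append(
                            Annotation(pred.box, pred.category, pred.score, True)
                        )
            merged.append(Image(img.image_id, img.source, annotations))
    return merged
```

Keeping the `is_predicted` flag and the confidence `score` on each added annotation lets a later training stage treat predicted targets more softly than human labels, which is the role the abstract assigns to the SoftSig loss.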
