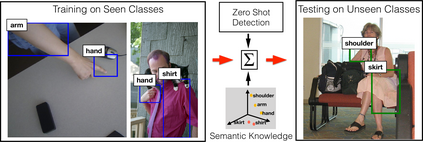

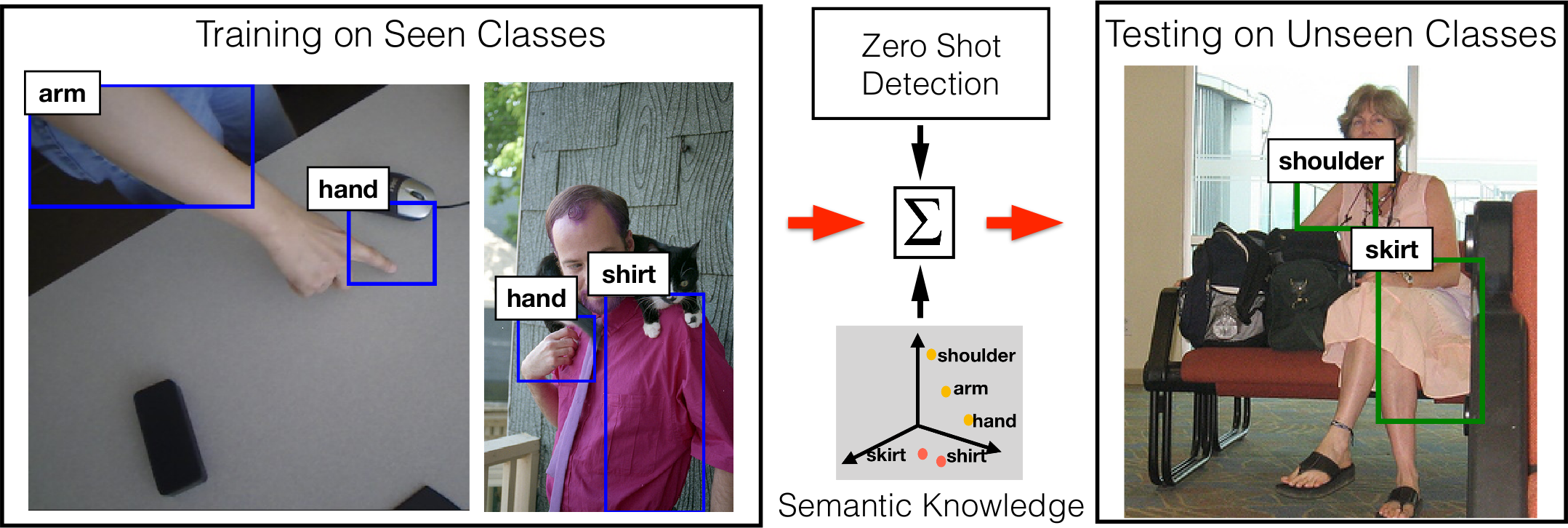

We introduce and tackle the problem of zero-shot object detection (ZSD), which aims to detect object classes which are not observed during training. We work with a challenging set of object classes, not restricting ourselves to similar and/or fine-grained categories as in prior works on zero-shot classification. We present a principled approach by first adapting visual-semantic embeddings for ZSD. We then discuss the problems associated with selecting a background class and motivate two background-aware approaches for learning robust detectors. One of these models uses a fixed background class and the other is based on iterative latent assignments. We also outline the challenge associated with using a limited number of training classes and propose a solution based on dense sampling of the semantic label space using auxiliary data with a large number of categories. We propose novel splits of two standard detection datasets - MSCOCO and VisualGenome, and present extensive empirical results in both the traditional and generalized zero-shot settings to highlight the benefits of the proposed methods. We provide useful insights into the algorithm and conclude by posing some open questions to encourage further research.

翻译:我们介绍并解决零射物体探测问题,目的是探测培训期间未观察到的物体类别; 我们与一组具有挑战性的物体类别合作,不局限于以前零射分类工作中的类似和(或)微粒类别; 我们提出一种原则性办法,首先调整ZSD的视觉和成文嵌入; 然后我们讨论与选择一个背景类有关的问题,并激励两种背景认识学习强力探测器的方法; 其中一种模式使用固定背景类,另一种则基于迭代潜在任务; 我们还概述了与使用数量有限的训练类有关的挑战,并提出了一个基于大量类别辅助数据密集取样语义标签空间的解决办法; 我们提出两个标准探测数据集(MCCO和VisionGenome)的新分类,并介绍传统和普遍零射环境的广泛经验结果,以突出拟议方法的益处; 我们提供了对算法的有用见解,并最后提出一些公开问题,以鼓励进一步的研究。

相关内容

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions to view or manipulate inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from a third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem