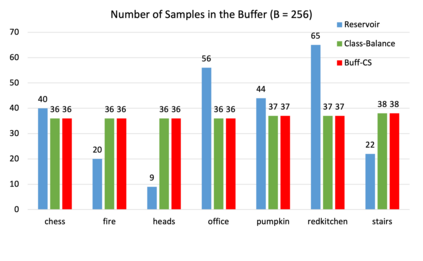

Visual localization is a critical component of several emerging technologies, such as augmented reality, autonomous driving, and robotics. Directly regressing the camera pose or 3D scene coordinates from the input image using deep neural networks has shown great potential. However, such methods assume a stationary data distribution in which all scenes are simultaneously available during training. In this paper, we approach visual localization in a continual learning setup, in which the model is trained on scenes incrementally. Our results show that, as in the classification domain, non-stationary data induces catastrophic forgetting in deep networks for visual localization. To address this issue, we propose a strong baseline based on storing images in a fixed-size buffer and replaying them during training. Furthermore, we propose a new sampling method based on a coverage score (Buff-CS) that adapts existing buffer sampling strategies to the problem of visual localization. Results demonstrate consistent improvements over standard buffering methods on two challenging datasets, 7Scenes and 12Scenes, and on 19Scenes, which combines the scenes of the two.
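The replay baseline described above can be illustrated with a minimal sketch. It assumes reservoir sampling as the buffer-filling rule, which is one common standard buffering strategy; it is not the coverage-score sampling (Buff-CS) proposed in the paper, whose definition is not given here. The names `ReplayBuffer` and `training_batches` are hypothetical, and each sample stands in for an image with its pose label.

```python
import random


class ReplayBuffer:
    """Fixed-capacity buffer filled by reservoir sampling (hypothetical helper).

    Assumption: reservoir sampling stands in for a "standard buffering
    method"; this is NOT the paper's coverage-score sampling (Buff-CS).
    """

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []   # stored samples, e.g. (image, pose) pairs
        self.seen = 0     # number of samples offered so far
        self.rng = random.Random(seed)

    def add(self, sample):
        """Offer one sample; each sample seen so far is kept with equal probability."""
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(sample)
        else:
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = sample

    def sample(self, k):
        """Draw up to k stored samples without replacement."""
        return self.rng.sample(self.items, min(k, len(self.items)))


def training_batches(scenes, buffer, batch_size=4, replay_size=4):
    """Visit scenes one at a time, pairing each new batch with replayed samples."""
    for scene in scenes:
        for start in range(0, len(scene), batch_size):
            new = scene[start:start + batch_size]
            replayed = buffer.sample(replay_size)
            yield new, replayed
            # Store the new samples only after they have been used for training.
            for s in new:
                buffer.add(s)
```

In a training loop, each yielded pair would be concatenated into one mini-batch, so that samples from earlier scenes keep contributing to the loss while a new scene is being learned.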


