局部学习的特征选择:Local-Learning-Based Feature Selection

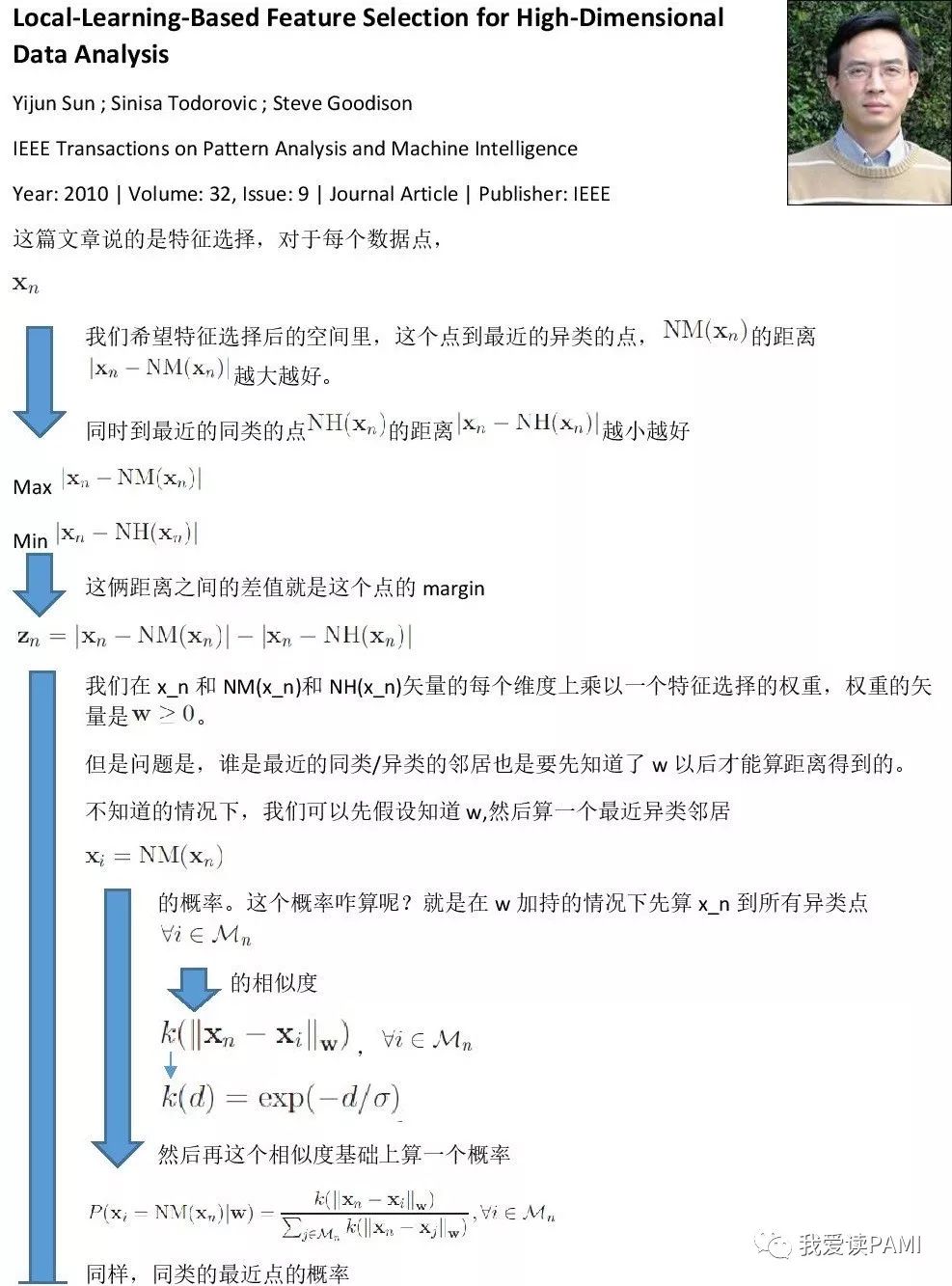

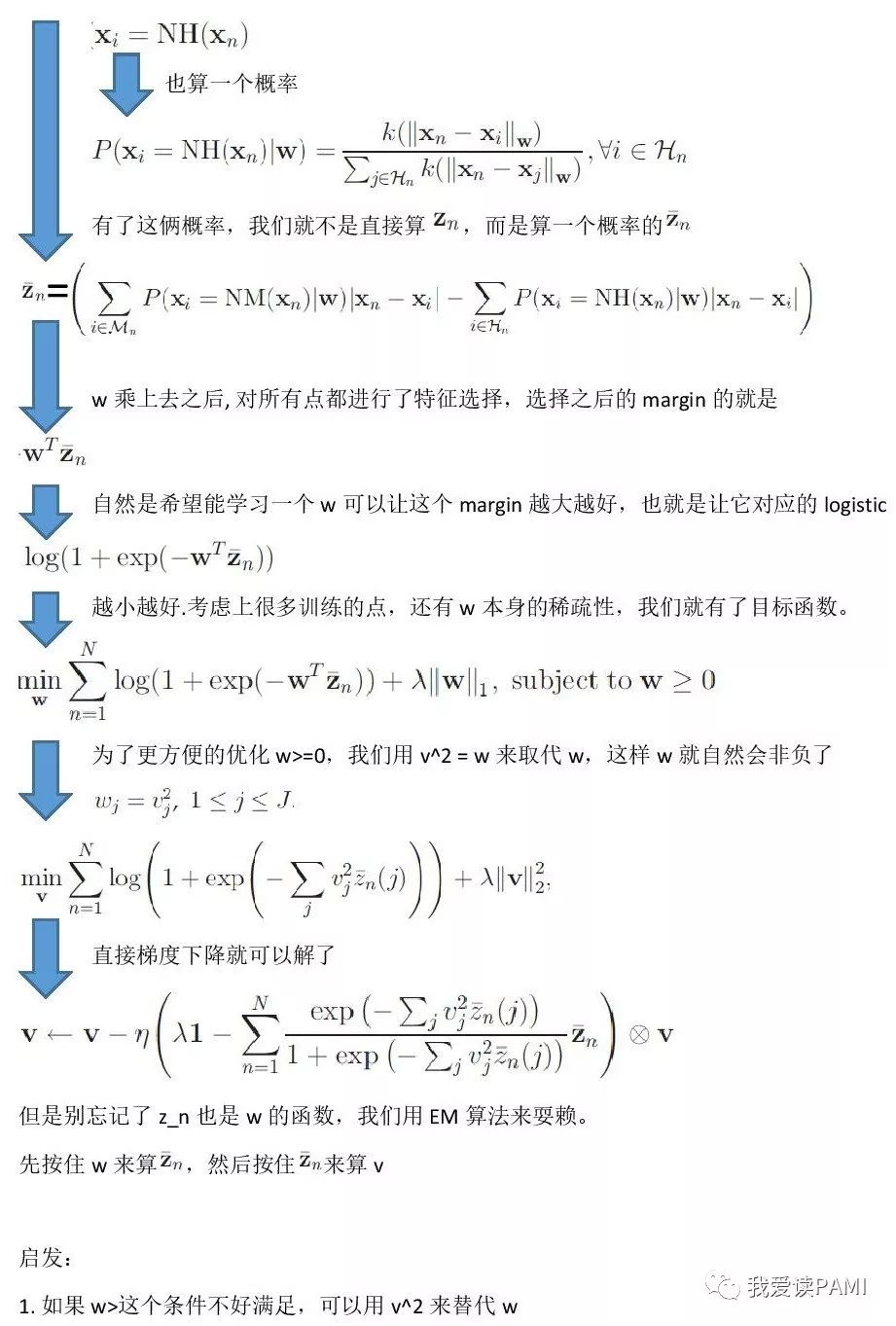

本文说的是如何选择特征可以让一个数据点离自己人越近越好,同时还能远离敌人。问题是,如何知道谁是离自己最近的自己人,谁是离自己最近的敌人呢?在黑暗之中,特征选择照亮方寸的空间,让你能选择自己人远离敌人。作者是前老板,现在在UB做教授的Yijun Sun博士。前台的小妹总是叫他太阳(sun)博士。

Local-Learning-Based FeatureSelection for High-Dimensional Data Analysis

Yijun Sun ; Sinisa Todorovic ; Steve Goodison

IEEE Transactions on Pattern Analysis and MachineIntelligence

Year: 2010 | Volume: 32, Issue: 9 | Journal Article |Publisher: IEEE

Local-Learning-Based FeatureSelection for High-Dimensional Data Analysis

Yijun Sun ; Sinisa Todorovic ; Steve Goodison

IEEE Transactions on Pattern Analysis and MachineIntelligence

Year: 2010 | Volume: 32, Issue: 9 | Journal Article |Publisher: IEEE

Local-Learning-Based FeatureSelection for High-Dimensional Data Analysis

Yijun Sun ; Sinisa Todorovic ; Steve Goodison

IEEE Transactions on Pattern Analysis and MachineIntelligence

Year: 2010 | Volume: 32, Issue: 9 | Journal Article |Publisher: IEEE

This paper considers feature selection for data classification in the presence of a huge number of irrelevant features. We propose a new feature-selection algorithm that addresses several major issues with prior work, including problems with algorithm implementation, computational complexity, and solution accuracy. The key idea is to decompose an arbitrarily complex nonlinear problem into a set of locally linear ones through local learning, and then learn feature relevance globally within the large margin framework. The proposed algorithm is based on well-established machine learning and numerical analysis techniques, without making any assumptions about the underlying data distribution. It is capable of processing many thousands of features within minutes on a personal computer while maintaining a very high accuracy that is nearly insensitive to a growing number of irrelevant features. Theoretical analyses of the algorithm's sample complexity suggest that the algorithm has a logarithmical sample complexity with respect to the number of features. Experiments on 11 synthetic and real-world data sets demonstrate the viability of our formulation of the feature-selection problem for supervised learning and the effectiveness of our algorithm.