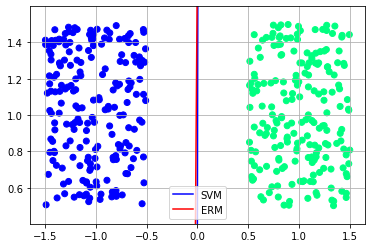

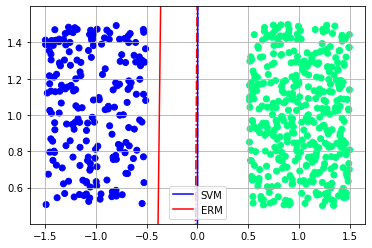

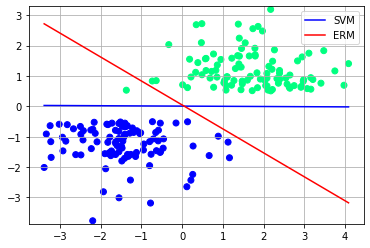

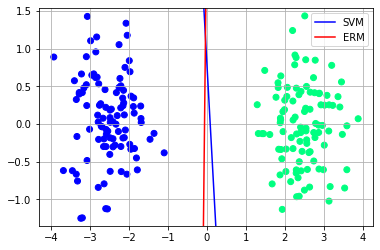

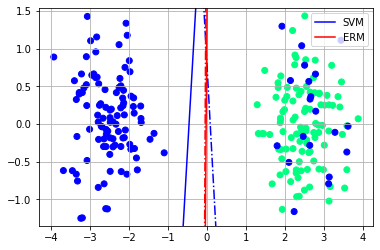

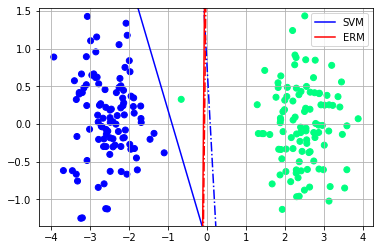

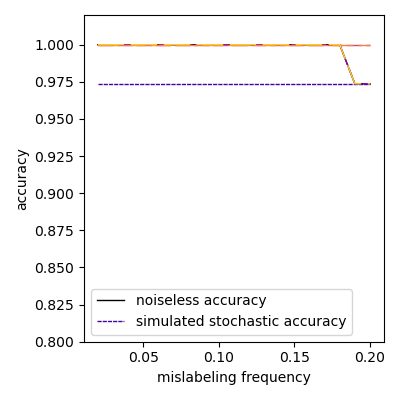

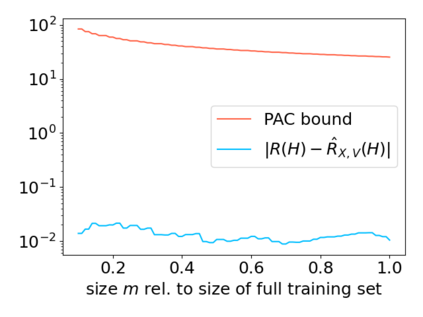

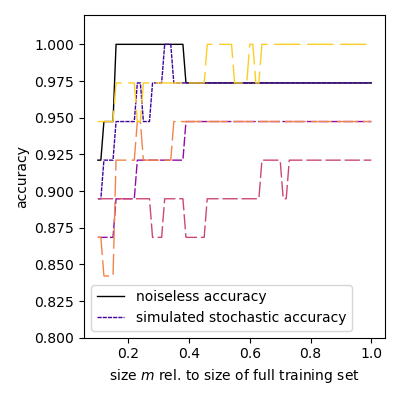

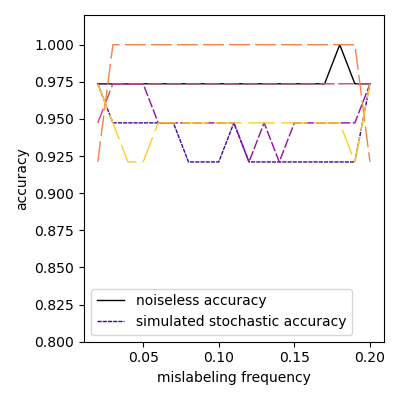

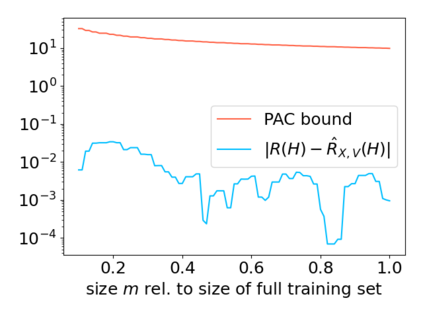

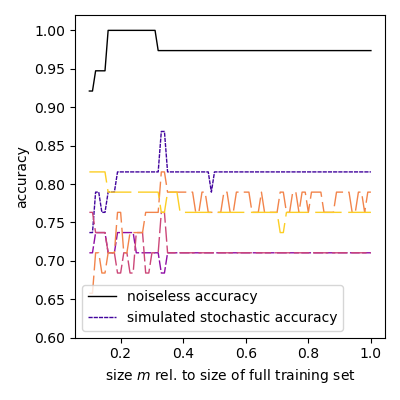

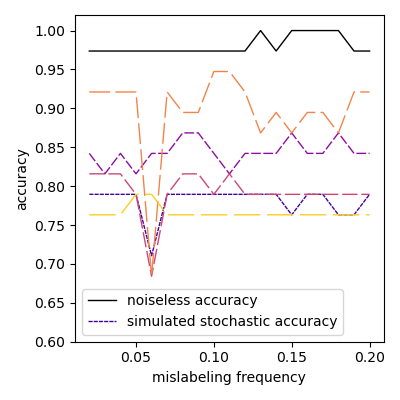

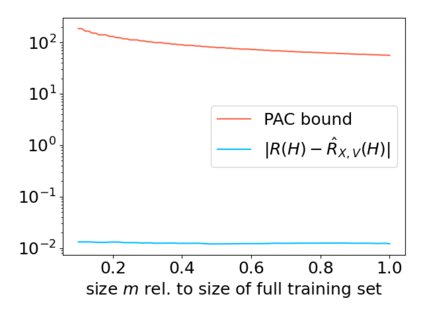

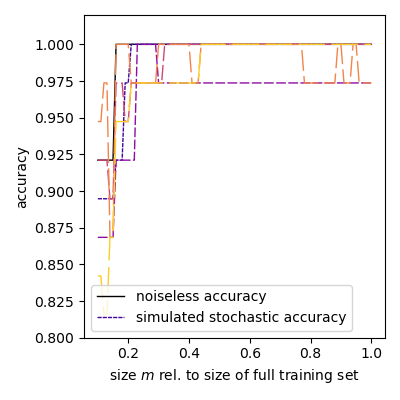

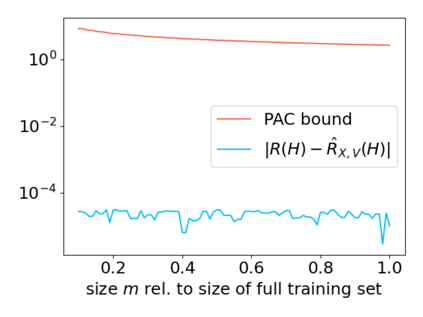

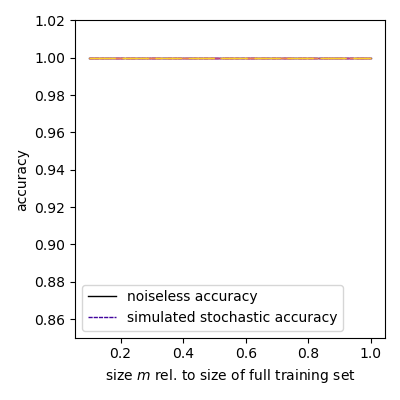

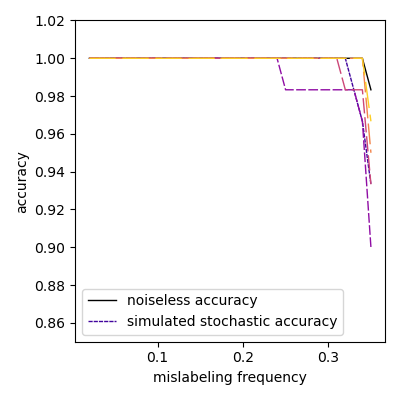

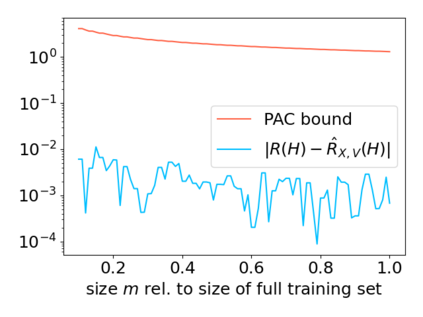

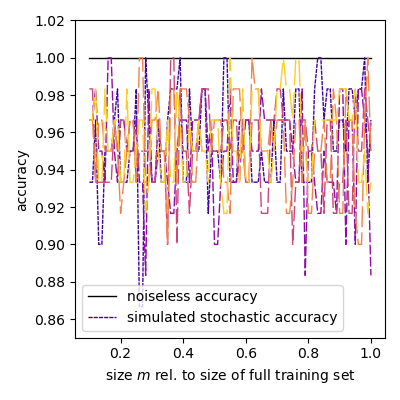

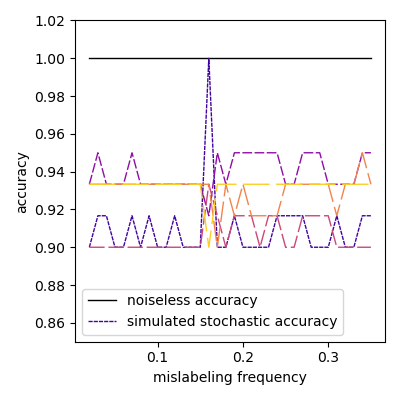

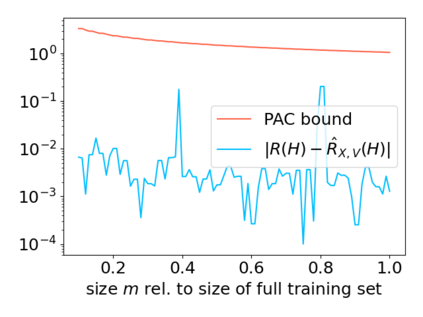

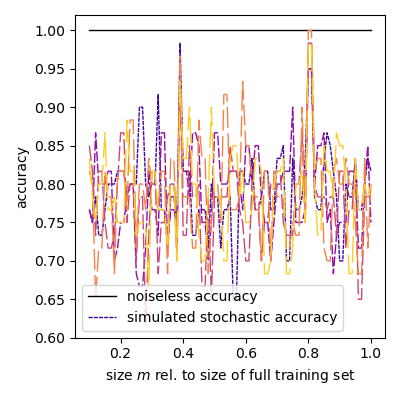

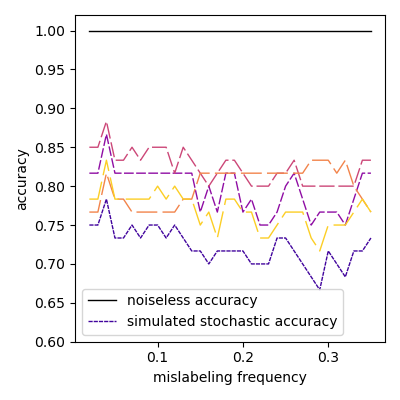

We investigate how a classifying biological neural network functions from the perspective of statistical learning theory, modelling it, in a simplified setting, as a continuous-time stochastic recurrent neural network (RNN) with an identity activation function. In the purely stochastic (robust) regime, we give a generalisation error bound that holds with high probability, thus showing that the empirical risk minimiser is the best-in-class hypothesis. We show that these RNNs retain a partial signature of the paths they are fed, and that this is the only information they exploit for training and classification. We argue that these RNNs are easy to train and robust, and we support these claims with numerical experiments on both synthetic and real data. We also exhibit a trade-off between accuracy and robustness.
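The step from a high-probability generalisation bound to a statement about the empirical risk minimiser usually follows the standard uniform-convergence argument. A generic version is sketched below. The notation is ours, not the paper's: $R$ is the true risk, $\hat R_n$ the empirical risk over $n$ samples, $\mathcal H$ the hypothesis class, $\hat h_n \in \arg\min_{h\in\mathcal H}\hat R_n(h)$ the empirical risk minimiser, $h^\star \in \arg\min_{h\in\mathcal H} R(h)$ the best-in-class hypothesis. We assume a bound of the form $\mathbb P\big(\sup_{h\in\mathcal H}|R(h)-\hat R_n(h)|\le\varepsilon(n,\delta)\big)\ge 1-\delta$. The paper's actual bound may take a different form.

$$
\begin{aligned}
R(\hat h_n) - R(h^\star)
&= \underbrace{\big[R(\hat h_n) - \hat R_n(\hat h_n)\big]}_{\le\,\varepsilon(n,\delta)}
 + \underbrace{\big[\hat R_n(\hat h_n) - \hat R_n(h^\star)\big]}_{\le\,0\ \text{(}\hat h_n\text{ minimises }\hat R_n\text{)}}
 + \underbrace{\big[\hat R_n(h^\star) - R(h^\star)\big]}_{\le\,\varepsilon(n,\delta)} \\
&\le 2\,\varepsilon(n,\delta) \qquad \text{with probability at least } 1-\delta .
\end{aligned}
$$

Under this assumption, the empirical risk minimiser's excess risk over the best-in-class hypothesis is at most $2\varepsilon(n,\delta)$. It therefore matches $h^\star$ in the limit whenever $\varepsilon(n,\delta)\to 0$ as $n$ grows.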

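To make "signature of a path" concrete, the sketch below computes the first two levels of the signature of a piecewise-linear path in $\mathbb R^d$ using Chen's identity. This is an illustration under our own assumptions, not the paper's construction. Truncating at level 2 is an arbitrary choice here, and the function name `signature_level2` is hypothetical. The link to identity-activation RNNs is that the solution of a linear controlled differential equation driven by a path expands as a linear combination of that path's signature terms. A truncated ("partial") signature is therefore a natural summary of what such a linear network can see.

```python
import numpy as np


def signature_level2(path):
    """Level-1 and level-2 signature terms of a piecewise-linear path.

    Hypothetical helper for illustration. `path` has shape (n_points, d)
    and lists the vertices of the path in order. Returns (s1, s2), where
    s1 has shape (d,) and s2 has shape (d, d).
    """
    path = np.asarray(path, dtype=float)
    d = path.shape[1]
    s1 = np.zeros(d)        # level 1: total increment of the path
    s2 = np.zeros((d, d))   # level 2: iterated integrals of order two
    for delta in np.diff(path, axis=0):
        # Chen's identity: concatenate the running signature with that of
        # one linear segment, whose signature is (delta, delta ⊗ delta / 2).
        # s2 must be updated with the *old* s1, so it comes first.
        s2 = s2 + np.outer(s1, delta) + np.outer(delta, delta) / 2.0
        s1 = s1 + delta
    return s1, s2


if __name__ == "__main__":
    # Path (0,0) -> (1,0) -> (1,1): right, then up.
    s1, s2 = signature_level2([[0, 0], [1, 0], [1, 1]])
    # Antisymmetric part of level 2 = Lévy area (signed area to the chord).
    levy_area = 0.5 * (s2[0, 1] - s2[1, 0])
    print(s1, levy_area)  # s1 = [1, 1], Lévy area = 0.5
```

The symmetric part of the level-2 term is fixed by the increment alone: $S^{ij}+S^{ji}=\Delta^i\Delta^j$. The antisymmetric part (the Lévy area) carries information about the order in which the coordinates moved. This order-dependent information is what distinguishes paths with equal endpoints.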