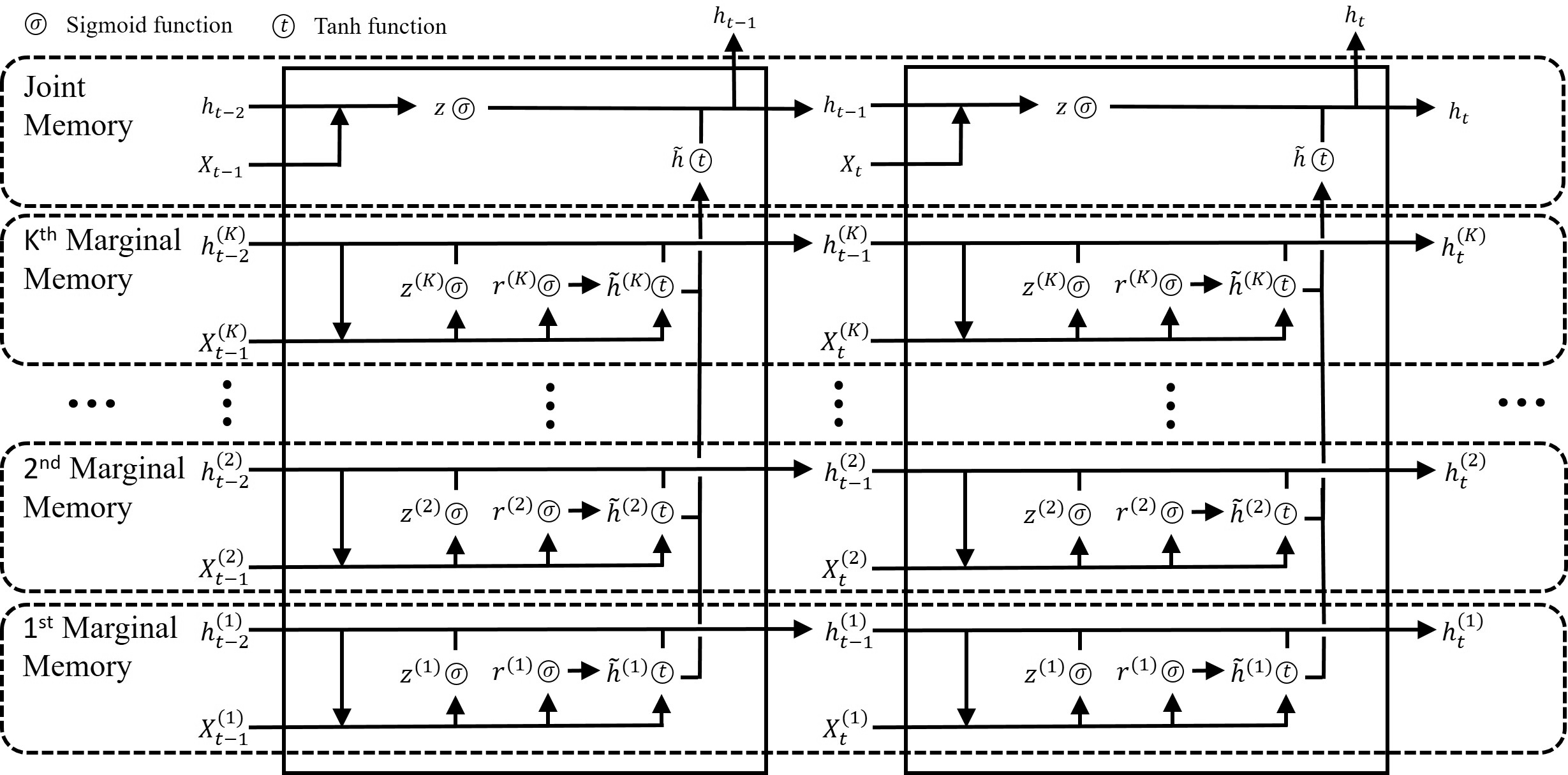

Multivariate sequential learning is fundamentally about extracting dependencies in data. Multivariate data sets such as hourly medical records in intensive care units and multi-frequency phonetic time series often exhibit not only strong serial dependence within individual components (the "marginal" memory) but also non-negligible memory in the cross-sectional dependence between components (the "joint" memory). Because the joint distribution underlying the data-generating process evolves in a complex, multivariate way, we take a data-driven approach and construct a novel recurrent network architecture, termed Memory-Gated Recurrent Networks (mGRN), with gates that explicitly regulate two distinct types of memory: the marginal memory and the joint memory. Through a combination of comprehensive simulation studies and empirical experiments on a range of public datasets, we show that the proposed mGRN architecture consistently outperforms state-of-the-art architectures targeting multivariate time series.
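
To make the distinction between the two memory types concrete, here is a minimal NumPy sketch. It is not the authors' gating equations, which this abstract does not give. It assumes a simplified stand-in: each component gets its own standard GRU cell (a "marginal" state that sees only that component), and one further GRU cell reads the concatenated marginal states (a "joint" state). The names `GRUCell` and `two_memory_forward`, the hidden sizes, and the random untrained weights are all hypothetical choices made for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class GRUCell:
    """Standard GRU cell with random, untrained weights (illustration only)."""

    def __init__(self, input_size, hidden_size, rng):
        shape = (hidden_size, input_size + hidden_size)
        # One weight matrix per gate, applied to [input, previous state].
        self.W_z = rng.normal(0.0, 0.1, shape)  # update gate
        self.W_r = rng.normal(0.0, 0.1, shape)  # reset gate
        self.W_h = rng.normal(0.0, 0.1, shape)  # candidate state

    def step(self, x, h_prev):
        xh = np.concatenate([x, h_prev])
        z = sigmoid(self.W_z @ xh)
        r = sigmoid(self.W_r @ xh)
        h_tilde = np.tanh(self.W_h @ np.concatenate([x, r * h_prev]))
        # Convex combination of the old state and the candidate state.
        return (1.0 - z) * h_prev + z * h_tilde


def two_memory_forward(series, marginal_size=4, joint_size=8, seed=0):
    """Run a (T, M) series through per-component and joint recurrent states.

    Returns (marginal_states, joint_state) with shapes
    (M, marginal_size) and (joint_size,).
    """
    rng = np.random.default_rng(seed)
    T, M = series.shape
    # Assumption: one independent cell per component for the marginal memory.
    marginal_cells = [GRUCell(1, marginal_size, rng) for _ in range(M)]
    # Assumption: the joint memory reads all marginal states at each step.
    joint_cell = GRUCell(M * marginal_size, joint_size, rng)

    h_marg = [np.zeros(marginal_size) for _ in range(M)]
    h_joint = np.zeros(joint_size)
    for t in range(T):
        h_marg = [
            cell.step(series[t, m:m + 1], h)
            for m, (cell, h) in enumerate(zip(marginal_cells, h_marg))
        ]
        h_joint = joint_cell.step(np.concatenate(h_marg), h_joint)
    return np.stack(h_marg), h_joint


if __name__ == "__main__":
    data = np.random.default_rng(1).normal(size=(20, 3))  # T=20 steps, M=3 components
    marginal, joint = two_memory_forward(data)
    print(marginal.shape, joint.shape)
```

In this sketch, a marginal state is by construction unaffected by the other components, while the joint state depends on all of them. That mirrors the separation the abstract describes, although the actual mGRN gates may combine the two memories differently.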
