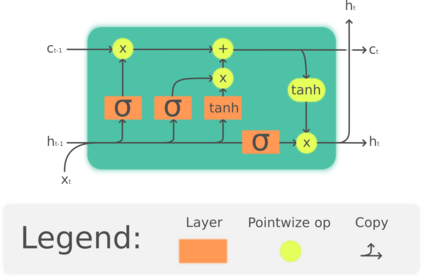

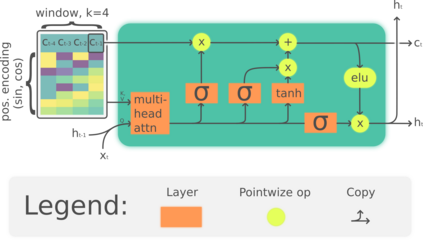

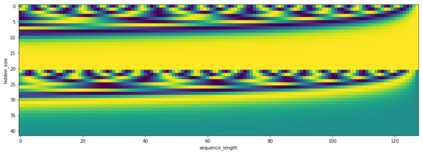

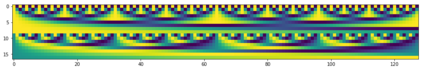

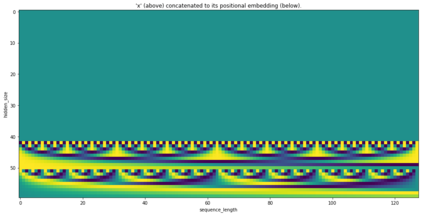

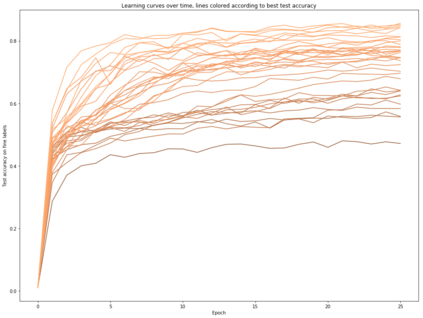

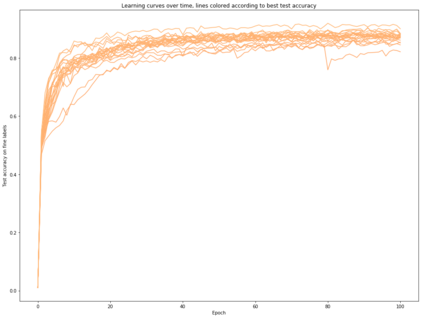

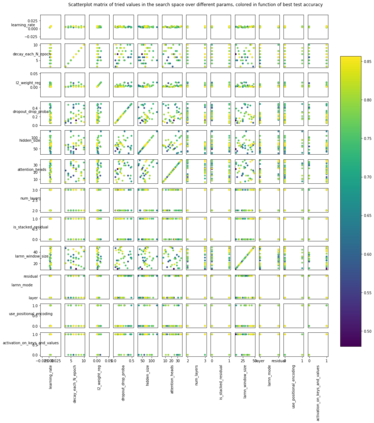

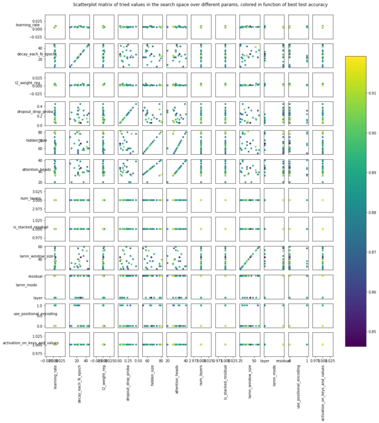

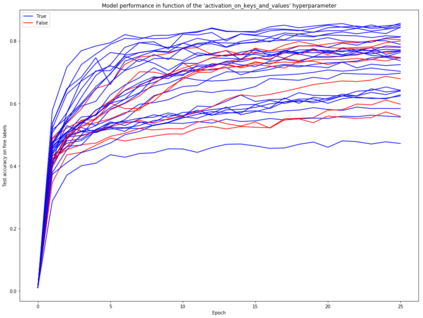

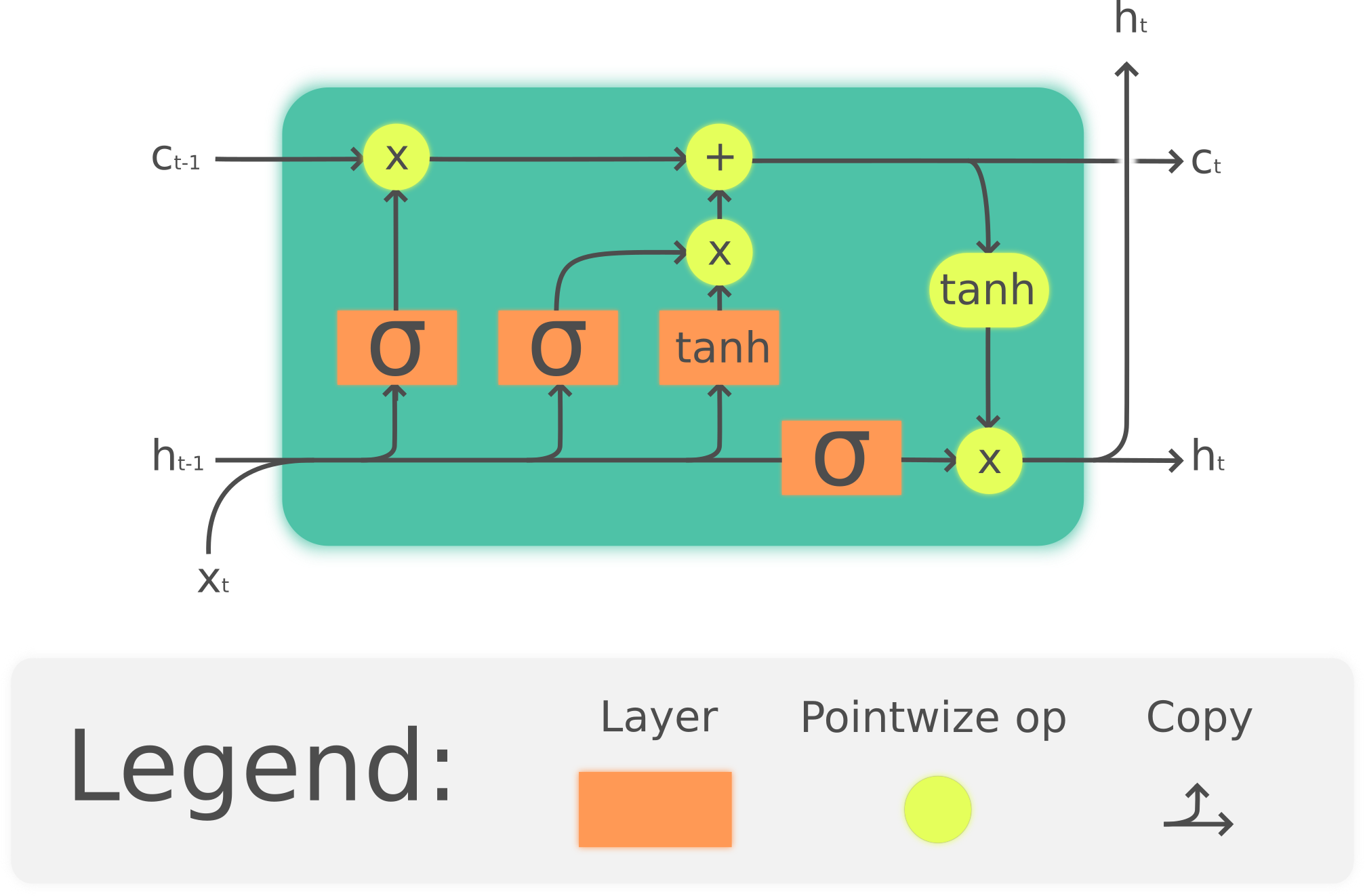

The Linear Attention Recurrent Neural Network (LARNN) is a recurrent attention module derived from the Long Short-Term Memory (LSTM) cell and from ideas of the consciousness Recurrent Neural Network (RNN). Yes, it LARNNs. The LARNN applies attention to its past cell-state values within a limited window of size $k$. Its formulas also draw on the Batch Normalized LSTM (BN-LSTM) cell and on the Transformer Network, from which it borrows the Multi-Head Attention Mechanism. This mechanism is used inside the cell so that the cell can query its own $k$ past values within the attention window. This effectively increases the rank of the state tensor, allowing the cell to perform complex queries over its previous inner memories, which should strengthen the long short-term effect of the memory. With a clever trick, the LARNN cell with attention can be looped over its cell state through time, just like any other RNN cell. This works because the state carried from one time step to the next stores the inner states in a first-in, first-out (FIFO) queue holding the $k$ most recent states; when this queue is represented as a tensor, a static positional encoding can easily be added to it. This architecture yields better results than vanilla LSTM cells: it reaches a test accuracy of 91.92%, compared with the 91.65% previously attained with vanilla LSTM cells. This is not meant as a comparison with other research, where up to 93.35% is obtained, but at the cost of using 18 LSTM cells rather than the 2 to 3 cells analyzed here. Finally, an interesting finding is that adding an activation function inside the linear layers of the multi-head attention mechanism can yield better results in the context studied here.
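The windowed attention described above can be illustrated with a minimal, dependency-free sketch. It is not the author's implementation: the class and function names (`WindowedMultiHeadAttention`, `positional_encoding`, `linear`) are hypothetical, the weights are fixed random values rather than learned ones, the LSTM gates and batch normalization are omitted, and the "static positional encoding" is assumed to be the Transformer's sinusoidal encoding added to each stored state. A `deque` with `maxlen=k` plays the role of the FIFO queue of the $k$ most recent cell states, and an optional `activation` applied inside the linear projections mirrors the finding reported at the end of the abstract.

```python
import math
import random
from collections import deque


def positional_encoding(pos, dim):
    """Sinusoidal Transformer encoding (assumed form of the static positional encoding)."""
    return [
        math.sin(pos / 10000 ** (i / dim)) if i % 2 == 0
        else math.cos(pos / 10000 ** ((i - 1) / dim))
        for i in range(dim)
    ]


def linear(weights, x, activation=None):
    """Compute W x, optionally followed by an element-wise activation."""
    y = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return [activation(v) for v in y] if activation else y


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class WindowedMultiHeadAttention:
    """Hypothetical sketch: attend from a query over the k most recent cell states."""

    def __init__(self, dim, num_heads, k, activation=None, seed=0):
        assert dim % num_heads == 0, "dim must be divisible by num_heads"
        self.dim, self.num_heads, self.k = dim, num_heads, k
        self.activation = activation
        rng = random.Random(seed)
        scale = 1 / math.sqrt(dim)

        def init():
            # Fixed random weights stand in for learned projections.
            return [[rng.gauss(0, scale) for _ in range(dim)] for _ in range(dim)]

        self.wq, self.wk, self.wv, self.wo = init(), init(), init(), init()
        # FIFO queue: appending when full silently drops the oldest state.
        self.window = deque(maxlen=k)

    def push(self, cell_state):
        self.window.append(list(cell_state))

    def __call__(self, query_state):
        if not self.window:
            raise ValueError("no past cell states stored yet")
        # Add the positional encoding to each stored state (position 0 = oldest).
        memory = [
            [s + p for s, p in zip(state, positional_encoding(pos, self.dim))]
            for pos, state in enumerate(self.window)
        ]
        q = linear(self.wq, query_state, self.activation)
        keys = [linear(self.wk, m, self.activation) for m in memory]
        values = [linear(self.wv, m, self.activation) for m in memory]
        d_head = self.dim // self.num_heads
        out = []
        for h in range(self.num_heads):
            sl = slice(h * d_head, (h + 1) * d_head)
            # Scaled dot-product attention for this head over the window.
            scores = [
                sum(a * b for a, b in zip(q[sl], key[sl])) / math.sqrt(d_head)
                for key in keys
            ]
            weights = softmax(scores)
            out.extend(
                sum(w * v[sl][j] for w, v in zip(weights, values))
                for j in range(d_head)
            )
        return linear(self.wo, out)  # concatenate heads, then project back to dim


if __name__ == "__main__":
    attn = WindowedMultiHeadAttention(dim=8, num_heads=2, k=4, activation=math.tanh)
    for t in range(6):
        attn.push([math.sin(t + i) for i in range(8)])
    print(len(attn.window))  # 4: only the k most recent states are kept
    print(len(attn(attn.window[-1])))  # 8
```

Because the window is a bounded FIFO queue, the whole attention memory can be carried as part of the recurrent state and looped over time like any other RNN state, which is the property the abstract relies on.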

翻译:线性关注常规神经网络(LARNN) 是一个来自长期短期内存(LSTM) 的经常性关注模块, 它来自长期短期内存( LSTM) 单元格和意识内存( RNN) 2 的理念。 是的, LARNN 。 LARN 对有限的窗口大小的窗口内存( 美元) 使用其过去的单元格状态值。 公式还来自 Batch 正常 LSTM (BN- LSTM) 单元格和多主管注意机制的变换器网络。 多主管注意机制在单元格内被使用, 这样它就可以在关注窗口内查询自己的过去值$美元。 这有效果, 通过注意机制来提升 Excentor35 的等级。 LARNNN可以进行复杂的查询, LARNNN 过去的等级, LARNN可以对过去的内存信息进行复杂的查询, LARNT 和L 的内存取的内存取结果是更精确的。 这要归因于, 它在第一个阵列中, 它的内, 它的内存取的内存取结果是更精确的, 在最后一步内存中, 它的顺序里, 它会循环里, 它会循环里, 它会循环中, 它会比内存的内存取的内存取的内存的结果是更接近, 它会循环到内存, 。