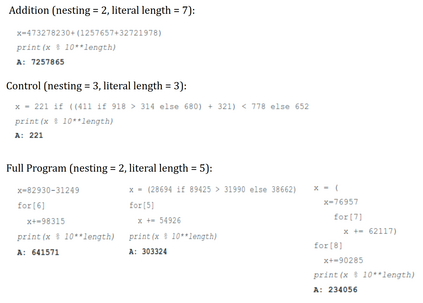

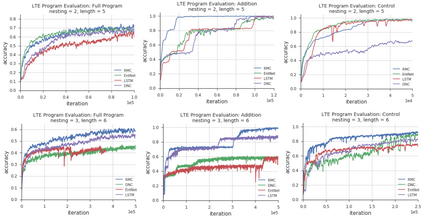

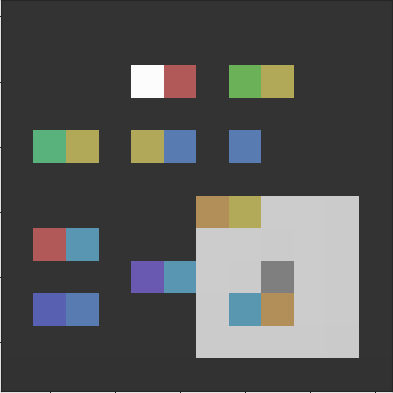

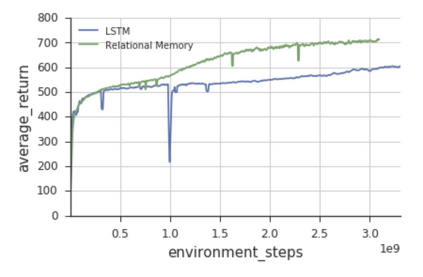

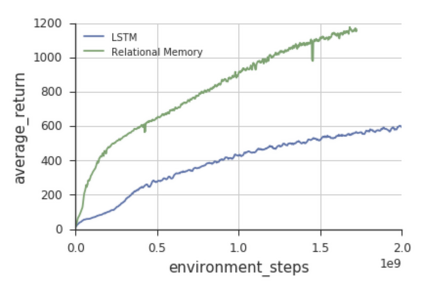

Memory-based neural networks model temporal data by leveraging an ability to remember information for long periods. It is unclear, however, whether they also have an ability to perform complex relational reasoning with the information they remember. Here, we first confirm our intuitions that standard memory architectures may struggle at tasks that heavily involve an understanding of the ways in which entities are connected, i.e., tasks involving relational reasoning. We then improve upon these deficits by using a new memory module, a *Relational Memory Core* (RMC), which employs multi-head dot product attention to allow memories to interact. Finally, we test the RMC on a suite of tasks that may profit from more capable relational reasoning across sequential information, and show large gains in RL domains (e.g., Mini PacMan), program evaluation, and language modeling, achieving state-of-the-art results on the WikiText-103, Project Gutenberg, and GigaWord datasets.
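To make the phrase "multi-head dot product attention to allow memories to interact" concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes a memory stored as a matrix with one row per slot, and the names `attend_over_memory`, `w_q`, `w_k`, and `w_v` are hypothetical. It shows only the attention step: each slot queries every slot, and the attended values form a proposed update. Anything else a full memory core would need (how new inputs enter the memory, gating, recurrence) is left out.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attend_over_memory(memory, w_q, w_k, w_v, num_heads):
    """Multi-head scaled dot-product attention among memory slots.

    memory: (num_slots, d_model) array, one row per memory slot.
    w_q, w_k, w_v: (d_model, d_model) projection matrices (illustrative names).
    Returns the proposed update (num_slots, d_model) and the attention
    weights (num_heads, num_slots, num_slots).
    """
    num_slots, d_model = memory.shape
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    head_dim = d_model // num_heads

    def split_heads(x):
        # (num_slots, d_model) -> (num_heads, num_slots, head_dim)
        return x.reshape(num_slots, num_heads, head_dim).transpose(1, 0, 2)

    q = split_heads(memory @ w_q)
    k = split_heads(memory @ w_k)
    v = split_heads(memory @ w_v)

    # Every slot scores every slot; this is where memories "interact".
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)
    weights = softmax(scores, axis=-1)

    # Weighted sum of values per head, then merge the heads back together.
    attended = weights @ v  # (num_heads, num_slots, head_dim)
    update = attended.transpose(1, 0, 2).reshape(num_slots, d_model)
    return update, weights


rng = np.random.default_rng(0)
num_slots, d_model, num_heads = 4, 8, 2
memory = rng.normal(size=(num_slots, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) * 0.1 for _ in range(3))

update, weights = attend_over_memory(memory, w_q, w_k, w_v, num_heads)
print(update.shape)   # (4, 8)
print(weights.shape)  # (2, 4, 4)
```

Each row of `weights[h]` sums to 1, so every slot's update is a convex combination of the projected values of all slots under head `h`. That is one simple way to read "allowing memories to interact".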
