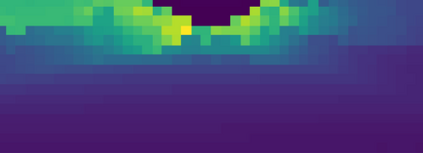

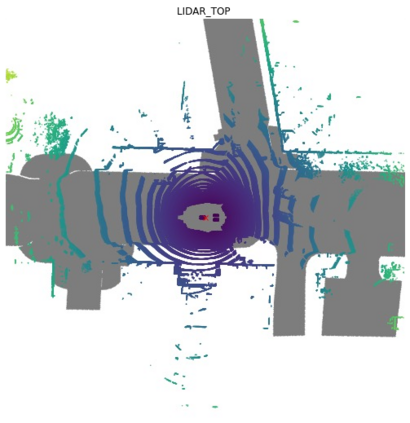

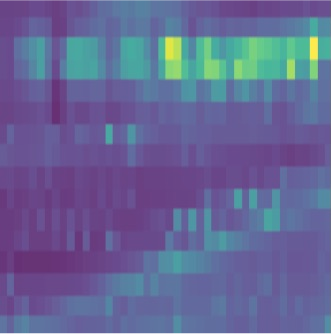

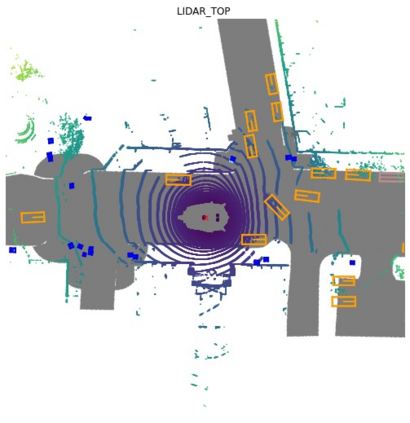

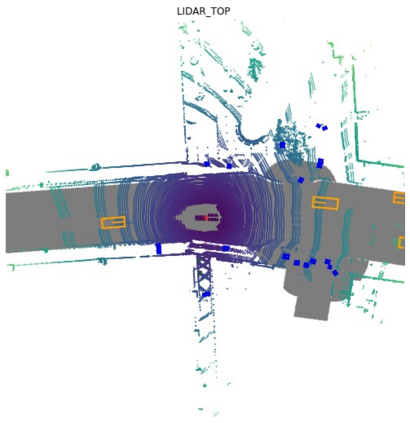

Multi-view camera-based 3D detection is a challenging problem in computer vision. Recent works leverage a pretrained LiDAR detection model to transfer knowledge to a camera-based student network. However, we argue that there is a major domain gap between LiDAR BEV features and camera-based BEV features, as they have different characteristics and are derived from different sources. In this paper, we propose Geometry Enhanced Masked Image Modeling (GeoMIM) to transfer the knowledge of the LiDAR model in a pretrain-finetune paradigm and thereby improve multi-view camera-based 3D detection. GeoMIM is a multi-camera vision transformer with Cross-View Attention (CVA) blocks that uses LiDAR BEV features encoded by the pretrained BEV model as learning targets. During pretraining, GeoMIM's decoder has two branches: a semantic branch that completes dense perspective-view features and a geometry branch that reconstructs dense perspective-view depth maps. The geometry (depth) branch is made camera-aware by taking the camera's parameters as input, which improves transferability. Extensive results demonstrate that GeoMIM outperforms existing methods on the nuScenes benchmark, achieving state-of-the-art performance for camera-based 3D object detection and 3D segmentation.

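To make the pretraining idea in the abstract more concrete, here is a minimal NumPy sketch of two pieces it mentions: masking patch tokens in each camera view, and regressing camera-derived BEV features toward LiDAR BEV targets. Everything specific is an assumption for illustration, not the authors' implementation: the function names, the 50% mask ratio, the tensor shapes, and the choice of mean squared error as the distance (the abstract does not state the loss). The encoder with CVA blocks, the two decoder branches, and the perspective-to-BEV projection are omitted entirely.

```python
import numpy as np


def sample_view_masks(num_views, num_patches, mask_ratio, rng):
    """Return a boolean array (num_views, num_patches); True marks a masked patch.

    Each view gets its own random mask with the same number of masked patches.
    The mask ratio is a free parameter here, not a value taken from the paper.
    """
    num_masked = int(round(mask_ratio * num_patches))
    masks = np.zeros((num_views, num_patches), dtype=bool)
    for v in range(num_views):
        masked_idx = rng.choice(num_patches, size=num_masked, replace=False)
        masks[v, masked_idx] = True
    return masks


def visible_tokens(tokens, masks):
    """Keep only the unmasked tokens of each view.

    tokens: (num_views, num_patches, dim)
    masks:  (num_views, num_patches), same masked count in every view
    returns (num_views, num_visible, dim)
    """
    num_views, num_patches, dim = tokens.shape
    num_visible = num_patches - int(masks[0].sum())
    return tokens[~masks].reshape(num_views, num_visible, dim)


def bev_distillation_loss(camera_bev, lidar_bev):
    """Mean squared error between camera BEV features and LiDAR BEV targets.

    MSE is an assumed stand-in for whatever distance the method actually uses.
    """
    return float(np.mean((camera_bev - lidar_bev) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Six views matches the nuScenes camera rig; patch count and dim are toy values.
    tokens = rng.standard_normal((6, 16, 8))
    masks = sample_view_masks(num_views=6, num_patches=16, mask_ratio=0.5, rng=rng)
    encoder_input = visible_tokens(tokens, masks)
    print(encoder_input.shape)  # (6, 8, 8)

    # Placeholder BEV maps standing in for the decoder output and the LiDAR target.
    lidar_bev = rng.standard_normal((4, 10, 10))
    camera_bev = lidar_bev + 0.1
    print(bev_distillation_loss(camera_bev, lidar_bev))
```

In a real pipeline the visible tokens from all views would pass through the encoder jointly, so that cross-view attention can fill in a region masked in one camera using an overlapping neighbor; the sketch only shows the data flow around that step.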