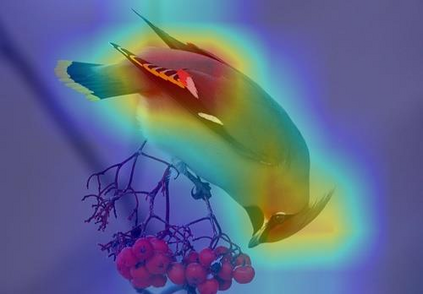

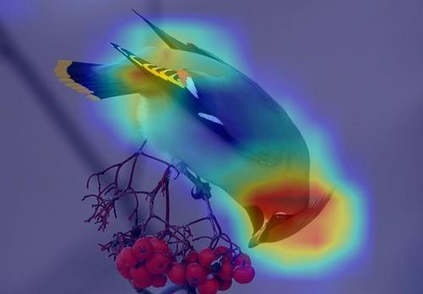

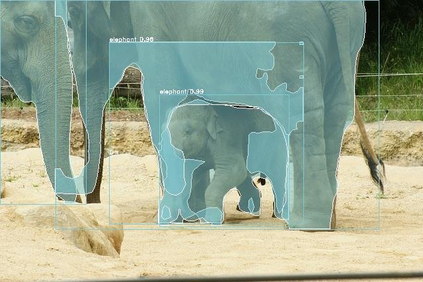

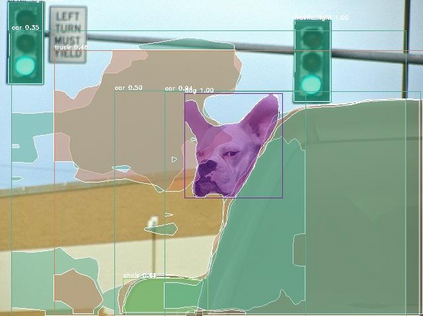

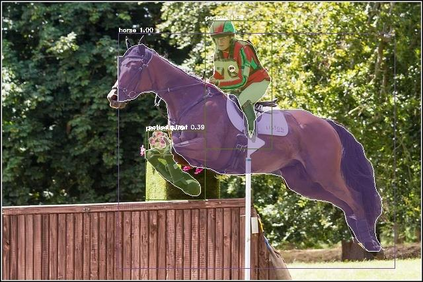

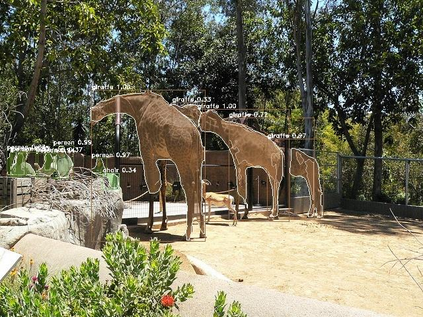

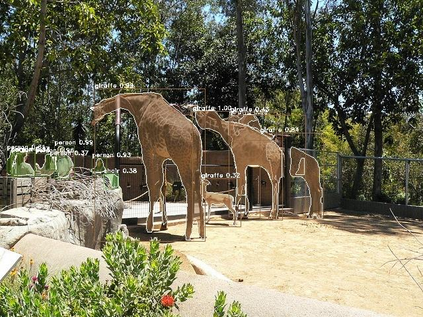

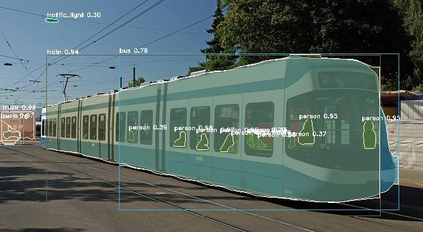

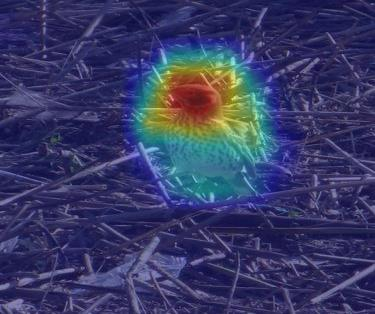

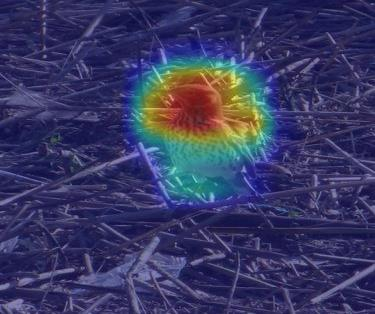

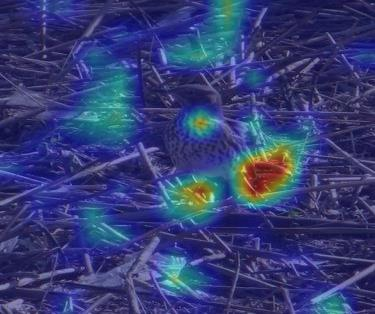

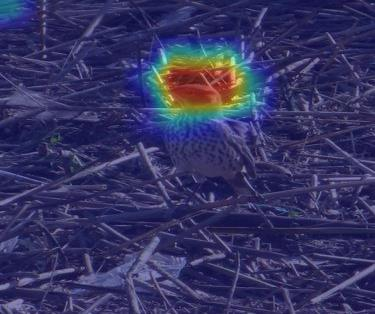

Most object recognition approaches predominantly focus on learning discriminative visual patterns while overlooking the holistic object structure. Though important, structure modeling usually requires significant manual annotations and therefore is labor-intensive. In this paper, we propose to "look into object" (explicitly yet intrinsically model the object structure) through incorporating self-supervisions into the traditional framework. We show the recognition backbone can be substantially enhanced for more robust representation learning, without any cost of extra annotation and inference speed. Specifically, we first propose an object-extent learning module for localizing the object according to the visual patterns shared among the instances in the same category. We then design a spatial context learning module for modeling the internal structures of the object, through predicting the relative positions within the extent. These two modules can be easily plugged into any backbone networks during training and detached at inference time. Extensive experiments show that our look-into-object approach (LIO) achieves large performance gain on a number of benchmarks, including generic object recognition (ImageNet) and fine-grained object recognition tasks (CUB, Cars, Aircraft). We also show that this learning paradigm is highly generalizable to other tasks such as object detection and segmentation (MS COCO). Project page: https://github.com/JDAI-CV/LIO.

翻译:多数对象识别方法主要侧重于学习歧视性的视觉模式,同时忽略整体对象结构。虽然重要,但结构建模通常需要大量的手工说明,因此是劳动密集型的。在本文中,我们提议通过将自我监督的视野纳入传统框架来“观察对象”(明确而内在地模拟对象结构),通过将自我监督的视野纳入传统框架来“观察对象”(明确而内在地模拟对象结构)。我们显示,在无需额外说明和推断速度的成本的情况下,可以大大加强识别骨干,以便进行更强有力的代表性学习。具体地说,我们首先提出一个目标扩展学习模块,以便根据同一类别中共有的视觉模式将对象本地化。然后我们设计一个空间背景学习模块,通过预测范围内的相对位置来模拟物体的内部结构。这两个模块在培训过程中很容易被连接到任何主干网络中,并在推论时间分离。广泛的实验表明,我们的外向定位定位和推断方法在许多基准上取得了很大的绩效收益,包括通用的物体识别(IMGNet)和精准的物体识别任务(C,C,航空器)。我们还表明,这种示范部分是高层次的示范/COMA/C。