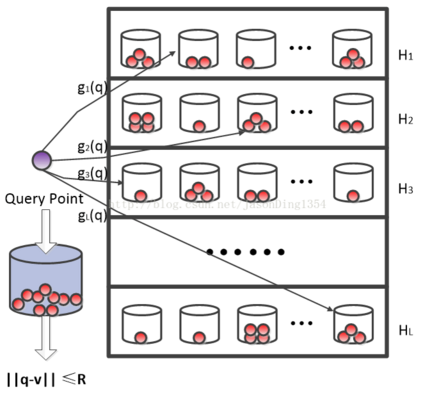

Zero-shot cross-modal retrieval (ZS-CMR) addresses retrieval across heterogeneous data from unseen classes. Typically, to guarantee generalization, pre-defined class embeddings from natural language processing (NLP) models are used to build a common space. In this paper, instead of using an extra NLP model to define a common space beforehand, we take a different approach and construct (or learn) a common Hamming space from an information-theoretic perspective. We term our model Information-Theoretic Hashing (ITH). It is composed of two cascaded modules: an Adaptive Information Aggregation (AIA) module and a Semantic Preserving Encoding (SPE) module. Specifically, the AIA module takes inspiration from the Principle of Relevant Information (PRI) to construct a common space that adaptively aggregates the intrinsic semantics of the different data modalities and filters out redundant or irrelevant information. The SPE module then generates the hash codes of the different modalities by preserving the similarity of intrinsic semantics with the element-wise Kullback-Leibler (KL) divergence. A total correlation regularization term is also imposed to reduce the redundancy among the different dimensions of the hash codes. Extensive experiments on three benchmark datasets demonstrate the superiority of the proposed ITH in ZS-CMR. Source code is available in the supplementary material.
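To make the two quantities named in the abstract concrete, the following is a minimal NumPy sketch, not the ITH implementation. It assumes that each soft hash bit is treated as a Bernoulli parameter for the element-wise KL divergence, and it uses a Gaussian closed form as a stand-in for the total correlation; the abstract does not specify the estimators used, and the function names here are hypothetical.

```python
import numpy as np


def elementwise_bernoulli_kl(p, q, eps=1e-7):
    """Element-wise KL(p || q), treating every entry as a Bernoulli parameter.

    Assumption: soft hash bits lie in (0, 1); this is an illustrative choice,
    not necessarily the formulation used in ITH.
    """
    p = np.clip(p, eps, 1.0 - eps)
    q = np.clip(q, eps, 1.0 - eps)
    return p * np.log(p / q) + (1.0 - p) * np.log((1.0 - p) / (1.0 - q))


def gaussian_total_correlation(codes):
    """Total correlation of the columns of `codes` under a Gaussian assumption.

    TC = 0.5 * (sum_i log var_i - log det Cov). It is zero when the sample
    covariance is diagonal and grows as the dimensions become redundant.
    """
    cov = np.atleast_2d(np.cov(codes, rowvar=False))
    _, logdet = np.linalg.slogdet(cov)
    return 0.5 * (np.sum(np.log(np.diag(cov))) - logdet)


rng = np.random.default_rng(0)

# Soft codes for the same items from two hypothetical modalities.
p = rng.uniform(0.05, 0.95, size=(8, 16))
q = rng.uniform(0.05, 0.95, size=(8, 16))
kl_matrix = elementwise_bernoulli_kl(p, q)  # one value per (item, bit)

# Codes with independent dimensions versus codes with one near-duplicate dimension.
independent = rng.normal(size=(2000, 4))
redundant = independent.copy()
redundant[:, 1] = redundant[:, 0] + 0.1 * rng.normal(size=2000)

tc_independent = gaussian_total_correlation(independent)
tc_redundant = gaussian_total_correlation(redundant)
```

In this sketch, averaging `kl_matrix` would give a similarity-preserving loss term, and the total correlation of the redundant codes comes out larger than that of the independent codes, which is the behaviour a redundancy penalty is meant to discourage.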
