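[Paopao in One Minute] LIMO: Visual Odometry Fusing LIDAR and a Monocular Camera

One minute a day to read through the papers of the top robotics conferences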

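Title: LIMO: Lidar-Monocular Visual Odometry

Authors: Johannes Graeter, Alexander Wilczynski, Martin Lauer

Source: 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)

Compiled by: 陈世浪

Reviewed by: 颜青松

Individuals are welcome to share this post on WeChat Moments. Other organizations or media outlets that wish to republish it should leave a message in the account backend to request authorization.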

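Summary

Higher-level functionality in autonomous driving depends strongly on precise motion estimation, and powerful algorithms have been developed for it. However, the great majority of them focus on either stereo imagery or pure LIDAR measurements. Combining camera and LIDAR is a promising technique for visual localization, but it has so far received little attention.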

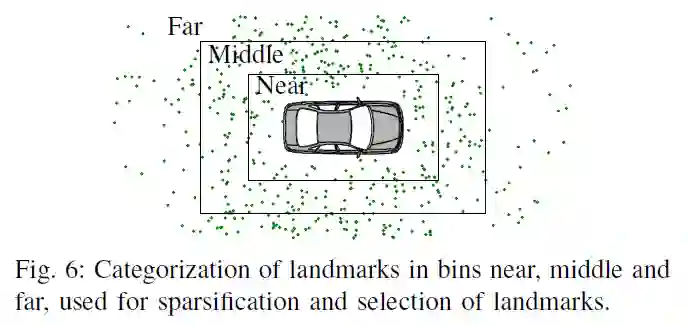

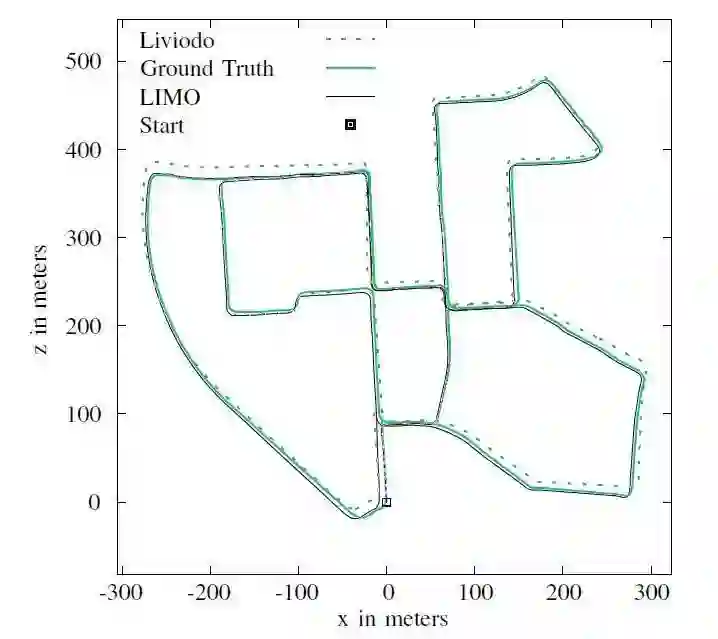

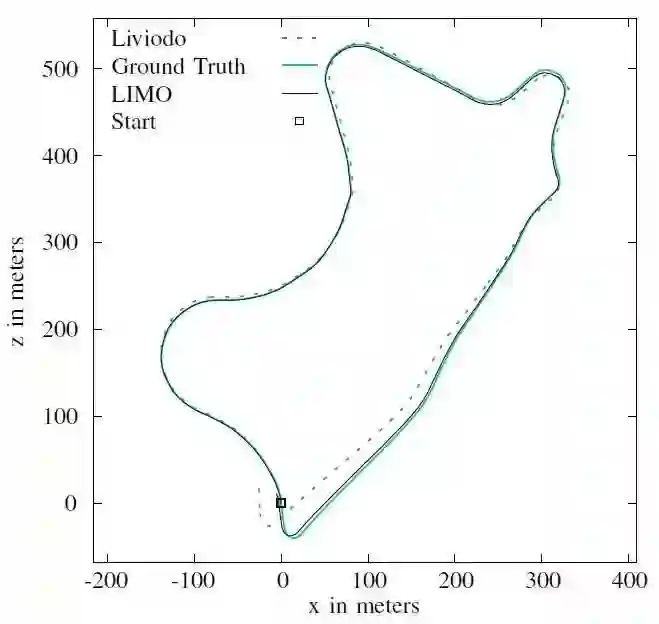

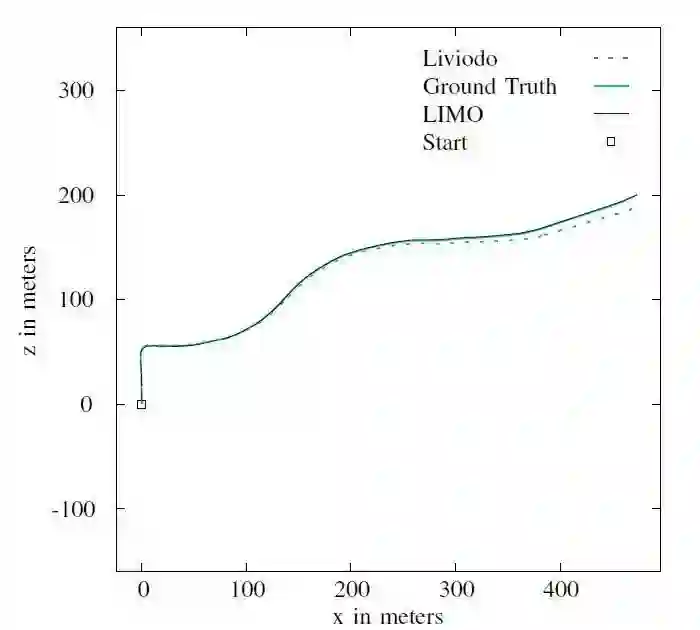

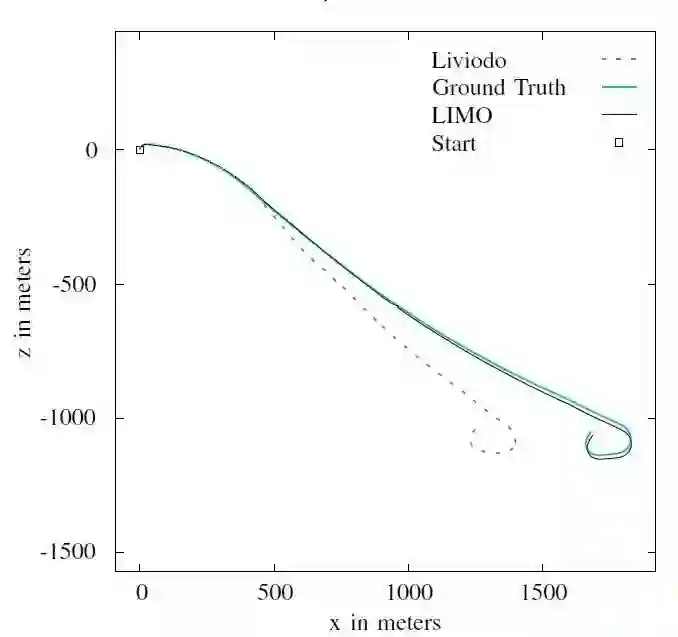

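In this work, we propose an algorithm that extracts depth from LIDAR measurements for camera feature tracks, and we estimate motion with robustified keyframe-based bundle adjustment (BA). Semantic labeling is used for outlier rejection and for weighting vegetation landmarks. The capability of this sensor combination is demonstrated on the competitive KITTI dataset, where it ranks among the top 15.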
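To make the idea of attaching LIDAR depth to a camera feature concrete, here is a minimal sketch. It assumes the LIDAR points are already expressed in the camera frame and that the camera follows a pinhole model with intrinsics `fx`, `fy`, `cx`, `cy`. The function names, the pixel radius, and the use of a simple median over neighboring points are illustrative assumptions; this summary does not describe the paper's actual depth extraction procedure.

```python
from statistics import median


def project_point(point_cam, fx, fy, cx, cy):
    """Pinhole projection of a 3D point (camera frame, z forward) to pixels."""
    x, y, z = point_cam
    if z <= 0.0:
        return None  # behind the camera, so it cannot be observed
    return (fx * x / z + cx, fy * y / z + cy)


def feature_depth(feature_uv, lidar_points_cam, fx, fy, cx, cy, radius_px=5.0):
    """Median depth of LIDAR points that project near an image feature.

    Hypothetical simplification: a median over a pixel neighborhood stands in
    for whatever depth model the real system uses.
    Returns None when no LIDAR point projects within radius_px.
    """
    u0, v0 = feature_uv
    depths = []
    for point in lidar_points_cam:
        uv = project_point(point, fx, fy, cx, cy)
        if uv is None:
            continue
        if (uv[0] - u0) ** 2 + (uv[1] - v0) ** 2 <= radius_px ** 2:
            depths.append(point[2])
    return median(depths) if depths else None


# Toy example: three points project close to the feature, one far away,
# and one lies behind the camera.
points = [
    (0.0, 0.0, 10.0),
    (0.1, 0.0, 10.0),
    (0.2, 0.0, 20.0),
    (5.0, 0.0, 10.0),
    (0.0, 0.0, -1.0),
]
depth = feature_depth((50.0, 50.0), points, fx=100.0, fy=100.0, cx=50.0, cy=50.0)
```

Features with no nearby LIDAR return get `None` here, so a caller can still keep them as depth-less monocular observations.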

The code is open source: https://github.com/johannes-graeter/limo

Abstract

Higher level functionality in autonomous driving depends strongly on a precise motion estimate of the vehicle. Powerful algorithms have been developed. However, their great majority focuses on either binocular imagery or pure LIDAR measurements. The promising combination of camera and LIDAR for visual localization has mostly been unattended. In this work we fill this gap, by proposing a depth extraction algorithm from LIDAR measurements for camera feature tracks and estimating motion by robustified keyframe based Bundle Adjustment. Semantic labeling is used for outlier rejection and weighting of vegetation landmarks. The capability of this sensor combination is demonstrated on the competitive KITTI dataset, achieving a placement among the top 15. The code is released to the community.
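One way to picture how robustification and semantic weighting could interact in a bundle adjustment cost is the per-observation weight sketched below. It uses the standard reweighting factor of the Cauchy loss, w = 1 / (1 + (r/c)^2), as a stand-in robust kernel. The choice of Cauchy, the label string `"vegetation"`, the down-weighting factor, and the rejection threshold are all assumptions made for illustration and are not taken from the paper.

```python
def cauchy_weight(residual_px, scale_px=1.0):
    """Reweighting factor of the Cauchy loss for a reprojection residual."""
    return 1.0 / (1.0 + (residual_px / scale_px) ** 2)


def landmark_weight(residual_px, label, vegetation_factor=0.5, reject_px=10.0):
    """Weight of one landmark observation in a weighted least-squares step.

    Hypothetical policy:
    - observations with a residual above reject_px are treated as outliers,
    - the rest get a Cauchy weight,
    - landmarks labeled "vegetation" are additionally down-weighted.
    """
    if abs(residual_px) > reject_px:
        return 0.0
    weight = cauchy_weight(residual_px)
    if label == "vegetation":
        weight *= vegetation_factor
    return weight


# Same residual, different semantic labels.
w_building = landmark_weight(1.0, "building")
w_vegetation = landmark_weight(1.0, "vegetation")
w_outlier = landmark_weight(20.0, "building")
```

In this sketch the semantic label only scales the weight, so vegetation landmarks still contribute to the estimate but cannot dominate it.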

