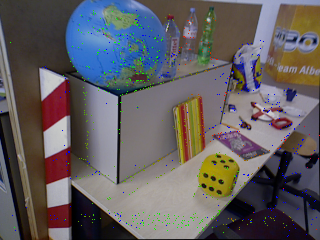

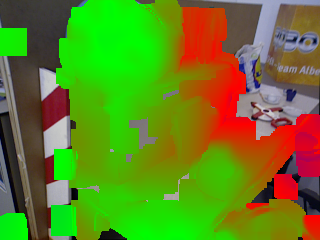

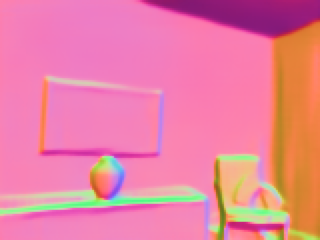

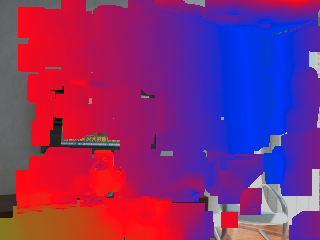

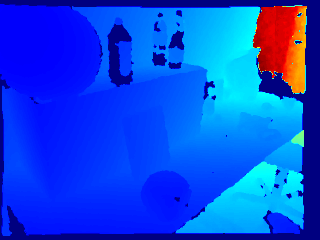

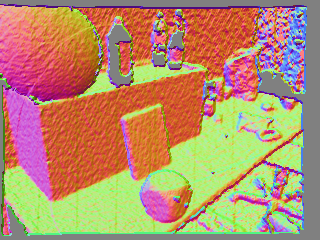

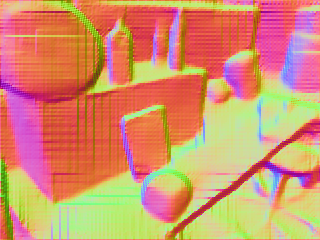

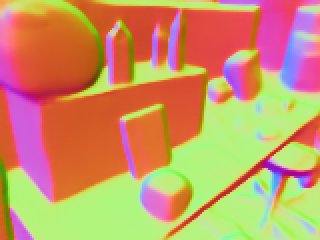

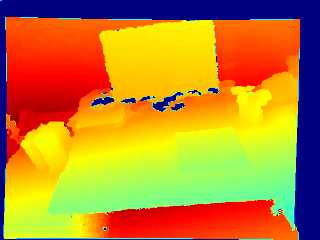

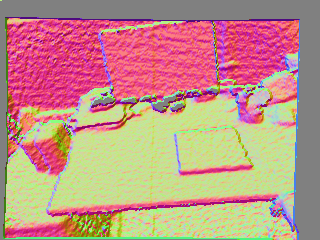

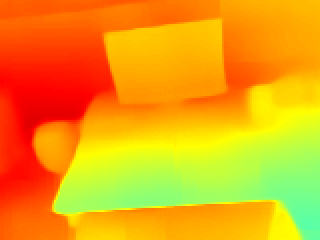

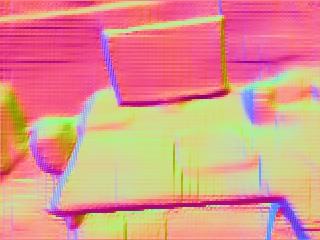

In this paper, we propose a new deep-learning-based dense monocular SLAM method. In contrast to existing methods, the proposed framework constructs a dense 3D model via sparse-to-dense mapping using learned surface normals. With learned single-view depth estimation as a prior for monocular visual odometry, we obtain both accurate positioning and high-quality depth reconstruction. Depth and surface normals are predicted by a single network trained in a tightly coupled manner. Experimental results show that our method significantly improves visual tracking and depth prediction compared with the state of the art in deep monocular dense SLAM.

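The abstract does not spell out how surface normals drive the sparse-to-dense mapping. One plausible geometric reading, offered here only as an illustrative sketch and not as the authors' implementation, is a local planarity assumption: if a pixel `p` has a known depth and a predicted normal `n`, a nearby pixel `q` on the same local plane must satisfy `n · X_q = n · X_p`, which fixes its depth. The function name `propagate_depth`, the pinhole intrinsics `K`, and the example values below are all assumptions introduced for illustration.

```python
import numpy as np


def propagate_depth(K, p, d_p, n, q):
    """Depth at pixel q, assuming q lies on the plane through p's 3D point.

    Hypothetical helper (not from the paper).
    K   : 3x3 pinhole intrinsics matrix
    p,q : (u, v) pixel coordinates
    d_p : known depth (z in the camera frame) at pixel p
    n   : surface normal at p, expressed in the camera frame
    """
    K_inv = np.linalg.inv(K)
    # Back-projected rays with unit z, so that X = depth * ray.
    ray_p = K_inv @ np.array([p[0], p[1], 1.0])
    ray_q = K_inv @ np.array([q[0], q[1], 1.0])
    denom = float(n @ ray_q)
    if abs(denom) < 1e-8:
        raise ValueError("viewing ray of q is (nearly) parallel to the plane")
    # Plane constraint n . (d_q * ray_q) = n . (d_p * ray_p), solved for d_q.
    return d_p * float(n @ ray_p) / denom


# Assumed example intrinsics and a plane x + z = 2 in the camera frame.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
n_tilted = np.array([1.0, 0.0, 1.0]) / np.sqrt(2.0)
d_q = propagate_depth(K, (320, 240), 2.0, n_tilted, (420, 240))
```

In a full system, a step of this kind would presumably be applied from each sparse, well-tracked point outward to its neighbours, with the learned depth serving as a prior where the planar assumption breaks down; those details are not given in the abstract.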