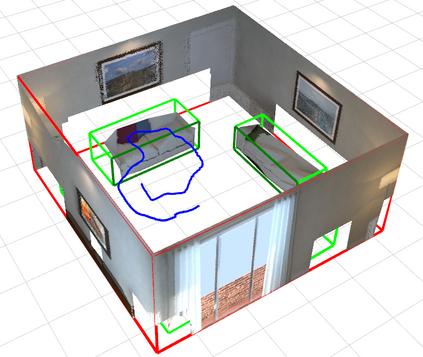

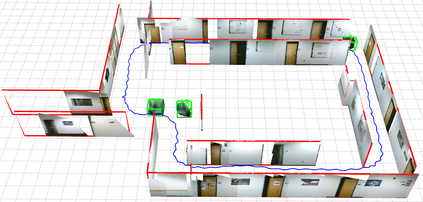

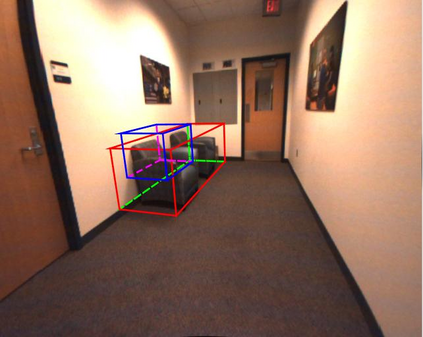

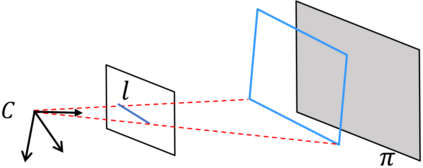

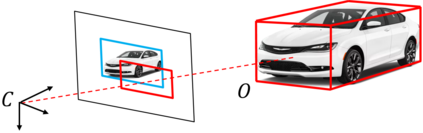

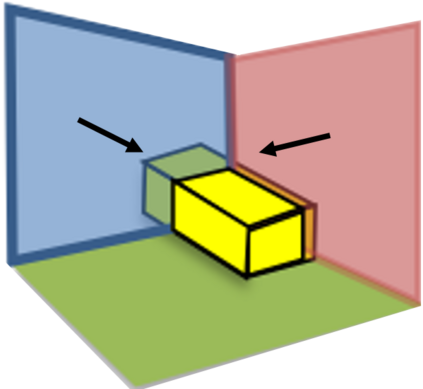

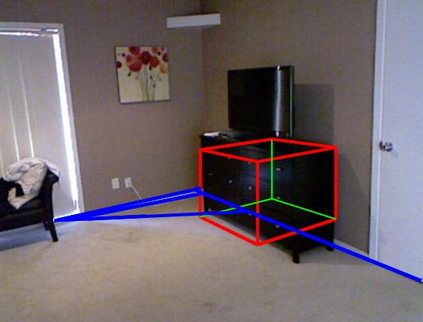

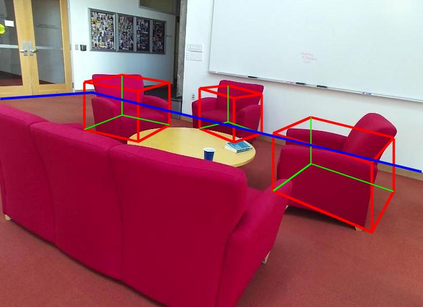

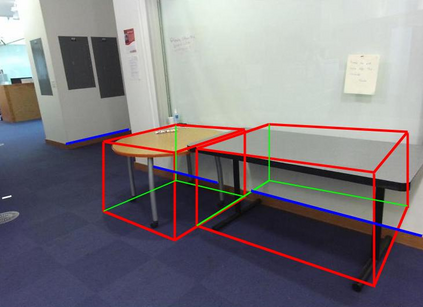

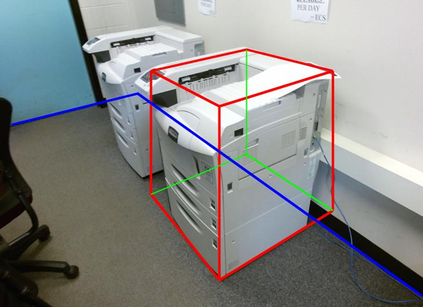

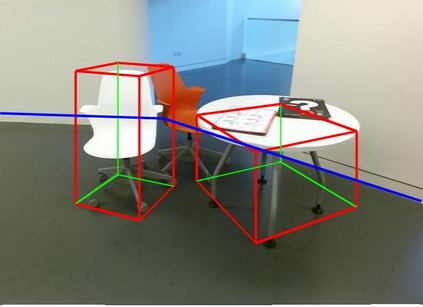

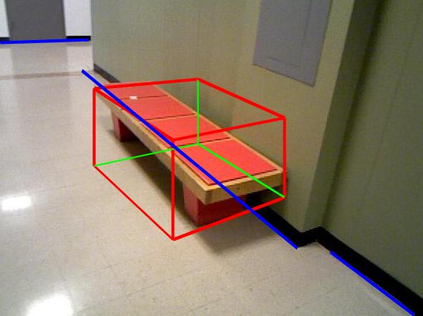

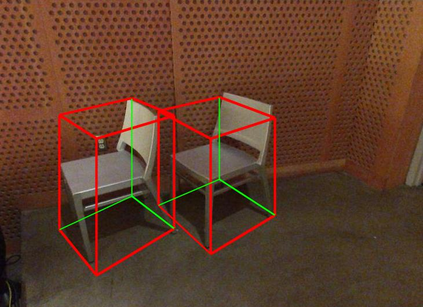

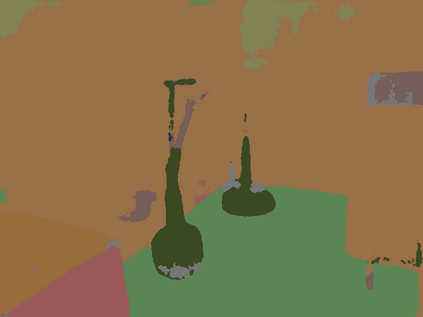

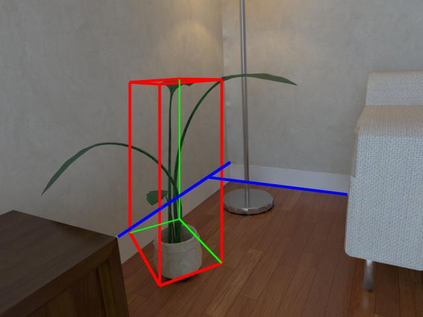

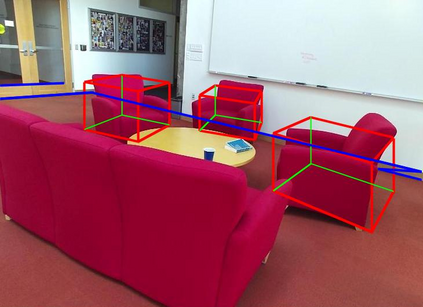

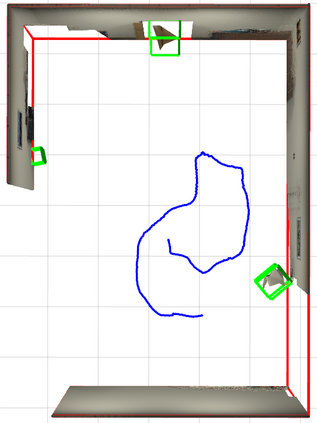

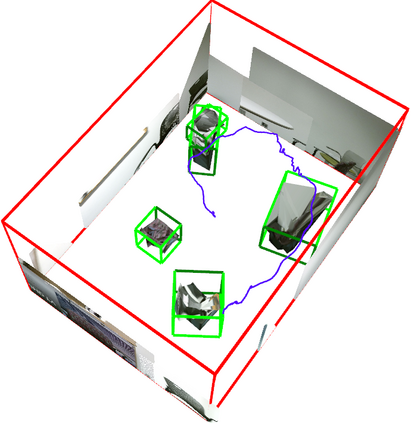

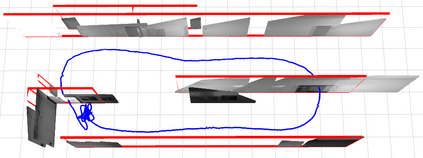

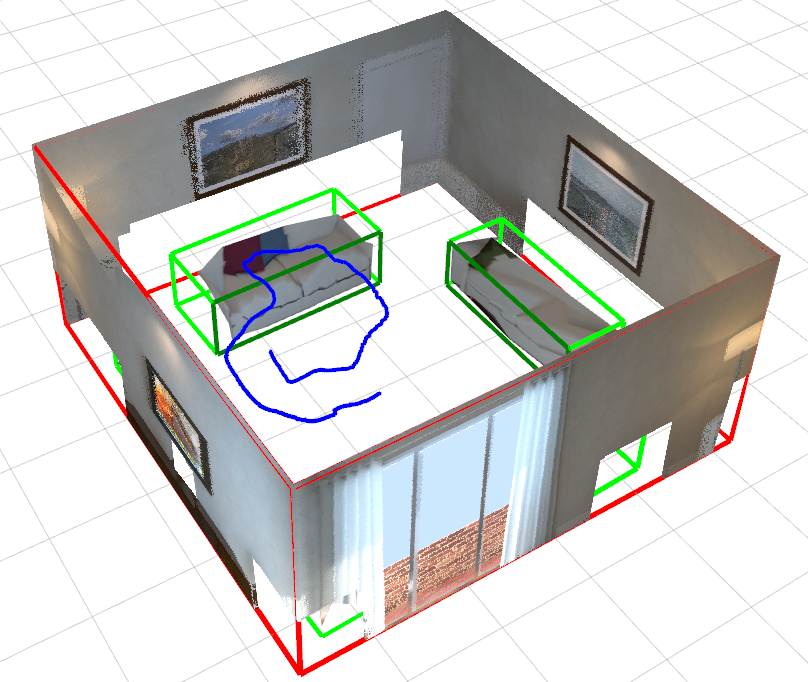

We present a monocular Simultaneous Localization and Mapping (SLAM) approach that uses high-level object and plane landmarks in addition to points. The resulting map is denser, more compact, and more meaningful than that of point-only SLAM. We first propose a high-order graphical model to jointly infer 3D objects and layout planes from a single image, taking occlusions and semantic constraints into account. The extracted cuboid objects and layout planes are then further optimized in a unified SLAM framework. Compared to points, objects and planes can provide more semantic constraints, such as Manhattan and object-supporting relationships. Experiments on various public and collected datasets, including ICL-NUIM and TUM mono, show that our algorithm can improve camera localization accuracy compared to state-of-the-art SLAM and can also generate dense maps in many structured environments.
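As a rough illustration of the kind of semantic constraints mentioned above, the following sketch shows how a Manhattan relationship between two planes and a supporting relationship between a plane and a cuboid might be written as residuals that an optimizer could drive toward zero. The plane parameterization `(normal, d)`, the function names, and the assumption that the cuboid's up axis is aligned with the supporting plane's normal are all hypothetical choices made for this example; they are not taken from the paper's formulation.

```python
import math


def norm(v):
    """Euclidean length of a 3-vector."""
    return math.sqrt(sum(c * c for c in v))


def normalize(v):
    """Return v scaled to unit length."""
    n = norm(v)
    return tuple(c / n for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def manhattan_residual(normal_a, normal_b):
    """Zero when the two plane normals are parallel or perpendicular.

    Illustrative assumption: the residual is the smaller of |cos| (distance
    from perpendicular) and 1 - |cos| (distance from parallel).
    """
    c = abs(dot(normalize(normal_a), normalize(normal_b)))
    return min(c, 1.0 - c)


def support_residual(plane, cuboid_center, cuboid_height):
    """Signed distance from the cuboid's bottom-face centre to the plane.

    plane is (normal, d) with normal . x + d = 0. The cuboid is assumed
    (for this sketch only) to stand upright along the plane normal.
    """
    normal, d = plane
    unit = normalize(normal)
    # Move half the cuboid height from its centre toward the plane.
    bottom = tuple(c - 0.5 * cuboid_height * u
                   for c, u in zip(cuboid_center, unit))
    return (dot(normal, bottom) + d) / norm(normal)


if __name__ == "__main__":
    floor = ((0.0, 0.0, 1.0), 0.0)   # the plane z = 0
    wall_normal = (1.0, 0.0, 0.0)    # a wall perpendicular to the floor
    print(manhattan_residual(floor[0], wall_normal))
    print(support_residual(floor, (1.0, 2.0, 0.5), 1.0))
```

In a full system, residuals of this kind would be added as extra factors alongside the usual point reprojection errors; how they are weighted and parameterized there is outside the scope of this sketch.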
