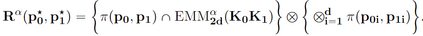

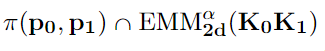

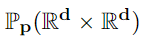

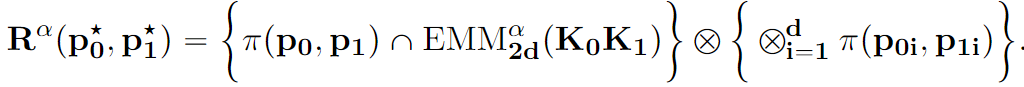

Flexible Bayesian models are typically constructed as limits of large parametric models with a multitude of parameters that are often uninterpretable. In this article, we offer a novel alternative: we construct an exponentially tilted empirical likelihood carefully designed to concentrate near a parametric family of distributions of choice with respect to a novel variant of the Wasserstein metric, and then combine it with a prior distribution on the model parameters to obtain a robustified posterior. The proposed approach applies to a wide variety of robust inference problems in which the goal is inference on the parameters of the centering distribution in the presence of outliers. Our proposed transport metric is computationally simple: it exploits the Sinkhorn regularization for discrete optimal transport problems and is inherently parallelizable. We demonstrate superior performance of our methodology when compared against state-of-the-art robust Bayesian inference methods. We also demonstrate the equivalence of our approach with a nonparametric Bayesian formulation under a suitable asymptotic framework, which testifies to its flexibility. The constrained entropy maximization at the heart of our likelihood formulation is useful beyond robust Bayesian inference; we illustrate this with a trustworthy machine learning application.
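
For readers unfamiliar with the Sinkhorn regularization mentioned above, the following is a minimal sketch of the standard entropy-regularized discrete optimal transport iteration. It is not the novel Wasserstein variant proposed in the article, whose definition is not given here. The function name `sinkhorn`, the parameters `eps` and `n_iter`, and the toy data are all assumptions made for illustration.

```python
import math


def sinkhorn(a, b, cost, eps=1.0, n_iter=500):
    """Entropy-regularized optimal transport between discrete distributions.

    a, b  : lists of nonnegative weights, each summing to 1
    cost  : cost[i][j] is the cost of moving mass from atom i to atom j
    eps   : regularization strength (illustrative default, an assumption)
    Returns the transport plan and its transport cost <plan, cost>.
    """
    n, m = len(a), len(b)
    # Gibbs kernel K_ij = exp(-C_ij / eps)
    K = [[math.exp(-cost[i][j] / eps) for j in range(m)] for i in range(n)]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(n_iter):
        # Rescale rows so the plan matches the marginal a
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        # Rescale columns so the plan matches the marginal b
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    plan = [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]
    total = sum(plan[i][j] * cost[i][j] for i in range(n) for j in range(m))
    return plan, total


if __name__ == "__main__":
    # Toy example: two uniform empirical measures on the real line
    x = [0.0, 1.0, 2.0]
    y = [0.5, 1.5, 2.5]
    a = [1.0 / 3] * 3
    b = [1.0 / 3] * 3
    cost = [[(xi - yj) ** 2 for yj in y] for xi in x]
    plan, total = sinkhorn(a, b, cost)
    print(total)
```

Each row update and each column update consists of independent sums, which is one reason iterations of this kind parallelize well. Smaller values of `eps` bring the plan closer to the unregularized optimum but typically require more iterations and, in practice, log-domain arithmetic to avoid underflow.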
