Title

Bayesian Inference with Generative Adversarial Network Priors

Keywords

Generative adversarial networks, Bayesian inference, deep learning, artificial intelligence, computational physics, image processing

Summary

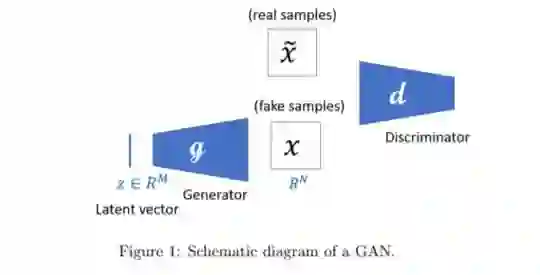

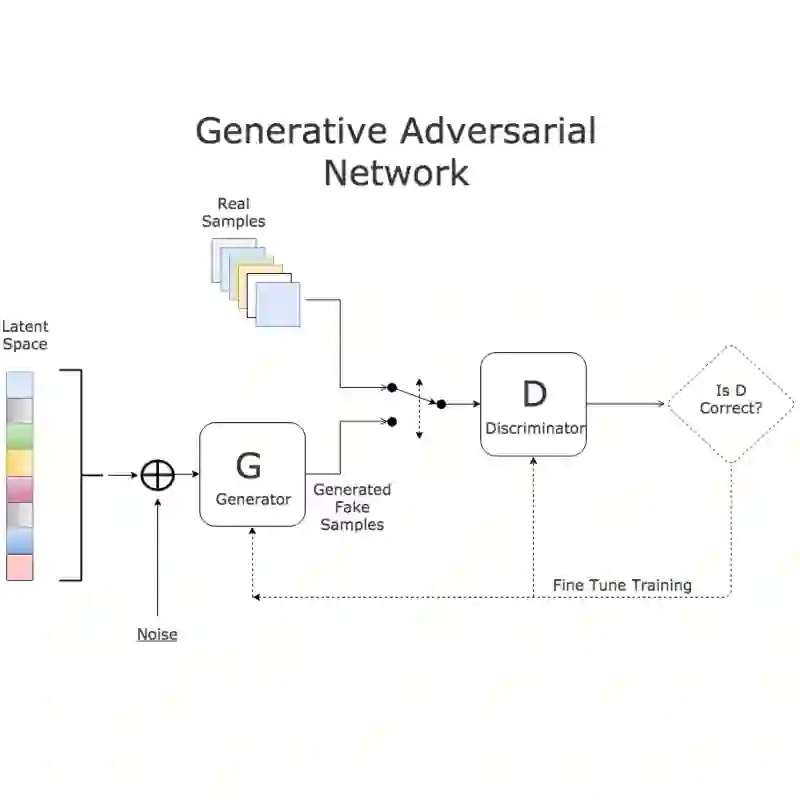

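When two fields are linked by a physical model, Bayesian inference is widely used to infer a field of interest from measurements of the related field and to quantify the uncertainty in that inference. Despite its many applications, Bayesian inference still faces challenges when the inferred field has a discrete representation of large dimension and/or a prior distribution that is difficult to express mathematically. In this manuscript we consider using generative adversarial networks (GANs) to address these challenges. A GAN is a deep neural network that can learn the distribution implied by multiple samples of a given field. Once trained on these samples, the generator component of the GAN maps the iid components of a low-dimensional latent vector to an approximation of the target field's distribution. In this work we demonstrate how this approximate distribution can be used as the prior in a Bayesian update, and how doing so addresses the challenges of characterizing complex prior distributions and of handling the large dimension of the inferred field. We demonstrate the effectiveness of the approach on a heat conduction problem: inferring, and quantifying the uncertainty in, the initial temperature field from a noisy measurement of the temperature at a later time.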
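The core idea can be illustrated with a minimal sketch, assuming a Gaussian measurement-noise model and an iid standard-normal latent prior. Because the field is written as u = g(z), the posterior can be explored in the low-dimensional latent space, p(z | y) ∝ p(y | F(g(z))) p(z), and posterior samples of z are pushed through g to obtain samples of the field. Everything concrete below is an illustrative assumption, not the authors' setup: `generator` is a hypothetical fixed nonlinear map standing in for a trained GAN generator, and the forward model F is an explicit finite-difference solver for the 1D heat equation. The grid size, latent dimension, noise level and diffusion time are also assumed values, as is the choice of BFGS for the MAP estimate plus random-walk Metropolis for uncertainty quantification.

```python
import numpy as np
from scipy.optimize import minimize

rng = np.random.default_rng(0)

N_X, N_Z = 64, 4   # field resolution and latent dimension (assumed values)
SIGMA = 0.05       # measurement-noise standard deviation (assumed)
x = np.linspace(0.0, 1.0, N_X)

# HYPOTHETICAL stand-in for a trained GAN generator g: R^N_Z -> R^N_X.
# A real g would be a deep network trained on samples of initial temperature fields.
BASIS = np.stack([np.sin((k + 1) * np.pi * x) for k in range(N_Z)], axis=1)

def generator(z):
    return np.tanh(BASIS @ z)

def generator_jacobian(z):
    # d tanh(Bz)/dz = diag(1 - tanh(Bz)^2) B
    return (1.0 - np.tanh(BASIS @ z) ** 2)[:, None] * BASIS

def heat_operator(n, kappa=1.0, t_final=0.01, n_steps=200):
    """Linear map u(0) -> u(t_final) for u_t = kappa * u_xx (explicit Euler).
    Boundary rows are identity, so boundary values stay fixed (zero here)."""
    dx, dt = 1.0 / (n - 1), t_final / n_steps
    r = kappa * dt / dx**2  # about 0.2 here, below the 0.5 stability limit
    step = np.eye(n)
    for i in range(1, n - 1):
        step[i, i - 1 : i + 2] += r * np.array([1.0, -2.0, 1.0])
    return np.linalg.matrix_power(step, n_steps)

A = heat_operator(N_X)

# Synthetic noisy later-time measurement generated from a known latent vector.
z_true = rng.standard_normal(N_Z)
u_true = generator(z_true)
y = A @ u_true + SIGMA * rng.standard_normal(N_X)

def neg_log_post(z):
    # -log p(z | y) up to a constant: Gaussian likelihood + iid N(0, 1) prior on z
    resid = y - A @ generator(z)
    return 0.5 * resid @ resid / SIGMA**2 + 0.5 * z @ z

def grad_neg_log_post(z):
    resid = y - A @ generator(z)
    return -(A @ generator_jacobian(z)).T @ resid / SIGMA**2 + z

# MAP estimate: optimise over N_Z latent variables instead of N_X field values.
res = minimize(neg_log_post, np.zeros(N_Z), jac=grad_neg_log_post, method="BFGS")
z_map = res.x

# Random-walk Metropolis in latent space, with the proposal shaped by the
# Gauss-Newton Hessian at the MAP point and the usual 2.38 / sqrt(d) scaling.
AJ = A @ generator_jacobian(z_map)
hess = AJ.T @ AJ / SIGMA**2 + np.eye(N_Z)
chol = np.linalg.cholesky(np.linalg.inv(hess))
scale = 2.38 / np.sqrt(N_Z)

n_steps, burn_in = 5000, 1000
z, logp = z_map.copy(), -neg_log_post(z_map)
samples, accepted = [], 0
for _ in range(n_steps):
    z_prop = z + scale * chol @ rng.standard_normal(N_Z)
    logp_prop = -neg_log_post(z_prop)
    if np.log(rng.uniform()) < logp_prop - logp:  # symmetric proposal
        z, logp = z_prop, logp_prop
        accepted += 1
    samples.append(generator(z))  # push each latent sample through g

fields = np.array(samples[burn_in:])
post_mean, post_std = fields.mean(axis=0), fields.std(axis=0)
acc_rate = accepted / n_steps
print(f"acceptance {acc_rate:.2f}, max pointwise std {post_std.max():.3f}")
```

The design point this sketch tries to show is that both the optimisation and the sampling act on a 4-dimensional latent vector rather than on the 64 grid values. The GAN prior also confines every candidate field to the range of the generator, which is what makes a hard-to-write-down prior usable in practice.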

Authors

Dhruv Patel, Assad A Oberai


Related content

Zhuanzhi Member Services

12 November 2019