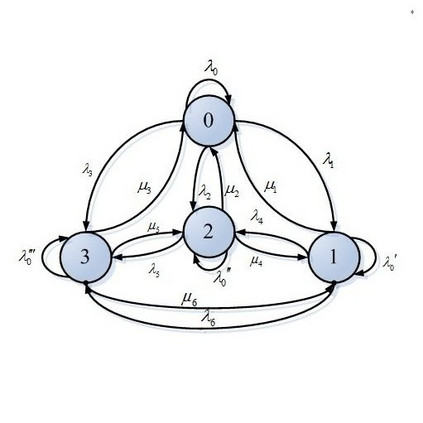

We prove that the sum of $t$ boolean-valued random variables sampled by a random walk on a regular expander converges in total variation distance to a discrete normal distribution at a rate of $O(\lambda/t^{1/2-o(1)})$, where $\lambda$ is the second largest eigenvalue of the random walk matrix in absolute value. To the best of our knowledge, among known Berry-Esseen bounds for Markov chains, our result is the first to show convergence in total variation distance, and is also the first to incorporate a linear dependence on expansion $\lambda$. In contrast, prior Markov chain Berry-Esseen bounds showed a convergence rate of $O(1/\sqrt{t})$ in weaker metrics such as Kolmogorov distance. Our result also improves upon prior work in the pseudorandomness literature, which showed that the total variation distance is $O(\lambda)$ when the approximating distribution is taken to be a binomial distribution. We achieve the faster $O(\lambda/t^{1/2-o(1)})$ convergence rate by generalizing the binomial distribution to discrete normals of arbitrary variance. We specifically construct discrete normals using a random walk on an appropriate 2-state Markov chain. Our bound can therefore be viewed as a regularity lemma that reduces the study of arbitrary expanders to a small class of particularly simple expanders.
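
To make the objects in the abstract concrete, the following minimal sketch computes the exact distribution of the sum of $t$ boolean samples from a walk on a 2-state chain, then measures its total variation distance to the binomial distribution. It is an illustration only, not the paper's construction: the symmetric chain (stay with probability $(1+\lambda)/2$, so the second eigenvalue is $\lambda$), the uniform starting distribution, and all function names are assumptions chosen for simplicity.

```python
from math import comb


def two_state_sum_distribution(t, lam):
    """Exact pmf of the number of visits to state 1 over t samples.

    Assumed chain (hypothetical, for illustration): transition matrix
    [[stay, move], [move, stay]] with stay = (1 + lam) / 2, whose
    eigenvalues are 1 and lam. The first sample is drawn from the
    uniform stationary distribution.
    """
    stay = (1 + lam) / 2
    move = 1 - stay
    # dist[s][k] = P(current state is s and the running sum equals k)
    dist = [[0.0] * (t + 1) for _ in range(2)]
    dist[0][0] = 0.5  # first sample is 0
    dist[1][1] = 0.5  # first sample is 1
    for _ in range(t - 1):
        new = [[0.0] * (t + 1) for _ in range(2)]
        for k in range(t + 1):
            # Landing in state 0 leaves the sum unchanged.
            new[0][k] += dist[0][k] * stay + dist[1][k] * move
            # Landing in state 1 increases the sum by one.
            if k + 1 <= t:
                new[1][k + 1] += dist[1][k] * stay + dist[0][k] * move
        dist = new
    return [dist[0][k] + dist[1][k] for k in range(t + 1)]


def binomial_pmf(t):
    """pmf of Binomial(t, 1/2), the sum of t independent fair bits."""
    return [comb(t, k) * 0.5 ** t for k in range(t + 1)]


def total_variation(p, q):
    """Total variation distance between two pmfs on the same support."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


t = 20
for lam in (0.0, 0.1, 0.3):
    tv = total_variation(two_state_sum_distribution(t, lam), binomial_pmf(t))
    print(f"lambda={lam}: TV distance to Binomial({t}, 1/2) = {tv:.4f}")
```

With $\lambda = 0$ the samples are independent fair bits, so the distance is zero up to floating-point error. For $\lambda > 0$ the variance of the sum no longer matches the binomial's, which is the mismatch that the discrete normals of arbitrary variance described above are meant to absorb.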
