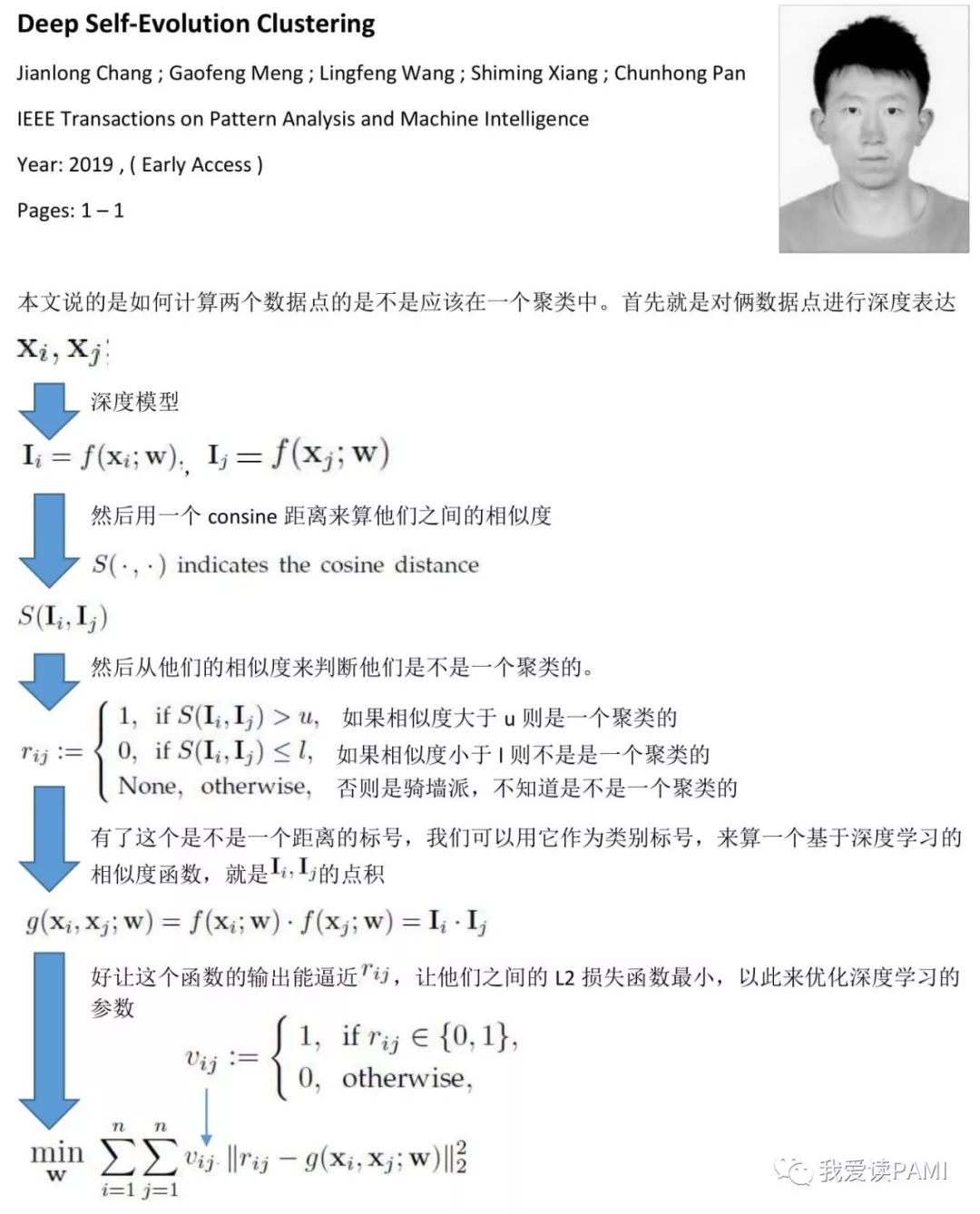

深度自进化聚类:Deep Self-Evolution Clustering

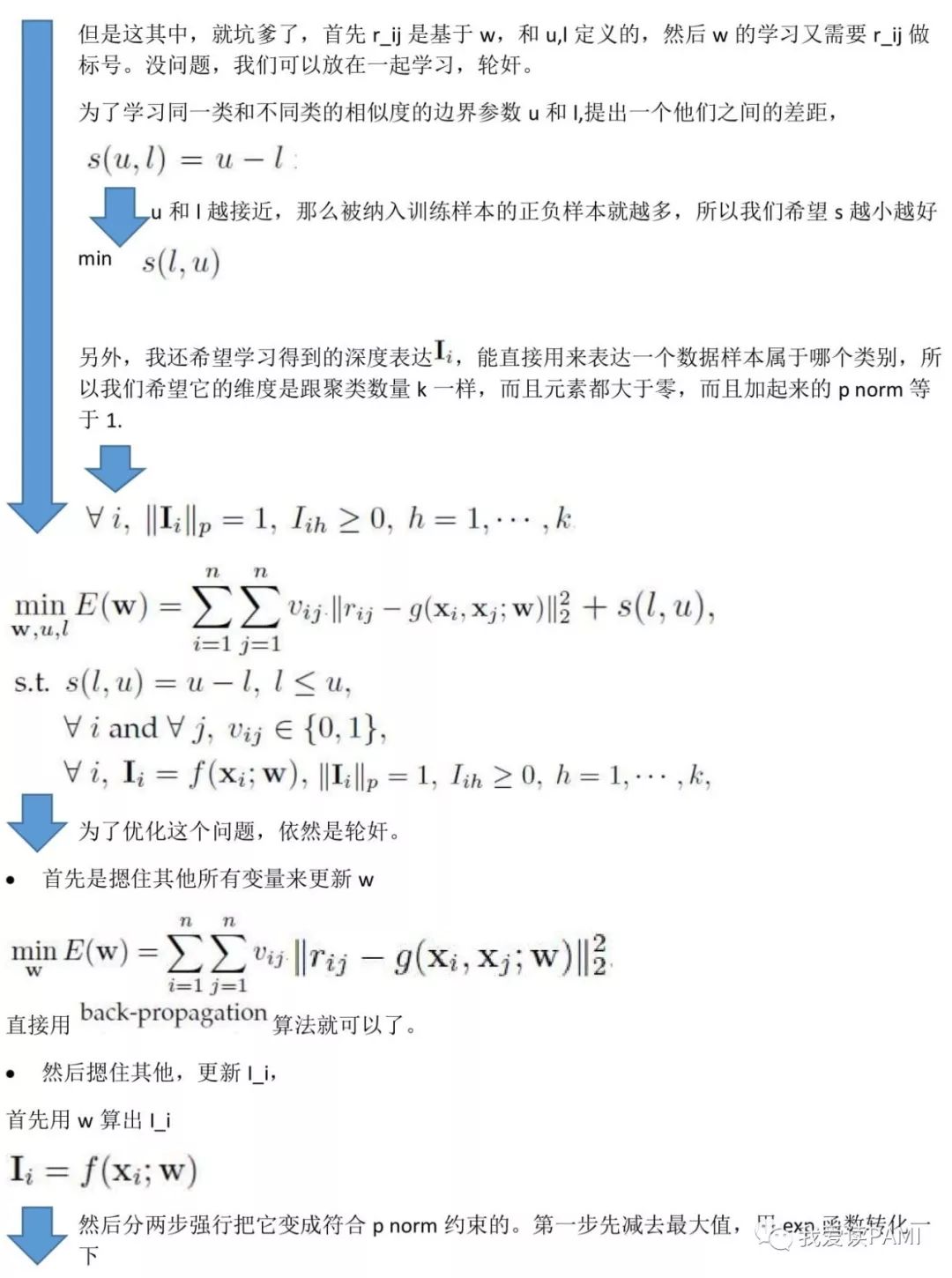

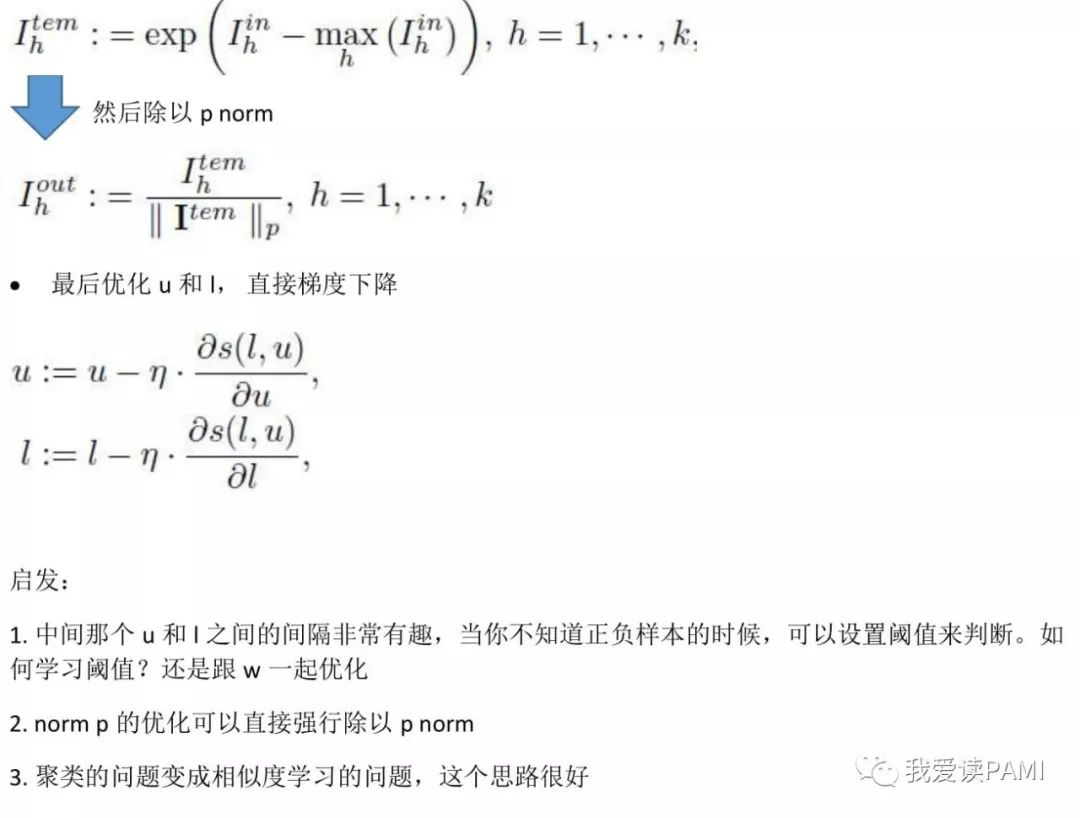

本文说的是聚类,但是转化成了相似度对比的问题。首先就是用个深度模型来判断是不是相似,而模型学习的标号又是通过相似度加俩阈值得到的,最终通过一起来学习模型参数和阈值而进行聚类。作者是自动化所的大神Jianlong Chang。

Deep Self-Evolution Clustering

Jianlong Chang ; Gaofeng Meng ; Lingfeng Wang ; Shiming Xiang ; Chunhong Pan

IEEE Transactions on Pattern Analysis and Machine Intelligence

Year: 2019 , ( Early Access )

Pages: 1 – 1

Clustering is a crucial but challenging task in pattern analysis and machine learning. Existing methods often ignore

the combination between representation learning and clustering. To tackle this problem, we reconsider the clustering task from its

definition to develop Deep Self-Evolution Clustering (DSEC) to jointly learn representations and cluster data. For this purpose, the

clustering task is recast as a binary pairwise-classification problem to estimate whether pairwise patterns are similar. Specifically,

similarities between pairwise patterns are defined by the dot product between indicator features which are generated by a deep

neural network (DNN). To learn informative representations for clustering, clustering constraints are imposed on the indicator

features to represent specific concepts with specific representations. Since the ground-truth similarities are unavailable in

clustering, an alternating iterative algorithm called Self-Evolution Clustering Training (SECT) is presented to select similar and

dissimilar pairwise patterns and to train the DNN alternately. Consequently, the indicator features tend to be one-hot vectors and

the patterns can be clustered by locating the largest response of the learned indicator features. Extensive experiments strongly

evidence that DSEC outperforms current models on twelve popular image, text and audio datasets consistently