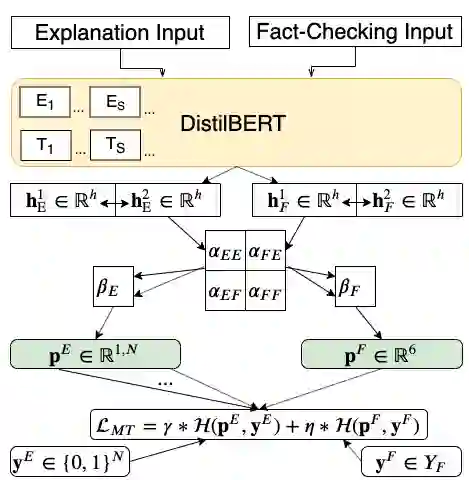

Most existing work on automated fact checking is concerned with predicting the veracity of claims based on metadata, social network spread, language used in claims, and, more recently, evidence supporting or denying claims. A crucial piece of the puzzle that is still missing is to understand how to automate the most elaborate part of the process: generating justifications for verdicts on claims. This paper provides the first study of how these explanations can be generated automatically based on available claim context, and how this task can be modelled jointly with veracity prediction. Our results indicate that optimising both objectives at the same time, rather than training separate models for each, improves the performance of a fact checking system. The results of a manual evaluation further suggest that the multi-task model also improves the informativeness, coverage and overall quality of the generated explanations.
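As a minimal sketch of what optimising both objectives at the same time can look like, the snippet below combines a veracity-classification loss with an explanation loss into one training objective. It assumes, for illustration only and not as the paper's confirmed method, that explanations are produced by selecting sentences from the claim context (so each sentence gets a binary "include" label) and that the two task losses are summed with a hypothetical weight `lam`. The function names `softmax_cross_entropy`, `binary_cross_entropy` and `joint_loss` are likewise hypothetical.

```python
import math
from typing import Sequence


def softmax_cross_entropy(logits: Sequence[float], target: int) -> float:
    """Cross-entropy of a softmax over veracity-label logits against the gold label index."""
    m = max(logits)  # subtract the max logit for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def binary_cross_entropy(logits: Sequence[float], targets: Sequence[int]) -> float:
    """Mean binary cross-entropy over per-sentence 'include in explanation' logits."""
    total = 0.0
    for z, y in zip(logits, targets):
        # Numerically stable BCE with logits: max(z, 0) - z*y + log(1 + exp(-|z|))
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
    return total / len(logits)


def joint_loss(
    veracity_logits: Sequence[float],
    veracity_label: int,
    sentence_logits: Sequence[float],
    sentence_labels: Sequence[int],
    lam: float = 1.0,  # hypothetical weight on the explanation loss
) -> float:
    """Multi-task objective: both losses are minimised together by one shared model."""
    l_ver = softmax_cross_entropy(veracity_logits, veracity_label)
    l_expl = binary_cross_entropy(sentence_logits, sentence_labels)
    return l_ver + lam * l_expl


# Usage: three veracity labels and two context sentences, all logits zero.
# The veracity term is log(3) and the explanation term is log(2), so the sum is log(6).
print(joint_loss([0.0, 0.0, 0.0], 1, [0.0, 0.0], [1, 0]))
```

Setting `lam=0` reduces the objective to veracity prediction alone. Training the tasks separately would instead minimise each term with its own model, so no parameters are shared between the two tasks.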
