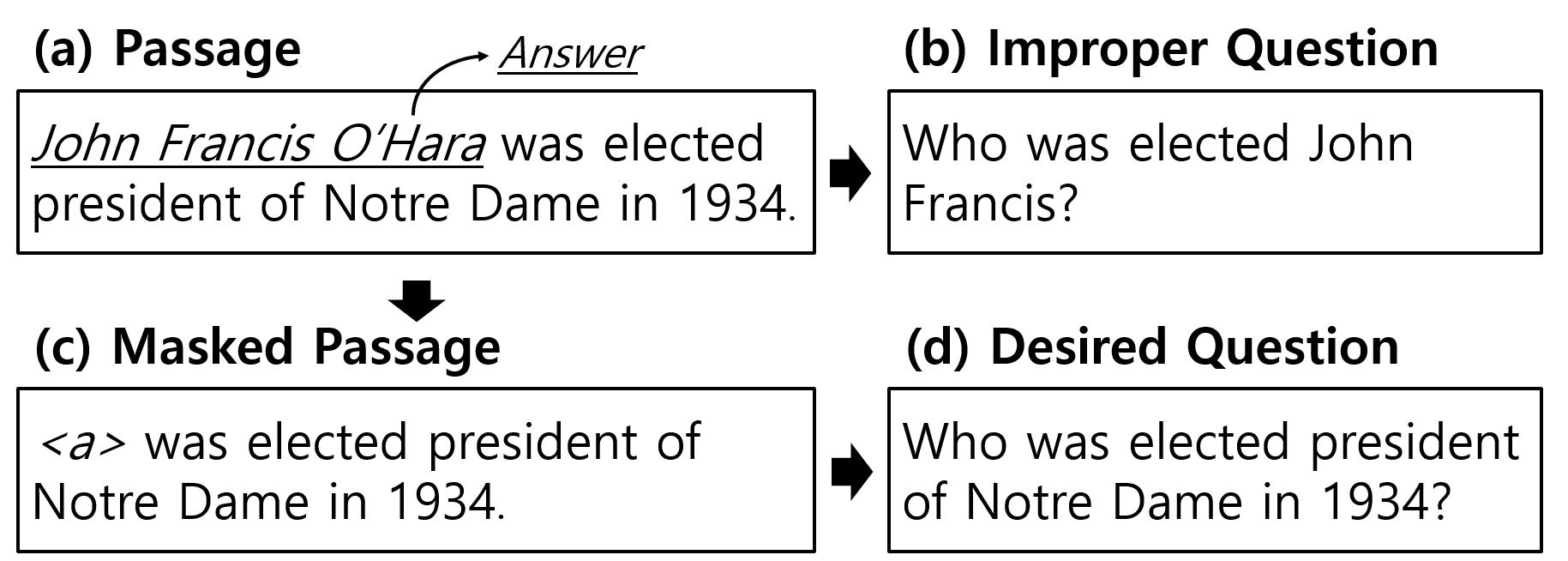

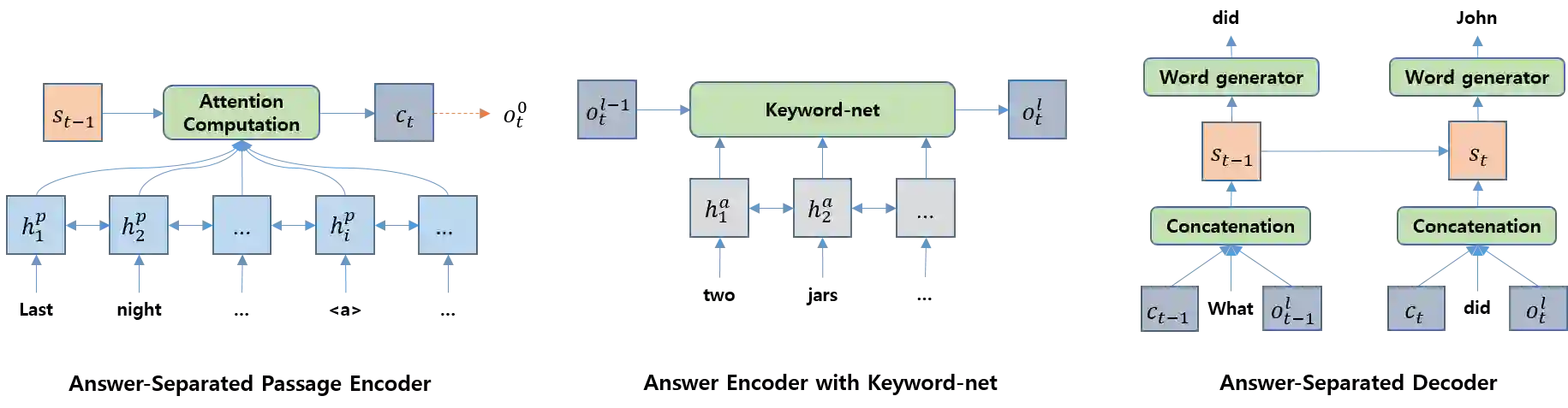

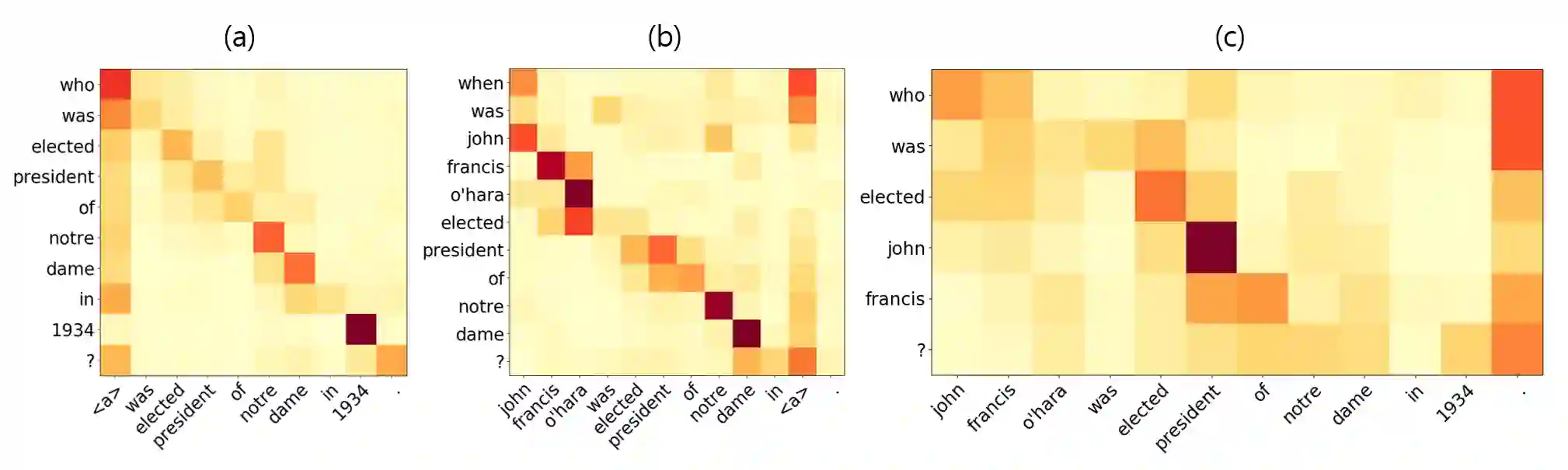

Neural question generation (NQG) is the task of generating a question from a given passage using deep neural networks. Previous NQG models suffer from the problem that a significant proportion of the generated questions contain words from the question target (the answer), which results in unintended questions. In this paper, we propose answer-separated seq2seq, which makes better use of the information from both the passage and the target answer. By replacing the target answer in the original passage with a special token, our model learns to identify which interrogative word should be used. We also propose a new module, termed keyword-net, which helps the model better capture the key information in the target answer and generate an appropriate question. Experimental results demonstrate that our answer-separation method significantly reduces the number of improper questions that include the answer. Consequently, our model significantly outperforms previous state-of-the-art NQG models.
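The input preparation behind answer separation can be illustrated with a minimal Python sketch. It assumes the passage is already tokenized, that the answer is given as a token span `[start, end)`, and that a hypothetical placeholder string `<a>` stands in for the special token (the actual token is not specified here). The helper `contains_answer_word` is likewise a hypothetical, crude illustration of the failure mode described above (a generated question that leaks answer words), not the authors' evaluation metric. The encoder, decoder, and keyword-net themselves are not shown.

```python
from typing import List, Tuple

# Hypothetical placeholder token; the paper's actual special token is not given here.
ANSWER_TOKEN = "<a>"


def separate_answer(
    passage_tokens: List[str],
    answer_start: int,
    answer_end: int,
    answer_token: str = ANSWER_TOKEN,
) -> Tuple[List[str], List[str]]:
    """Replace the answer span [answer_start, answer_end) with one placeholder token.

    Returns (masked_passage, answer_tokens): the passage with the answer hidden,
    and the removed answer tokens, which can be fed to the model separately.
    """
    if not 0 <= answer_start < answer_end <= len(passage_tokens):
        raise ValueError("answer span is empty or out of range")
    answer_tokens = passage_tokens[answer_start:answer_end]
    masked_passage = (
        passage_tokens[:answer_start] + [answer_token] + passage_tokens[answer_end:]
    )
    return masked_passage, answer_tokens


def contains_answer_word(question_tokens: List[str], answer_tokens: List[str]) -> bool:
    """Crude check: does any answer token appear verbatim in the question?"""
    question_vocab = set(question_tokens)
    return any(tok in question_vocab for tok in answer_tokens)


if __name__ == "__main__":
    passage = ["steve", "jobs", "founded", "apple", "in", "1976", "."]
    masked, answer = separate_answer(passage, 5, 6)
    print(masked)  # ['steve', 'jobs', 'founded', 'apple', 'in', '<a>', '.']
    print(answer)  # ['1976']
```

Hiding the answer in the passage keeps its position visible (the placeholder sits where "1976" was, a cue that a "when" question is wanted), while returning the answer tokens separately lets the model still see the answer's content. A question such as "did jobs found apple in 1976 ?" would be flagged by `contains_answer_word`, whereas "when did jobs found apple ?" would not.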

翻译:神经问题生成( NQG) 是用深神经网络生成一个特定通道产生的问题的任务。 以前的 NQG 模型遇到一个问题, 大部分生成的问题包括问题目标中的单词, 从而产生出意外的问题 。 在本文中, 我们提议以回答分开的后继2seq, 更好地使用通道和目标答案中的信息 。 通过将原始段落中的目标回答替换为特殊符号, 我们的模型学会了确定应该使用哪个词来进行质询 。 我们还提议了一个名为关键字网的新模块, 帮助模型更好地捕捉目标答案中的关键信息, 并产生一个合适的问题 。 实验结果显示, 我们的答案分离方法大大减少了包含答案的不适当问题的数量 。 因此, 我们的模型大大超越了先前的“ QG ” 模式 。