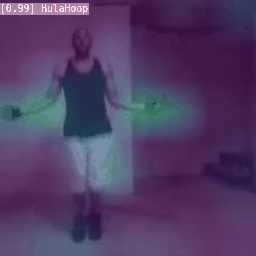

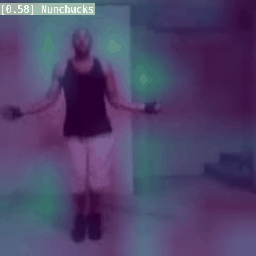

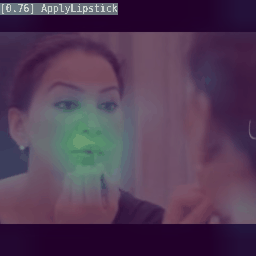

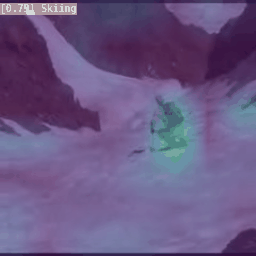

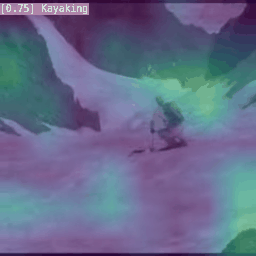

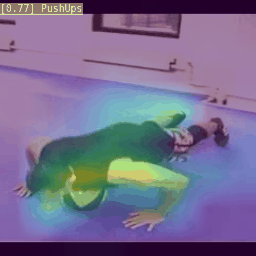

We present MoDist, a novel method that explicitly distills motion information into self-supervised video representations. Previous video representation learning methods mostly learn motion cues implicitly from RGB inputs; in contrast, we show that representations learned with MoDist focus more on foreground motion regions and thus generalize better to downstream tasks. To achieve this, MoDist enriches the standard contrastive learning objective for RGB video clips with a cross-modal learning objective between a Motion pathway and a Visual pathway. We evaluate MoDist on several datasets for both action recognition (UCF101/HMDB51/SSv2) and action detection (AVA), and demonstrate state-of-the-art self-supervised performance on all of them. Furthermore, we show that MoDist representations can be as effective as (and in some cases even better than) representations learned with full supervision. Given its simplicity, we hope MoDist can serve as a strong baseline for future research in self-supervised video representation learning.
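The combination of an RGB contrastive objective with a cross-modal objective can be illustrated with a minimal sketch. The abstract does not give the exact loss, so everything here is an assumption: an InfoNCE-style loss stands in for the contrastive objective, the embeddings are plain lists of floats, and the names `info_nce`, `combined_loss`, `temperature`, and `weight` are hypothetical rather than taken from MoDist.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def info_nce(queries, keys, temperature=0.1):
    """Mean InfoNCE loss over a batch.

    queries[i] and keys[i] form the positive pair; every other key in the
    batch acts as a negative. This is an assumed stand-in for the
    contrastive objective, not the paper's exact formulation.
    """
    q = [l2_normalize(v) for v in queries]
    k = [l2_normalize(v) for v in keys]
    total = 0.0
    for i, qi in enumerate(q):
        # Cosine similarity between query i and every key, scaled by temperature.
        logits = [sum(a * b for a, b in zip(qi, kj)) / temperature for kj in k]
        # Numerically stable log-sum-exp for the softmax denominator.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_denom - logits[i]
    return total / len(q)


def combined_loss(rgb_a, rgb_b, motion, weight=1.0):
    """Visual-only term plus a weighted cross-modal (RGB vs. motion) term.

    rgb_a, rgb_b: embeddings of two RGB clips sampled from the same videos.
    motion: embeddings of the matching motion inputs (hypothetical Motion pathway output).
    weight: hypothetical balancing factor between the two terms.
    """
    visual = info_nce(rgb_a, rgb_b)
    cross_modal = info_nce(rgb_a, motion)
    return visual + weight * cross_modal
```

In this sketch, the cross-modal term pulls each RGB embedding toward the motion embedding of the same video and pushes it away from the motion embeddings of other videos in the batch, which is one plausible way to read "distilling" motion into the visual representation.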
