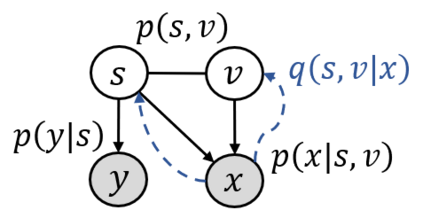

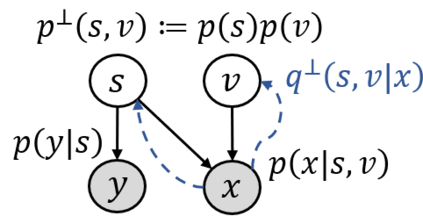

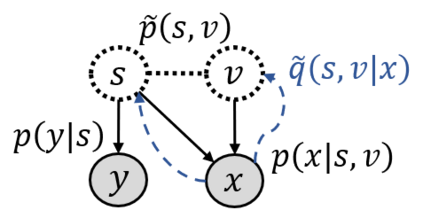

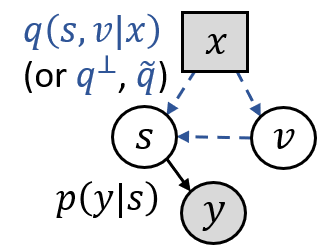

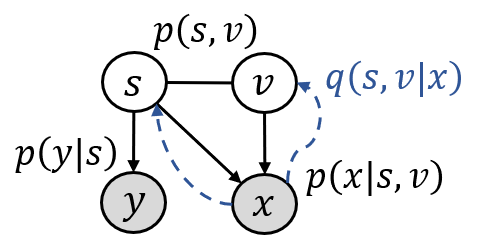

Conventional supervised learning methods, especially deep ones, are sensitive to out-of-distribution (OOD) examples, largely because the learned representation mixes the semantic factor with the variation factor owing to their domain-specific correlation, even though only the semantic factor causes the output. To address this problem, we propose a Causal Semantic Generative model (CSG), based on causal reasoning, that models the two factors separately, and we develop methods for OOD prediction from a single training domain, a setting that is both common and challenging. The methods are based on the causal invariance principle, with a novel design for both efficient learning and easy prediction. Theoretically, we prove that under certain conditions CSG can identify the semantic factor by fitting training data, and that this semantic identification guarantees a bounded OOD generalization error and the success of adaptation. Empirical studies show improved OOD performance over prevailing baselines.
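
One way to read the separation of the two factors is as a factorization of the joint distribution. The following is only a sketch under assumed notation that the abstract itself does not fix: $s$ denotes the semantic factor, $v$ the variation factor, $x$ the input, and $y$ the output.

```latex
% Assumed notation (not given in the abstract):
%   s = semantic factor, v = variation factor, x = input, y = output
\begin{equation*}
  p(s, v, x, y) \;=\; p(s, v)\, p(x \mid s, v)\, p(y \mid s)
\end{equation*}
```

Under this reading, the prior $p(s, v)$ would carry the domain-specific correlation between the two factors, while the output depends on $s$ alone through $p(y \mid s)$, matching the statement that only the semantic factor causes the output. The causal invariance principle would then correspond to treating $p(x \mid s, v)$ and $p(y \mid s)$ as shared across domains and letting only the prior change.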
