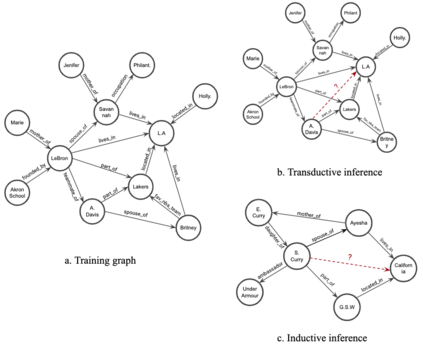

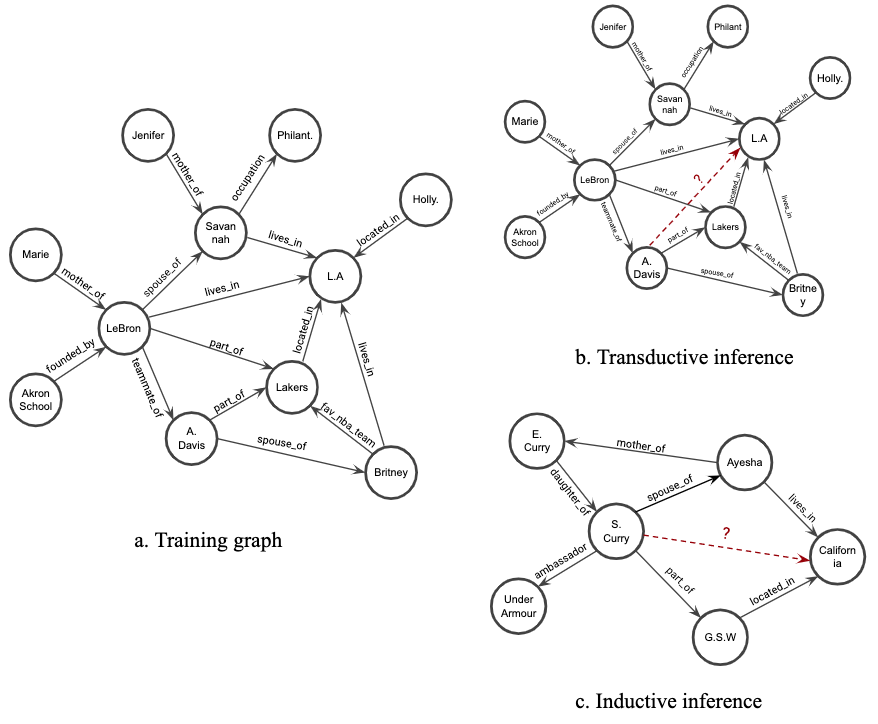

The dominant paradigm for relation prediction in knowledge graphs involves learning and operating on latent representations (i.e., embeddings) of entities and relations. However, these embedding-based methods do not explicitly capture the compositional logical rules underlying the knowledge graph, and they are limited to the transductive setting, where the full set of entities must be known during training. Here, we propose a graph neural network-based relation prediction framework, GraIL, that reasons over local subgraph structures and has a strong inductive bias to learn entity-independent relational semantics. Unlike embedding-based models, GraIL is naturally inductive and can generalize to unseen entities and graphs after training. We provide a theoretical proof and strong empirical evidence that GraIL can represent a useful subset of first-order logic and show that GraIL outperforms existing rule-induction baselines in the inductive setting. We also demonstrate significant gains obtained by ensembling GraIL with various knowledge graph embedding methods in the transductive setting, highlighting the complementary inductive bias of our method.
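To make "reasons over local subgraph structures" concrete, here is a minimal Python sketch of one plausible way to extract the local subgraph around a candidate triple. The abstract does not say how the subgraph is built, so the construction here (intersecting the k-hop neighborhoods of the head and tail entities) is an assumption, and the function names `k_hop_neighbors` and `enclosing_subgraph`, the toy triples, and the parameter `k` are all hypothetical. The graph neural network that would score the subgraph is not shown.

```python
from collections import defaultdict, deque


def k_hop_neighbors(adj, start, k):
    """Return all nodes within k undirected hops of `start`, including `start`."""
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        node, dist = frontier.popleft()
        if dist == k:
            continue  # do not expand beyond k hops
        for nbr in adj[node]:
            if nbr not in seen:
                seen.add(nbr)
                frontier.append((nbr, dist + 1))
    return seen


def enclosing_subgraph(triples, head, tail, k=2):
    """Assumed construction: keep triples whose endpoints both lie in the
    intersection of the k-hop neighborhoods of `head` and `tail`
    (the two target entities themselves are always kept)."""
    adj = defaultdict(set)
    for h, _, t in triples:
        adj[h].add(t)  # edge direction is ignored when collecting neighbors
        adj[t].add(h)
    nodes = k_hop_neighbors(adj, head, k) & k_hop_neighbors(adj, tail, k)
    nodes |= {head, tail}
    return [(h, r, t) for h, r, t in triples if h in nodes and t in nodes]


# Hypothetical toy knowledge graph as (head, relation, tail) triples.
TRIPLES = [
    ("ann", "spouse_of", "bob"),
    ("bob", "lives_in", "paris"),
    ("paris", "located_in", "france"),
    ("carl", "works_in", "berlin"),
]

# Local evidence for the candidate triple (ann, lives_in, paris).
subgraph = enclosing_subgraph(TRIPLES, "ann", "paris", k=1)

# The same structure with entirely different entity names.
RENAMED = [
    ("x1", "spouse_of", "x2"),
    ("x2", "lives_in", "x3"),
    ("x3", "located_in", "x4"),
]
renamed_subgraph = enclosing_subgraph(RENAMED, "x1", "x3", k=1)
```

In this toy case the extracted subgraph holds the path `spouse_of` then `lives_in` between the two target entities, and the renamed graph yields the same relation sequence. That is the intuition behind entity-independent semantics: a model that only sees the relational structure, not entity identities, can apply what it learned to entities it never encountered in training.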