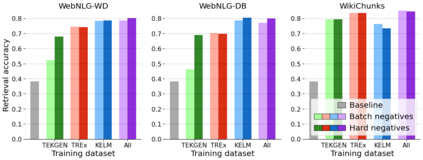

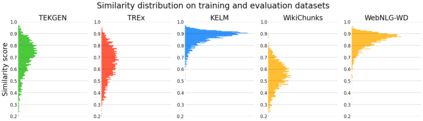

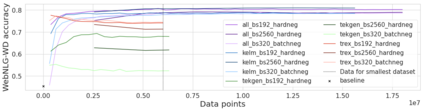

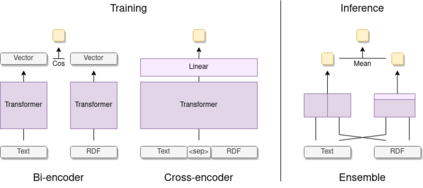

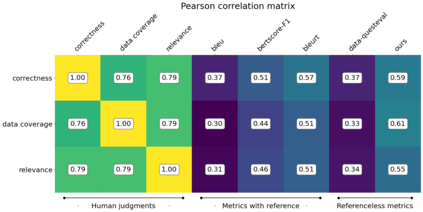

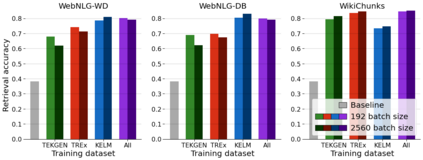

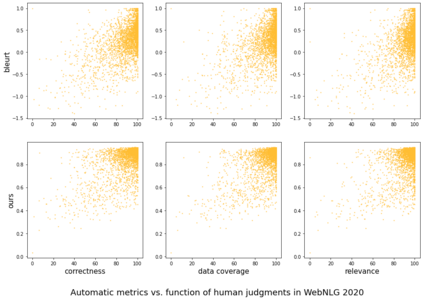

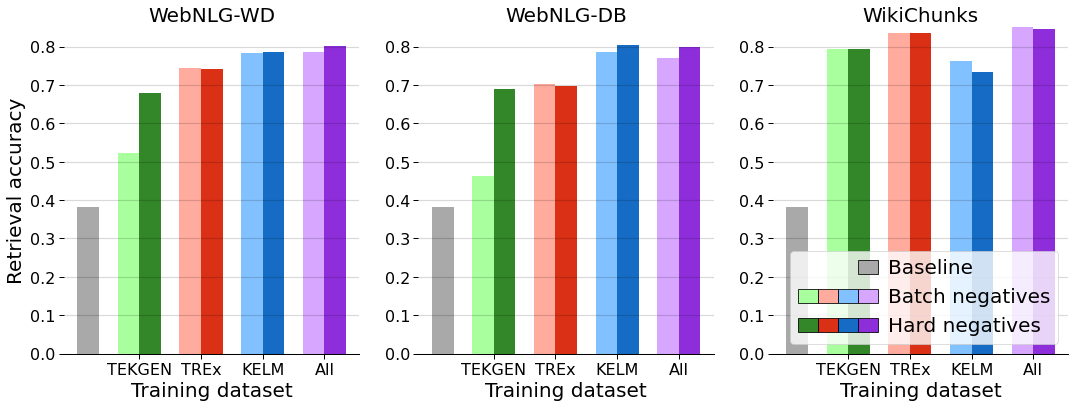

A key feature of neural models is that they can produce semantic vector representations of objects (texts, images, speech, etc.) ensuring that similar objects are close to each other in the vector space. While much work has focused on learning representations for other modalities, there are no aligned cross-modal representations for text and knowledge base (KB) elements. One challenge for learning such representations is the lack of parallel data, which we use contrastive training on heuristics-based datasets and data augmentation to overcome, training embedding models on (KB graph, text) pairs. On WebNLG, a cleaner manually crafted dataset, we show that they learn aligned representations suitable for retrieval. We then fine-tune on annotated data to create EREDAT (Ensembled Representations for Evaluation of DAta-to-Text), a similarity metric between English text and KB graphs. EREDAT outperforms or matches state-of-the-art metrics in terms of correlation with human judgments on WebNLG even though, unlike them, it does not require a reference text to compare against.

翻译:神经模型的一个关键特征是,它们能够产生物体(文字、图像、语音等)的语体矢量表达方式,确保矢量空间中类似的物体彼此接近。虽然许多工作侧重于其他模式的学习表达方式,但对于文本和知识基础要素没有统一的跨模式表达方式。了解这种表达方式的一个挑战是缺乏平行数据,我们使用基于超自然的数据集和数据增强的对比性培训来克服这些数据,在(KB图、文字)对配对上的培训嵌入模型。在WebNLG上,一个更清洁的手工手工制作数据集,我们显示它们学习了适合检索的一致表达方式。我们随后对附加说明的数据进行了微调,以创建EREDAT(Data-Text评价集合式表达方式),一种类似英文文本和KB图的类似度量度。ERDAT在与WebNLG上人类判断的相关性方面,超越或匹配了最新技术度指标。尽管与它们不同,我们并不要求参考文本来对比。</s>