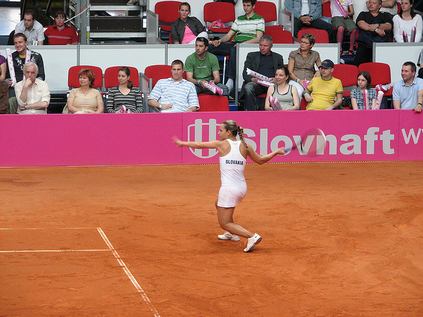

Visual dialogue is a challenging task that requires extracting implicit information from both visual (image) and textual (dialogue history) contexts. Classical approaches focus on integrating the current question, vision knowledge, and text knowledge, while overlooking the heterogeneous semantic gaps between the cross-modal information. Meanwhile, concatenation has become the de facto standard for cross-modal information fusion, even though it has only a limited ability to retrieve information. In this paper, we propose a novel Knowledge-Bridge Graph Network (KBGN) model, which uses a graph to bridge the cross-modal semantic relations between vision and text knowledge at a fine granularity and retrieves the required knowledge via an adaptive information selection mode. Moreover, the reasoning clues for visual dialogue can be clearly drawn from intra-modal entities and inter-modal bridges. Experimental results on the VisDial v1.0 and VisDial-Q datasets demonstrate that our model outperforms existing models, achieving state-of-the-art results.
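The abstract contrasts concatenation, the usual fusion operator, with graph bridges between modalities plus adaptive information selection. The following is a minimal, hypothetical sketch of that contrast in plain Python; it is not the KBGN implementation. It assumes that a cross-modal bridge can be approximated by softmax-weighted edges from each vision node to every text node (dot-product similarity), and that adaptive selection can be approximated by a scalar sigmoid gate. The function names (`bridge`, `gated_fusion`, `concat_fusion`), the toy features, and the gate parameters are all illustrative assumptions, not details from the paper.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max score for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def bridge(source_nodes: List[Vector], target_nodes: List[Vector]) -> List[Vector]:
    """Hypothetical cross-modal bridge: for each source node (e.g. an image
    region), weight edges to every target node (e.g. a dialogue-history entity)
    by a softmax over dot-product similarities, then aggregate the targets."""
    dim = len(target_nodes[0])
    bridged = []
    for s in source_nodes:
        weights = softmax([dot(s, t) for t in target_nodes])
        bridged.append(
            [sum(w * t[i] for w, t in zip(weights, target_nodes)) for i in range(dim)]
        )
    return bridged


def concat_fusion(a: Vector, b: Vector) -> Vector:
    # Baseline: concatenation keeps both inputs and selects nothing itself.
    return a + b


def gated_fusion(
    a: Vector, b: Vector, gate_weights: Vector, bias: float = 0.0
) -> Tuple[Vector, float]:
    """Hypothetical adaptive selection: a scalar gate g in (0, 1), computed from
    both inputs, mixes them as g * a + (1 - g) * b.
    gate_weights must have length len(a) + len(b)."""
    g = sigmoid(dot(gate_weights, a + b) + bias)
    return [g * x + (1.0 - g) * y for x, y in zip(a, b)], g


if __name__ == "__main__":
    vision = [[1.0, 0.0], [0.0, 1.0]]             # toy image-region features
    text = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]   # toy dialogue-history features
    bridged_text = bridge(vision, text)
    w = [0.5, -0.5, 0.25, 0.25]                   # hypothetical, hand-set gate parameters
    for v, t in zip(vision, bridged_text):
        fused, g = gated_fusion(v, t, w)
        print(f"gate={g:.3f} fused={[round(x, 3) for x in fused]}")
```

Unlike `concat_fusion`, which doubles the feature size and defers any selection to later layers, the gate keeps the dimension fixed and makes the vision/text trade-off explicit per node. In a trained model the gate parameters would be learned rather than hand-set.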
