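© Author | Xiaolei Wang (王晓磊)  Affiliation | Renmin University of China

Research interests | Conversational information seeking

This article collects papers on large language models published at top conferences since 2022.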

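Introduction

Late last year, OpenAI released ChatGPT, which swept the world within just a few months. Built on GPT-3.5, this large language model has striking natural language generation and understanding capabilities and can carry out tasks such as dialogue, translation, and summarization much as a human would. Thanks to its strong performance, ChatGPT and the large language models behind it quickly became a hot topic in artificial intelligence, drawing attention and participation from many researchers and developers. This article collects 100 papers on large language models published in 2022 at major top conferences (ACL, EMNLP, ICLR, ICML, NeurIPS, and others). The paper list is also kept up to date in a GitHub repository (https://github.com/RUCAIBox/Top-conference-paper-list); you are welcome to follow and star it.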

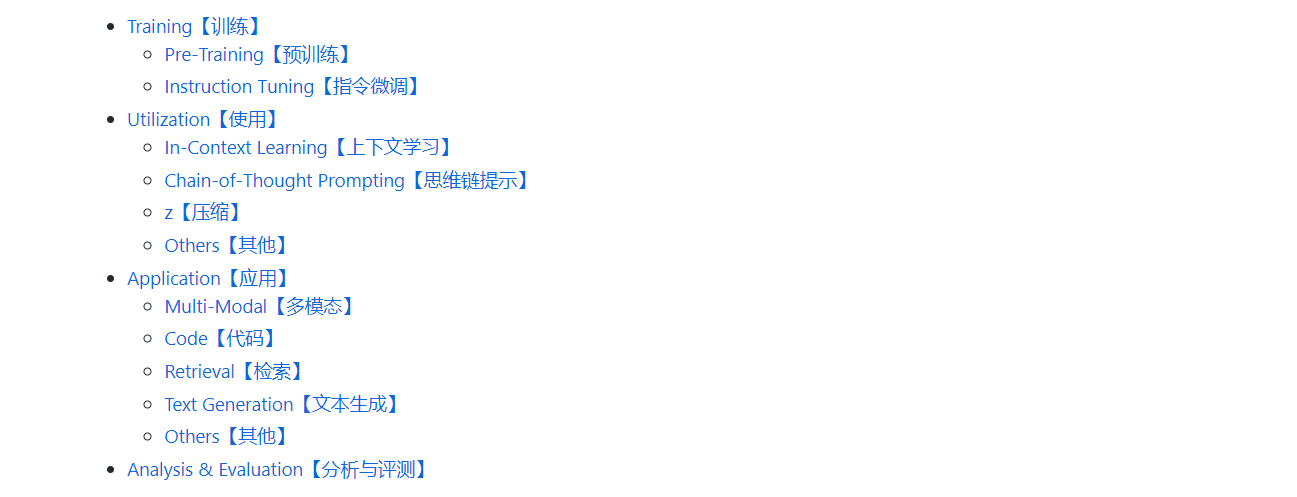

Catalog

- Training
  - Pre-Training
  - Instruction Tuning
- Utilization
  - In-Context Learning
  - Chain-of-Thought Prompting
  - Compression
  - Others
- Application
  - Multi-Modal
  - Code
  - Retrieval
  - Text Generation
  - Others
- Analysis & Evaluation

Training

Pre-Training

- UL2: Unifying Language Learning Paradigms

- Learning to Grow Pretrained Models for Efficient Transformer Training

- Efficient Large Scale Language Modeling with Mixtures of Experts

- Knowledge-in-Context: Towards Knowledgeable Semi-Parametric Language Models

- CodeGen: An Open Large Language Model for Code with Multi-Turn Program Synthesis

- InCoder: A Generative Model for Code Infilling and Synthesis

- CodeBPE: Investigating Subtokenization Options for Large Language Model Pretraining on Source Code

- CodeRetriever: A Large Scale Contrastive Pre-Training Method for Code Search

- UniMax: Fairer and More Effective Language Sampling for Large-Scale Multilingual Pretraining

- GLM-130B: An Open Bilingual Pre-trained Model

- When FLUE Meets FLANG: Benchmarks and Large Pretrained Language Model for Financial Domain

Instruction Tuning

- What Makes Instruction Learning Hard? An Investigation and a New Challenge in a Synthetic Environment

- InstructDial: Improving Zero and Few-shot Generalization in Dialogue through Instruction Tuning

- Learning Instructions with Unlabeled Data for Zero-Shot Cross-Task Generalization

- Super-NaturalInstructions: Generalization via Declarative Instructions on 1600+ NLP Tasks

- Boosting Natural Language Generation from Instructions with Meta-Learning

- Help me write a Poem - Instruction Tuning as a Vehicle for Collaborative Poetry Writing

- Multitask Instruction-based Prompting for Fallacy Recognition

- Not All Tasks Are Born Equal: Understanding Zero-Shot Generalization

- HypeR: Multitask Hyper-Prompted Training Enables Large-Scale Retrieval Generalization

Utilization

In-Context Learning

- What learning algorithm is in-context learning? Investigations with linear models

- Ask Me Anything: A simple strategy for prompting language models

- Large Language Models are Human-Level Prompt Engineers

- Using Both Demonstrations and Language Instructions to Efficiently Learn Robotic Tasks

- kNN Prompting: Beyond-Context Learning with Calibration-Free Nearest Neighbor Inference

- Guess the Instruction! Flipped Learning Makes Language Models Stronger Zero-Shot Learners

- Selective Annotation Makes Language Models Better Few-Shot Learners

- Active Example Selection for In-Context Learning

- Rethinking the Role of Demonstrations: What Makes In-Context Learning Work?

- In-Context Learning for Few-Shot Dialogue State Tracking

- Few-Shot Anaphora Resolution in Scientific Protocols via Mixtures of In-Context Experts

- ProGen: Progressive Zero-shot Dataset Generation via In-context Feedback

- Controllable Dialogue Simulation with In-context Learning

- Thinking about GPT-3 In-Context Learning for Biomedical IE? Think Again

- XRICL: Cross-lingual Retrieval-Augmented In-Context Learning for Cross-lingual Text-to-SQL Semantic Parsing

- On the Compositional Generalization Gap of In-Context Learning

- Towards In-Context Non-Expert Evaluation of Reflection Generation for Counselling Conversations

- Towards Few-Shot Identification of Morality Frames using In-Context Learning

Chain-of-Thought Prompting

- ReAct: Synergizing Reasoning and Acting in Language Models

- Selection-Inference: Exploiting Large Language Models for Interpretable Logical Reasoning

- Neuro-Symbolic Procedural Planning with Commonsense Prompting

- Language Models Are Greedy Reasoners: A Systematic Formal Analysis of Chain-of-Thought

- PINTO: Faithful Language Reasoning Using Prompt-Generated Rationales

- Decomposed Prompting: A Modular Approach for Solving Complex Tasks

- Complexity-Based Prompting for Multi-step Reasoning

- Automatic Chain of Thought Prompting in Large Language Models

- Compositional Semantic Parsing with Large Language Models

- Self-Consistency Improves Chain of Thought Reasoning in Language Models

- Least-to-Most Prompting Enables Complex Reasoning in Large Language Models

- Entailer: Answering Questions with Faithful and Truthful Chains of Reasoning

- Iteratively Prompt Pre-trained Language Models for Chain of Thought

- ConvFinQA: Exploring the Chain of Numerical Reasoning in Conversational Finance Question Answering

- Induced Natural Language Rationales and Interleaved Markup Tokens Enable Extrapolation in Large Language Models

Compression

- Understanding and Improving Knowledge Distillation for Quantization Aware Training of Large Transformer Encoders

- The Optimal BERT Surgeon: Scalable and Accurate Second-Order Pruning for Large Language Models

- AlphaTuning: Quantization-Aware Parameter-Efficient Adaptation of Large-Scale Pre-Trained Language Models

Others

- BBTv2: Towards a Gradient-Free Future with Large Language Models

- Compositional Task Representations for Large Language Models

- Just Fine-tune Twice: Selective Differential Privacy for Large Language Models

Application

Multi-Modal

- Visual Classification via Description from Large Language Models

- Socratic Models: Composing Zero-Shot Multimodal Reasoning with Language

- Plug-and-Play VQA: Zero-shot VQA by Conjoining Large Pretrained Models with Zero Training

Code

- DocPrompting: Generating Code by Retrieving the Docs

- Planning with Large Language Models for Code Generation

- CodeT: Code Generation with Generated Tests

- Language Models Can Teach Themselves to Program Better

Retrieval

- Promptagator: Few-shot Dense Retrieval From 8 Examples

- Recitation-Augmented Language Models

- Generate rather than Retrieve: Large Language Models are Strong Context Generators

- QUILL: Query Intent with Large Language Models using Retrieval Augmentation and Multi-stage Distillation

Text Generation

- Generating Sequences by Learning to Self-Correct

- RankGen: Improving Text Generation with Large Ranking Models

- Eliciting Knowledge from Large Pre-Trained Models for Unsupervised Knowledge-Grounded Conversation

Others

- Systematic Rectification of Language Models via Dead-end Analysis

- Reward Design with Language Models

- Bidirectional Language Models Are Also Few-shot Learners

- Composing Ensembles of Pre-trained Models via Iterative Consensus

- Binding Language Models in Symbolic Languages

- Mind's Eye: Grounded Language Model Reasoning through Simulation

Analysis & Evaluation

- WikiWhy: Answering and Explaining Cause-and-Effect Questions

- ROSCOE: A Suite of Metrics for Scoring Step-by-Step Reasoning

- Quantifying Memorization Across Neural Language Models

- Mass-Editing Memory in a Transformer

- Multi-lingual Evaluation of Code Generation Models

- STREET: A Multi-Task Structured Reasoning and Explanation Benchmark

- Leveraging Large Language Models for Multiple Choice Question Answering

- Broken Neural Scaling Laws

- Language models are multilingual chain-of-thought reasoners

- Language Models are Realistic Tabular Data Generators

- Task Ambiguity in Humans and Language Models

- Discovering Latent Knowledge in Language Models Without Supervision

- Prompting GPT-3 To Be Reliable

- Large language models are few-shot clinical information extractors

- How Large Language Models are Transforming Machine-Paraphrase Plagiarism

- Neural Theory-of-Mind? On the Limits of Social Intelligence in Large LMs

- SLING: Sino Linguistic Evaluation of Large Language Models

- A Systematic Investigation of Commonsense Knowledge in Large Language Models

- Lexical Generalization Improves with Larger Models and Longer Training

- What do Large Language Models Learn beyond Language?

- Probing for Understanding of English Verb Classes and Alternations in Large Pre-trained Language Models
