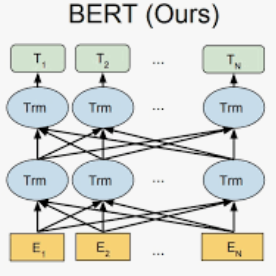

Transformer-based language models have achieved significant success across many domains. However, the data-hungry transformer architecture requires large amounts of labeled data, which are hard to obtain in low-resource settings, i.e., few-shot learning (FSL). The main challenge of FSL is that robust models are difficult to train from a small number of samples, which frequently leads to overfitting. Here we present Mask-BERT, a simple and modular framework that helps BERT-based architectures tackle FSL. The proposed approach differs fundamentally from existing FSL strategies such as prompt tuning and meta-learning. The core idea is to selectively apply masks to text inputs to filter out irrelevant information, guiding the model to focus on the discriminative tokens that influence its predictions. In addition, we introduce a contrastive learning loss that makes text representations from different categories more separable and representations from the same category more compact. Experimental results on public benchmark datasets demonstrate the effectiveness of Mask-BERT.
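The two mechanisms in the abstract (masking non-discriminative tokens and a contrastive loss over class representations) can be illustrated with a small, framework-free sketch. This is not Mask-BERT's implementation. The per-token importance `scores`, the `keep_ratio` parameter, the policy of replacing dropped tokens with `[MASK]`, and the helper names `mask_irrelevant_tokens` and `supervised_contrastive_loss` are all hypothetical. For the contrastive term, the sketch swaps in a supervised contrastive (SupCon-style) loss, which rewards compact same-class embeddings and separable different-class embeddings; the paper's exact loss may differ.

```python
import math
from typing import List, Sequence

MASK_TOKEN = "[MASK]"  # BERT's mask token; the replacement policy is an assumption


def mask_irrelevant_tokens(tokens: Sequence[str], scores: Sequence[float],
                           keep_ratio: float = 0.5) -> List[str]:
    """Keep the highest-scoring tokens and replace the rest with [MASK].

    `scores` is a hypothetical per-token importance signal (e.g. attention
    or gradient saliency); how Mask-BERT actually selects tokens may differ.
    """
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")
    n_keep = max(1, math.ceil(len(tokens) * keep_ratio))
    # Rank positions by descending score, breaking ties by position.
    ranked = sorted(range(len(tokens)), key=lambda i: (-scores[i], i))
    keep = set(ranked[:n_keep])
    return [tok if i in keep else MASK_TOKEN for i, tok in enumerate(tokens)]


def _l2_normalize(v: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def supervised_contrastive_loss(embeddings: Sequence[Sequence[float]],
                                labels: Sequence[int],
                                temperature: float = 0.1) -> float:
    """SupCon-style loss (Khosla et al., 2020), used here as a stand-in.

    Lower values mean same-label embeddings are closer (more compact) and
    different-label embeddings are farther apart (more separable).
    """
    z = [_l2_normalize(e) for e in embeddings]
    n = len(z)
    total, n_anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # an anchor with no same-label partner adds no term
        # Temperature-scaled cosine similarity to every other sample.
        sims = {j: sum(a * b for a, b in zip(z[i], z[j])) / temperature
                for j in range(n) if j != i}
        # Numerically stable log-sum-exp over all non-anchor samples.
        m = max(sims.values())
        log_denom = m + math.log(sum(math.exp(s - m) for s in sims.values()))
        # Average negative log-probability assigned to each positive.
        total += -sum(sims[p] - log_denom for p in positives) / len(positives)
        n_anchors += 1
    return total / n_anchors if n_anchors else 0.0


if __name__ == "__main__":
    print(mask_irrelevant_tokens(["the", "movie", "was", "brilliant"],
                                 [0.05, 0.30, 0.05, 0.60]))
    # prints ['[MASK]', 'movie', '[MASK]', 'brilliant']
    compact = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
    mixed = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]]
    labels = [0, 0, 1, 1]
    print(supervised_contrastive_loss(compact, labels) <
          supervised_contrastive_loss(mixed, labels))  # prints True
```

In an actual pipeline, one would presumably feed the masked token sequence to a BERT encoder and add a contrastive term like this to the classification loss; plain Python lists are used here only to keep the example self-contained.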
