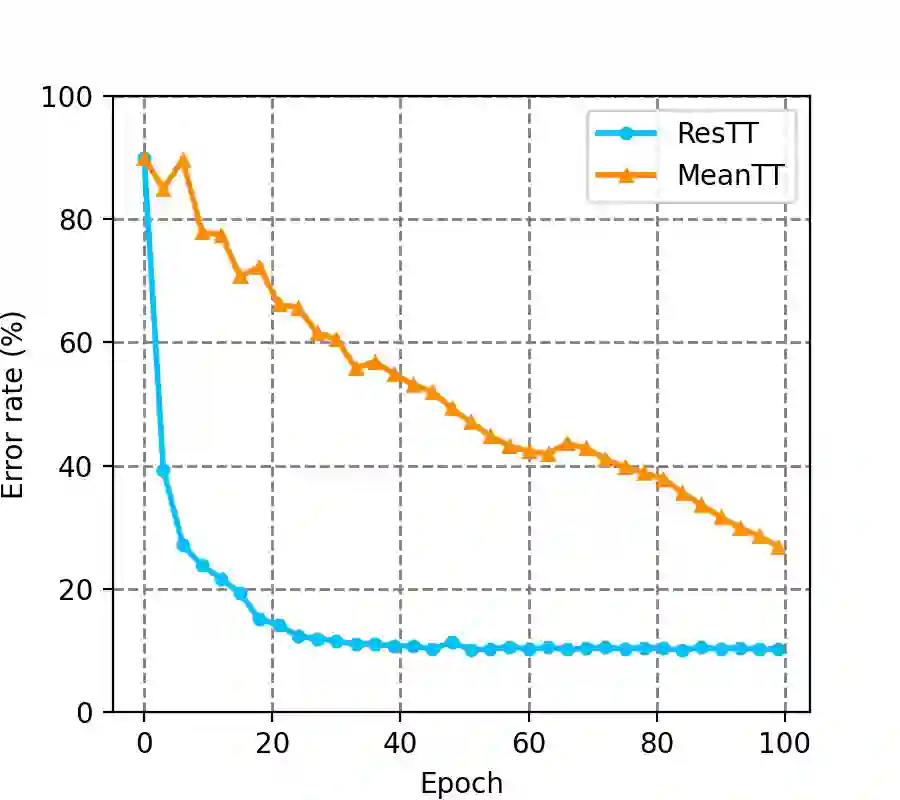

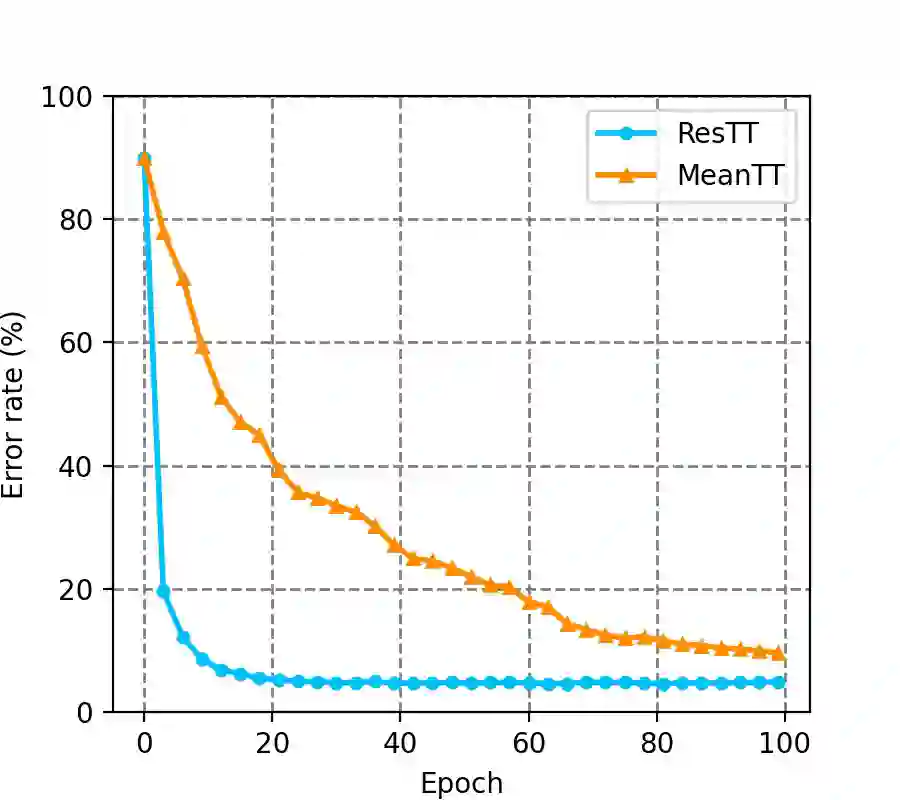

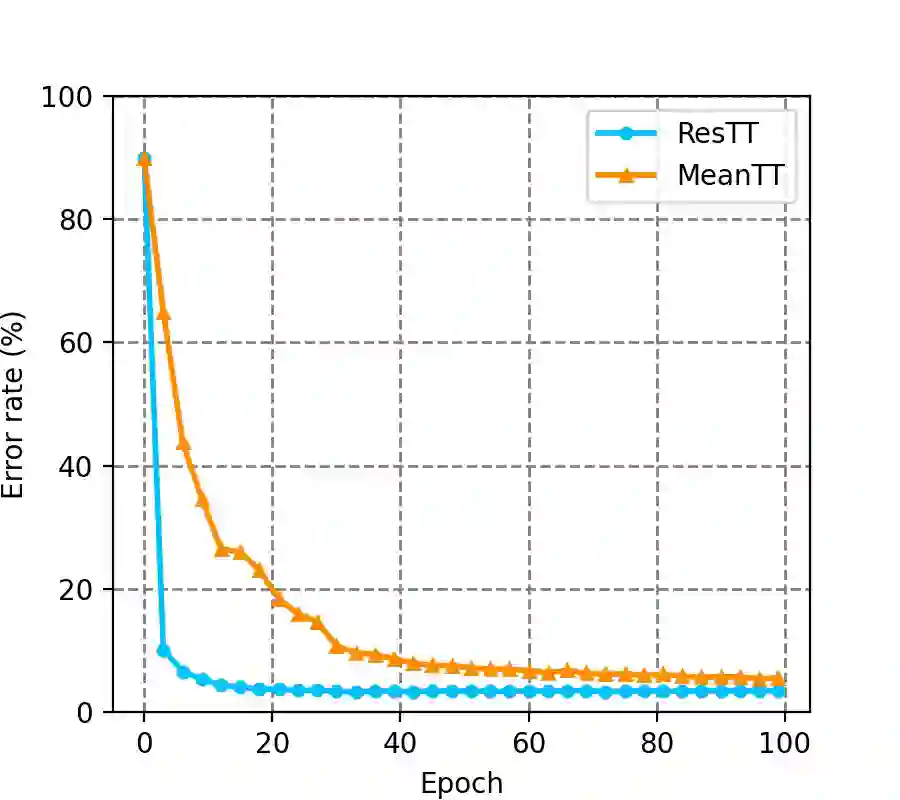

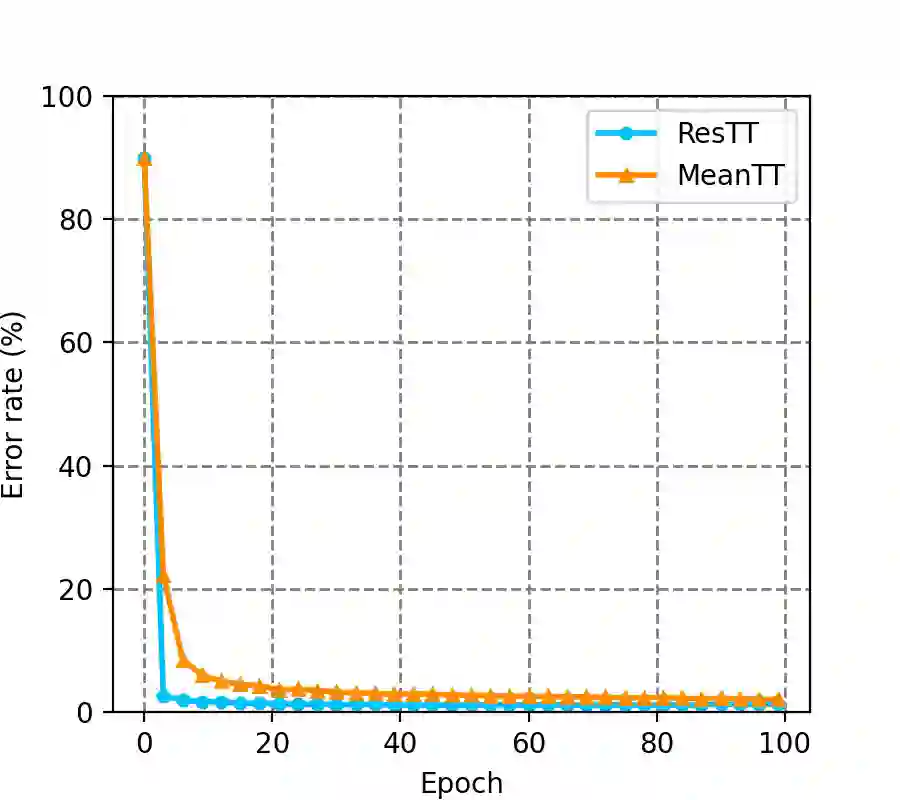

The Tensor Train (TT) approach has been successfully applied to modelling multilinear interactions among features. Nevertheless, existing models lack flexibility and generalizability, as they model only a single type of high-order correlation. In practice, multiple multilinear correlations may exist within the features. In this paper, we present a novel Residual Tensor Train (ResTT), which integrates the merits of TT and residual structure to capture multilinear feature correlations, from low to high orders, within the same model. In particular, we prove that the fully-connected layer in neural networks and the Volterra series can be regarded as special cases of ResTT. Furthermore, based on a mean-field analysis, we derive a weight-initialization rule that stabilizes the training of ResTT. We prove that this rule is much more relaxed than that of TT, which means ResTT can easily address the vanishing and exploding gradient problem found in current TT models. Numerical experiments demonstrate that ResTT outperforms state-of-the-art tensor network approaches and is competitive with benchmark deep learning models on the MNIST and Fashion-MNIST datasets.
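To make the contrast between a single-order TT model and a residual variant concrete, the following is a minimal NumPy sketch. The abstract does not state the update rule, so the additive skip connection used here, the core shape `(r, d, r)`, and the function names `tt_forward` and `restt_forward` are all assumptions made for illustration rather than the paper's definition.

```python
import numpy as np

def tt_forward(cores, xs, h0):
    """Plain TT contraction (illustrative).

    Each step multiplies one more feature vector into the hidden state,
    so the output is a single order-N multilinear form in x_1, ..., x_N.
    """
    h = h0
    for W, x in zip(cores, xs):
        # W: (r, d, r). Contract the left bond with h and the feature index with x.
        h = np.einsum("i,idj,d->j", h, W, x)
    return h

def restt_forward(cores, xs, h0):
    """Residual variant (assumed form: identity skip added at every step).

    Expanding the recursion yields h0 plus terms of order 1, 2, ..., N,
    i.e. several multilinear correlations inside one model.
    """
    h = h0
    for W, x in zip(cores, xs):
        # Skip connection keeps the lower-order terms alive.
        h = h + np.einsum("i,idj,d->j", h, W, x)
    return h

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    r, d, n = 4, 3, 5  # bond dimension, feature dimension, number of features
    cores = [0.1 * rng.normal(size=(r, d, r)) for _ in range(n)]
    xs = [rng.normal(size=d) for _ in range(n)]
    h0 = np.ones(r)
    print("TT   :", tt_forward(cores, xs, h0))
    print("ResTT:", restt_forward(cores, xs, h0))
```

With two features, the residual recursion in this sketch expands to `h0 + t1 + t2 + t12`, where `t1` and `t2` are first-order terms and `t12` is the second-order term that the plain TT contraction returns on its own. This is one way to read the abstract's "from low to high orders" claim under the stated assumptions.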
