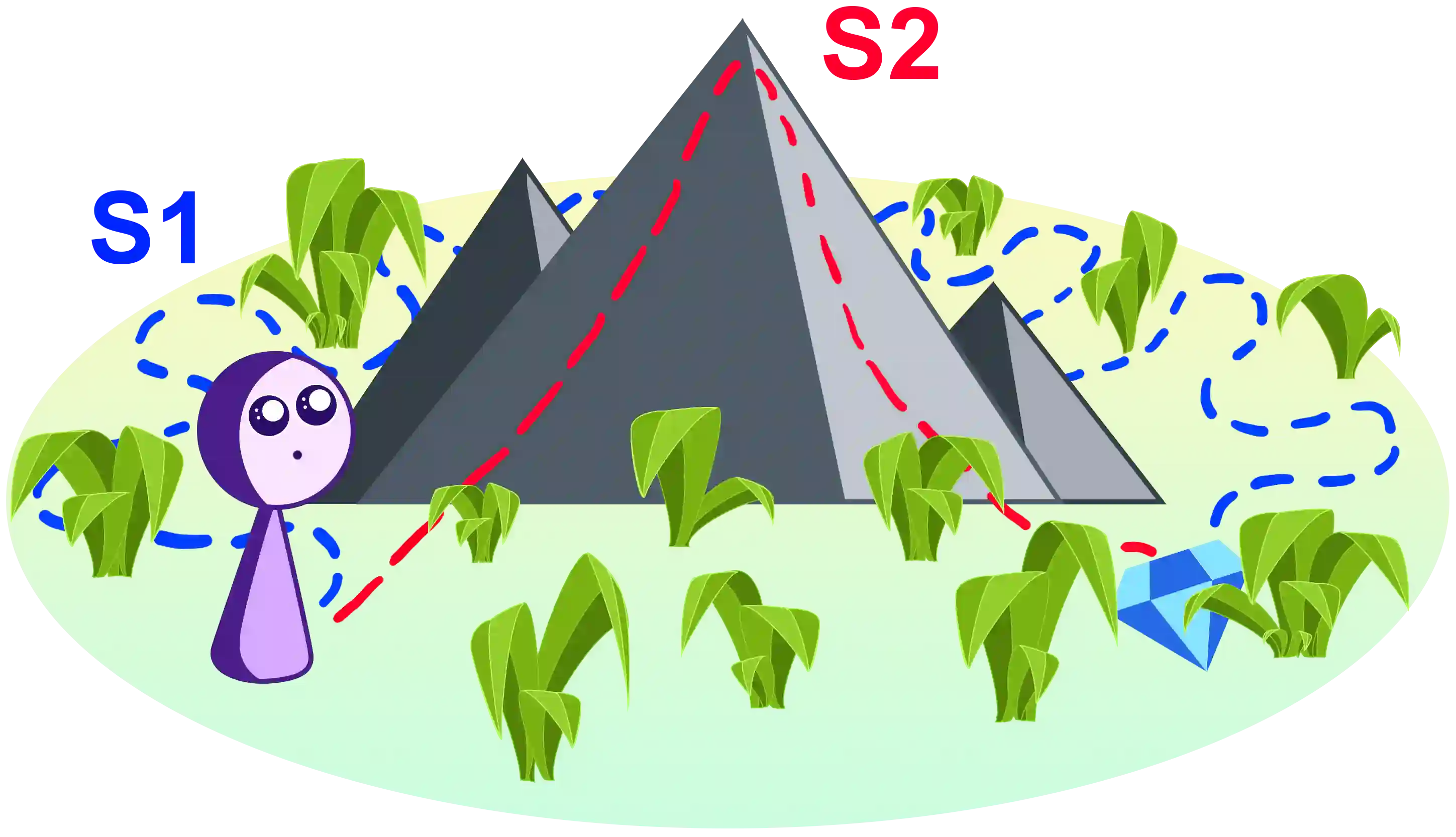

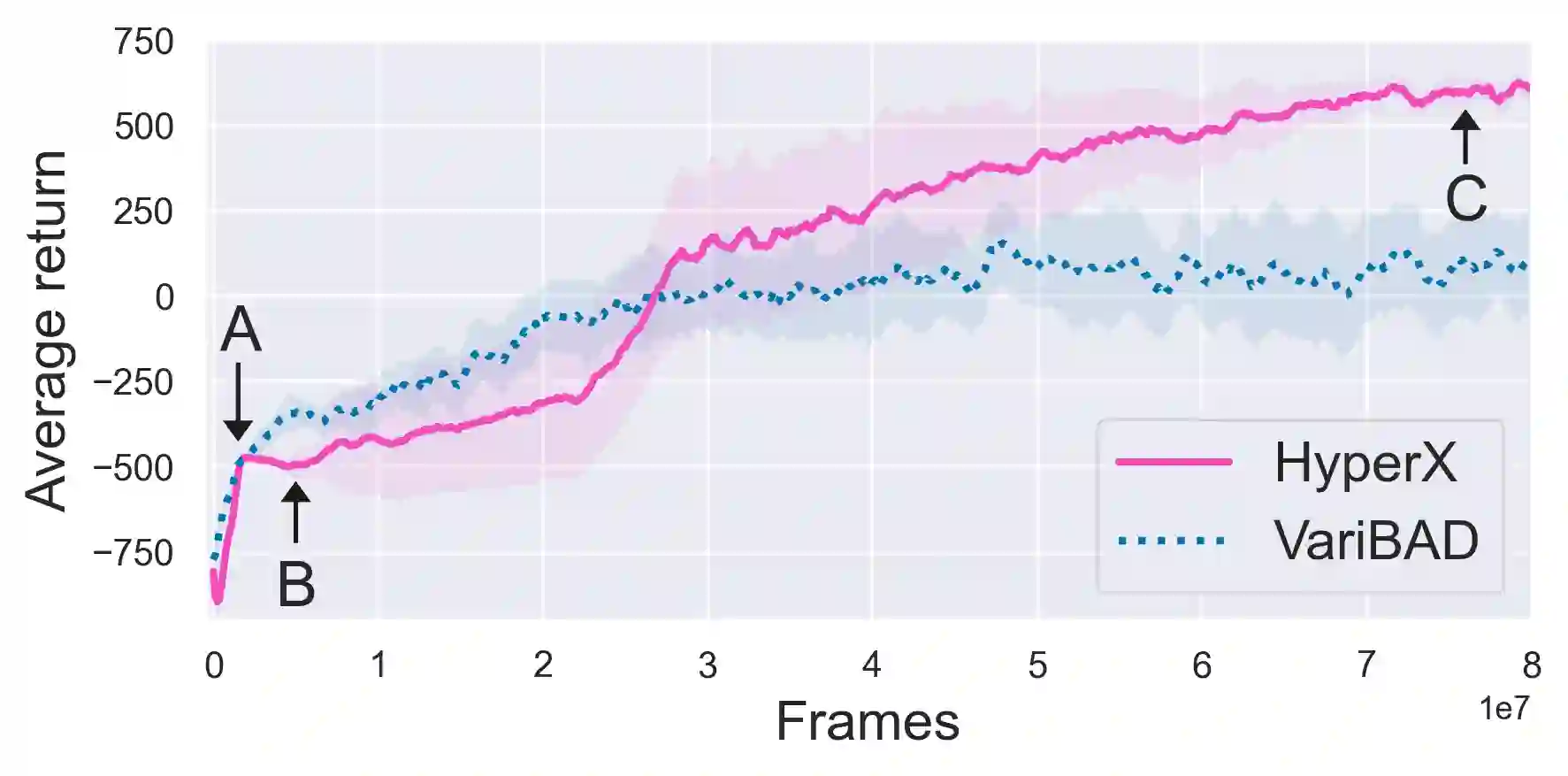

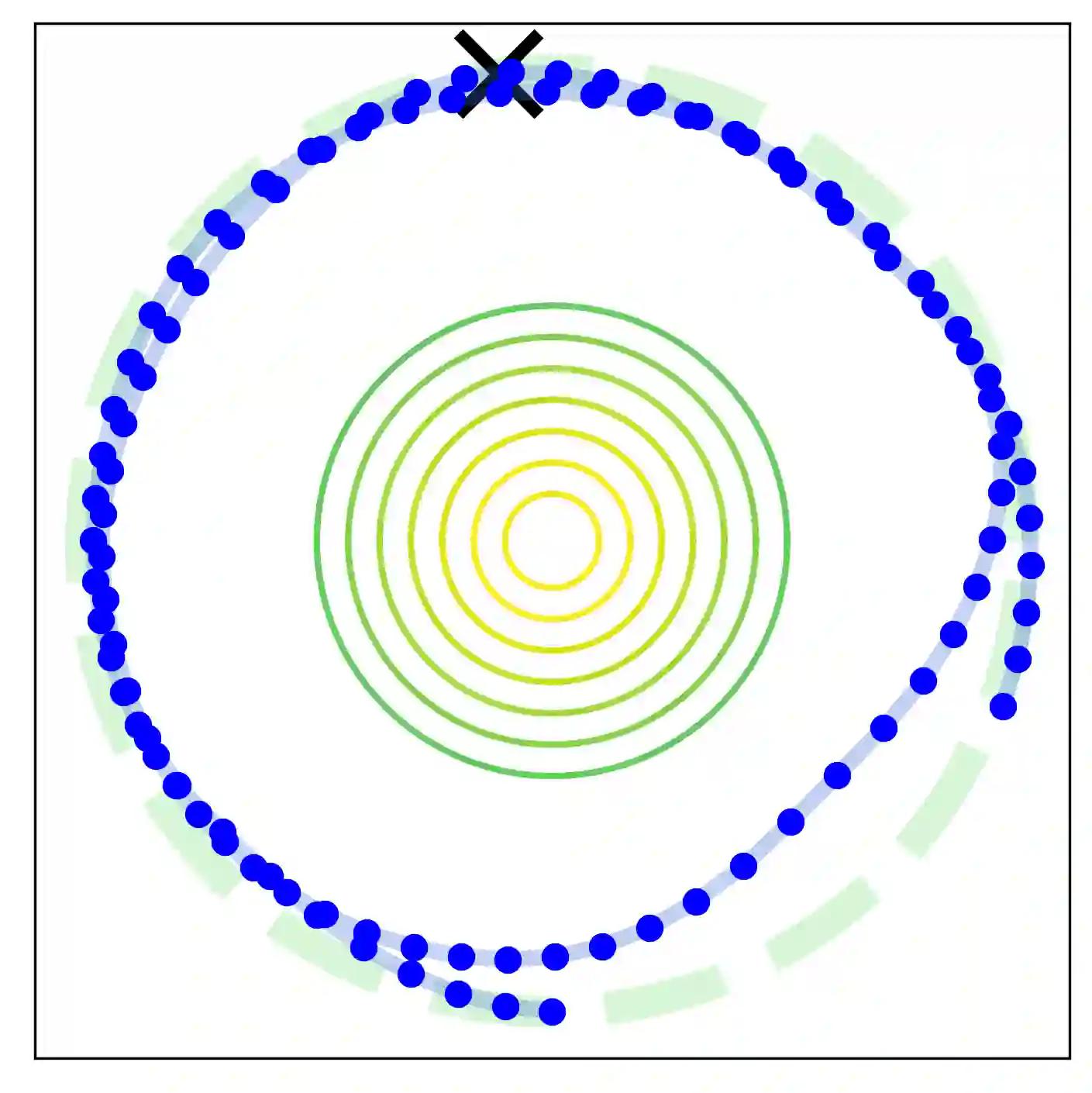

To rapidly learn a new task, it is often essential for agents to explore efficiently -- especially when performance matters from the first timestep. One way to learn such behaviour is via meta-learning. Many existing methods however rely on dense rewards for meta-training, and can fail catastrophically if the rewards are sparse. Without a suitable reward signal, the need for exploration during meta-training is exacerbated. To address this, we propose HyperX, which uses novel reward bonuses for meta-training to explore in approximate hyper-state space (where hyper-states represent the environment state and the agent's task belief). We show empirically that HyperX meta-learns better task-exploration and adapts more successfully to new tasks than existing methods.

翻译:要迅速学习新任务,代理人往往必须高效率地探索 -- -- 特别是在从第一个时间步开始需要业绩的时候。学习这种行为的方法之一是元化学习。许多现有方法都依赖于对元培训的密集奖励,如果没有适当的奖励信号,在元培训期间进行勘探的需要就会更加严重。为了解决这个问题,我们提议HyperX,它使用新颖的元培训奖励奖金来探索大约超国家空间(超国家代表环境状况和代理人的任务信念 ) 。我们从经验上表明HyperX元的元利恩比现有方法更成功的任务探索和适应新任务。