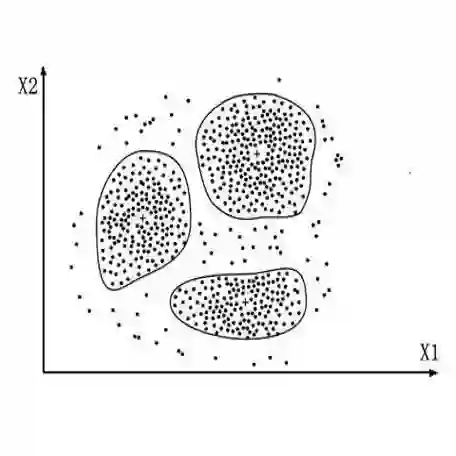

Distributional text clustering yields semantically informative representations and captures the relatedness between each word and a set of semantic cluster centroids. We extend neural text clustering to text classification: cluster centers are induced by a latent variable model and interact with distributional word embeddings, which enriches token representations and measures the relatedness between each token and each learnable cluster centroid. The proposed method jointly learns the word cluster centroids and the cluster-token alignments. It achieves state-of-the-art results on multiple benchmark datasets, indicating that the proposed cluster-token alignment mechanism benefits text classification. Our qualitative analysis further shows that the text representations learned by the model accord well with intuition.
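
To make the idea of cluster-token alignment concrete, the following is a minimal sketch and not the model described above. It assumes a scaled dot-product score between tokens and centroids, a softmax to turn scores into soft alignments, and concatenation to enrich each token; the names `softmax` and `align_tokens` are hypothetical, and the centroids are random stand-ins for what would be learned parameters induced by the latent variable model.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def align_tokens(tokens, centroids):
    """Return (alignment, enriched) for token and centroid vectors.

    alignment[i][k] is the soft relatedness of token i to centroid k.
    enriched[i] is token i concatenated with its alignment-weighted
    mixture of centroids. Both choices are assumptions for illustration.
    """
    dim = len(centroids[0])
    alignment, enriched = [], []
    for tok in tokens:
        # Scaled dot-product score between the token and every centroid.
        scores = [sum(t * c for t, c in zip(tok, cen)) / math.sqrt(dim)
                  for cen in centroids]
        weights = softmax(scores)
        # Weighted mixture of centroids: a cluster-level view of this token.
        mixture = [sum(w * cen[j] for w, cen in zip(weights, centroids))
                   for j in range(dim)]
        alignment.append(weights)
        enriched.append(list(tok) + mixture)
    return alignment, enriched


rng = random.Random(0)
# 3 tokens of dimension 4 (stand-ins for pretrained word embeddings).
tokens = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(3)]
# 2 centroids, fixed here; in a trained model these would be learned.
centroids = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(2)]
alignment, enriched = align_tokens(tokens, centroids)
```

Each row of `alignment` is a probability distribution over centroids, and each enriched token has twice the original dimension. A classifier would then pool the enriched tokens and feed them to an output layer, with centroids and classifier trained jointly.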
