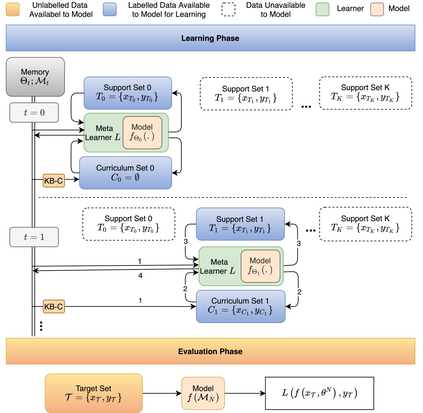

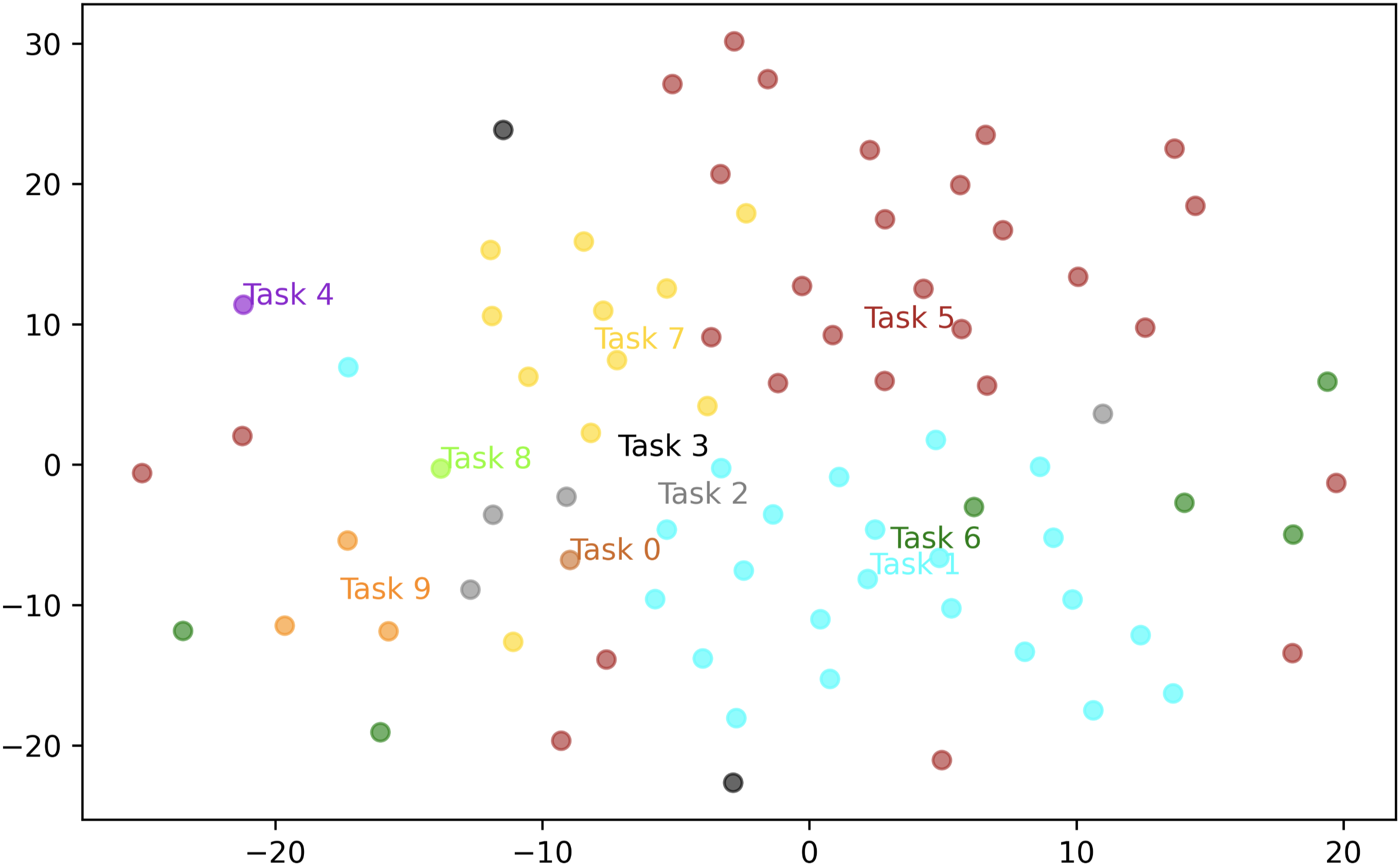

Continual relation extraction is an important task that focuses on extracting new facts incrementally from unstructured text. Given the sequential arrival order of the relations, this task is prone to two serious challenges, namely catastrophic forgetting and order-sensitivity. We propose a novel curriculum-meta learning method to tackle the above two challenges in continual relation extraction. We combine meta learning and curriculum learning to quickly adapt model parameters to a new task and to reduce interference of previously seen tasks on the current task. We design a novel relation representation learning method through the distribution of domain and range types of relations. Such representations are utilized to quantify the difficulty of tasks for the construction of curricula. Moreover, we also present novel difficulty-based metrics to quantitatively measure the extent of order-sensitivity of a given model, suggesting new ways to evaluate model robustness. Our comprehensive experiments on three benchmark datasets show that our proposed method outperforms the state-of-the-art techniques. The code is available at the anonymous GitHub repository: https://github.com/wutong8023/AAAI_CML.

翻译:持续关系提取是一项重要任务,重点是从没有结构的文本中逐步提取新的事实。鉴于关系的先后到来顺序,这项任务容易遇到两个严重挑战,即灾难性的遗忘和秩序敏感性。我们提议采用新的课程-元学习方法,以解决上述两个在持续关系提取方面的挑战。我们把元学习和课程学习结合起来,以便迅速使模型参数适应新的任务,并减少以前所看到的任务对当前任务的干扰。我们通过分配领域和范围关系类型,设计一种新的关系代表学习方法。这种表达方法被用来量化课程建设任务的困难。此外,我们还提出了新的基于困难的衡量标准,以量化某一模式的秩序敏感性程度,建议新的方法来评价模型的稳健性。我们在三个基准数据集上的全面实验表明,我们拟议的方法超越了最新技术。该代码可在匿名的GitHub存放处查阅:https://github.com/wutong8023/AAI_CML。

相关内容

Source: Apple - iOS 8