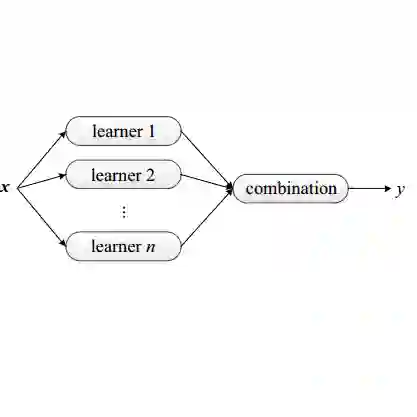

Deep learning has contributed greatly to many recent successes in artificial intelligence. Today, it is possible to train models with thousands of layers and hundreds of billions of parameters. Large-scale deep models have achieved great success, but their enormous computational complexity and storage requirements make them extremely difficult to deploy in real-time applications. At the same time, dataset size remains a real problem in many domains: data are often missing, too expensive, or impossible to obtain for other reasons. Ensemble learning partially addresses the problems of small datasets and overfitting. However, in its basic form, the computational complexity of ensemble learning grows linearly with the number of models. We analyzed the impact of the ensemble decision-fusion mechanism and evaluated various methods of combining the members' decisions, including voting algorithms. We used a modified knowledge distillation framework as the decision-fusion mechanism, which additionally compresses the entire ensemble into the weight space of a single model. We showed that knowledge distillation can aggregate knowledge from multiple teachers into a single student model and, at the same computational complexity, yield a better-performing model than one trained in the standard manner. We developed our own method for mimicking the responses of all teachers simultaneously. We tested these solutions on several benchmark datasets. Finally, we presented practical applications of the efficient multi-teacher knowledge distillation framework. In the first example, we used knowledge distillation to develop models that could automate corrosion detection on aircraft fuselages. The second example describes detecting smoke in observation-camera footage to help counter forest wildfires.
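To make the two fusion styles mentioned in the abstract concrete, the following is a minimal sketch, not the authors' method. It contrasts hard majority voting with a generic multi-teacher distillation loss in which the student matches the mean of the teachers' temperature-softened outputs. The function names, the averaging rule, and the `temperature` and `alpha` values are all assumptions chosen for illustration; the abstract does not specify how the modified framework combines teacher responses.

```python
import math
from collections import Counter


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def majority_vote(teacher_logits):
    """Hard fusion: each teacher votes for its highest-scoring class."""
    votes = [max(range(len(l)), key=l.__getitem__) for l in teacher_logits]
    return Counter(votes).most_common(1)[0][0]


def averaged_soft_targets(teacher_logits, temperature):
    """Soft fusion (assumed rule): mean of the teachers' softened distributions."""
    probs = [softmax(l, temperature) for l in teacher_logits]
    n = len(probs)
    return [sum(p[k] for p in probs) / n for k in range(len(probs[0]))]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=4.0, alpha=0.5):
    """alpha * T^2 * KL(teachers || student) + (1 - alpha) * cross-entropy.

    The T^2 factor is the usual rescaling that keeps the soft-target term
    comparable in magnitude to the hard-label term.
    """
    target = averaged_soft_targets(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    kl = sum(t * math.log(t / s)
             for t, s in zip(target, student_soft) if t > 0)
    ce = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


if __name__ == "__main__":
    # Three hypothetical teachers scoring one sample over three classes.
    teachers = [[2.0, 1.0, 0.0], [0.5, 3.0, 0.0], [1.5, 1.0, 0.0]]
    print("majority vote:", majority_vote(teachers))
    print("soft targets:", averaged_soft_targets(teachers, temperature=4.0))
    print("student loss:", distillation_loss([2.0, 1.5, 0.0], teachers, label=0))
```

Voting requires every teacher to run at inference time, so its cost grows with the ensemble size. The distilled student is trained against the fused soft targets once and afterwards runs as a single model.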
