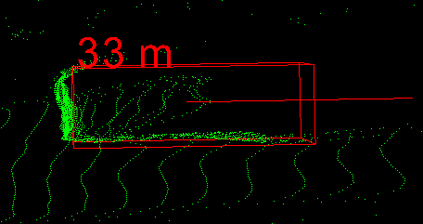

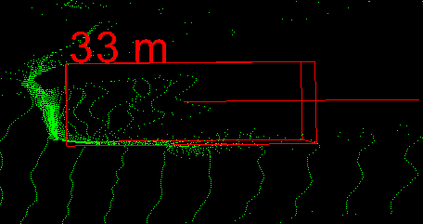

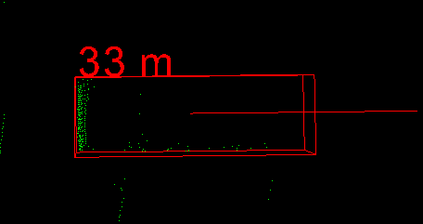

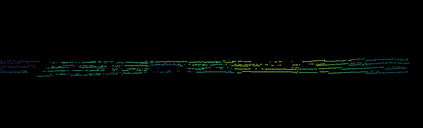

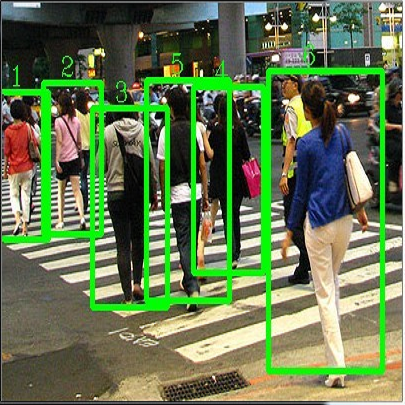

The ability to accurately detect and localize objects is recognized as the most important capability for perception in self-driving cars. In moving from 2D to 3D object detection, the most difficult step is determining the distance from the ego-vehicle to objects. Expensive technology such as LiDAR can provide precise and accurate depth information, so most studies have focused on this sensor, and a performance gap exists between LiDAR-based and camera-based methods. Although many authors have investigated how to fuse LiDAR with RGB cameras, as far as we know there are no studies that fuse LiDAR and stereo in a deep neural network for the 3D object detection task. This paper presents SLS-Fusion, a new approach that fuses data from a 4-beam LiDAR and a stereo camera via a neural network for depth estimation, in order to obtain better dense depth maps and thereby improve 3D object detection performance. Since a 4-beam LiDAR is cheaper than the well-known 64-beam LiDAR, this approach is also classified as a low-cost sensor-based method. Evaluation on the KITTI benchmark shows that the proposed method significantly improves depth estimation performance compared to a baseline method. When applied to 3D object detection, it also achieves a new state of the art among low-cost sensor-based methods.
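To make the two depth sources mentioned in the abstract concrete, here is a minimal sketch of the standard geometry behind them: stereo depth from disparity (z = f · B / d) and a sparse depth map obtained by projecting LiDAR points through a pinhole camera model. This is not the SLS-Fusion network, whose architecture the abstract does not describe. The function names, the pinhole parameters (`fx`, `fy`, `cx`, `cy`), and the assumption that the LiDAR points are already expressed in the camera frame are all illustrative.

```python
def disparity_to_depth(disparity, focal_px, baseline_m):
    """Convert a stereo disparity map (pixels) to depth (meters) via z = f * B / d.

    Pixels with non-positive disparity are treated as invalid and set to 0.0.
    """
    return [
        [focal_px * baseline_m / d if d > 0 else 0.0 for d in row]
        for row in disparity
    ]


def project_points_to_sparse_depth(points_cam, fx, fy, cx, cy, height, width):
    """Project 3D points (x, y, z), assumed to be in the camera frame,
    into a sparse depth map. Pixels with no LiDAR return stay at 0.0.
    """
    sparse = [[0.0] * width for _ in range(height)]
    for x, y, z in points_cam:
        if z <= 0:
            continue  # point is behind the camera
        u = int(round(fx * x / z + cx))  # column index
        v = int(round(fy * y / z + cy))  # row index
        if 0 <= u < width and 0 <= v < height:
            # If several points hit the same pixel, keep the nearest one.
            if sparse[v][u] == 0.0 or z < sparse[v][u]:
                sparse[v][u] = z
    return sparse
```

With a 4-beam LiDAR, the sparse map would contain only a few populated rows, which is why a learned model is needed to combine it with the dense but less accurate stereo estimate.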
