[PaoPao One Minute] Stereo Camera Localization in 3D LiDAR Maps

One minute a day to read through papers from top robotics conferences

Title: Stereo Camera Localization in 3D LiDAR Maps

Source: 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)

Translator: 王凯东 (Wang Kaidong)

Reviewers: 陈世浪 (Chen Shilang), 颜青松 (Yan Qingsong)

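Individuals are welcome to share this post to their WeChat Moments. Other organizations or media outlets that wish to republish it should leave a message on the official account to request authorization.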

Summary

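3D LiDAR sensors have arrived, and simultaneous localization and mapping (SLAM) techniques have flourished with them. As a result, accurate 3D maps are now readily available, and many researchers have turned their attention to localization within a previously acquired 3D map. This paper proposes a novel, lightweight, camera-only visual localization algorithm that localizes within a prior 3D LiDAR map.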

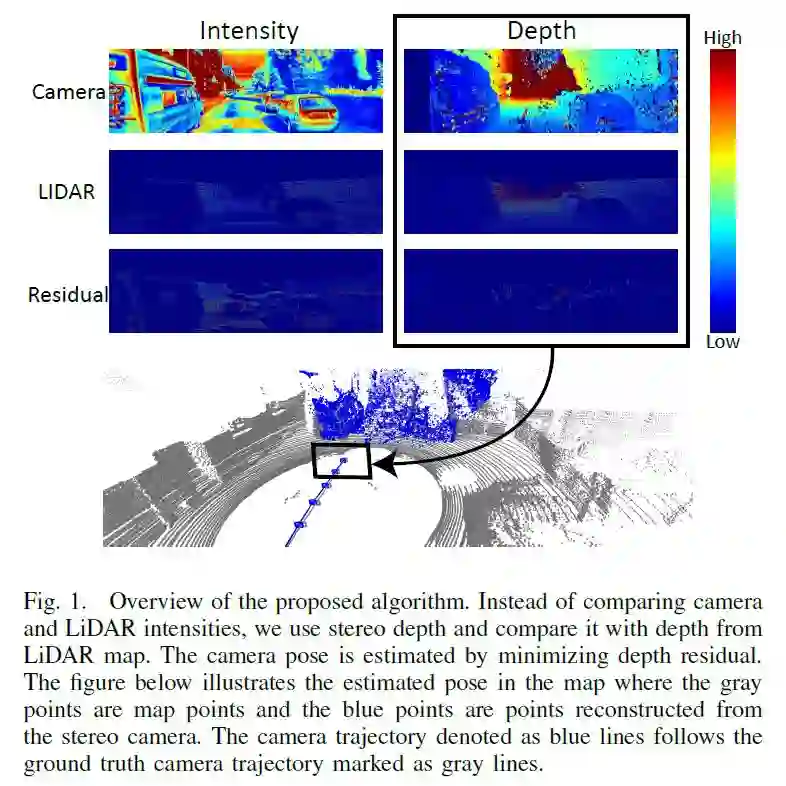

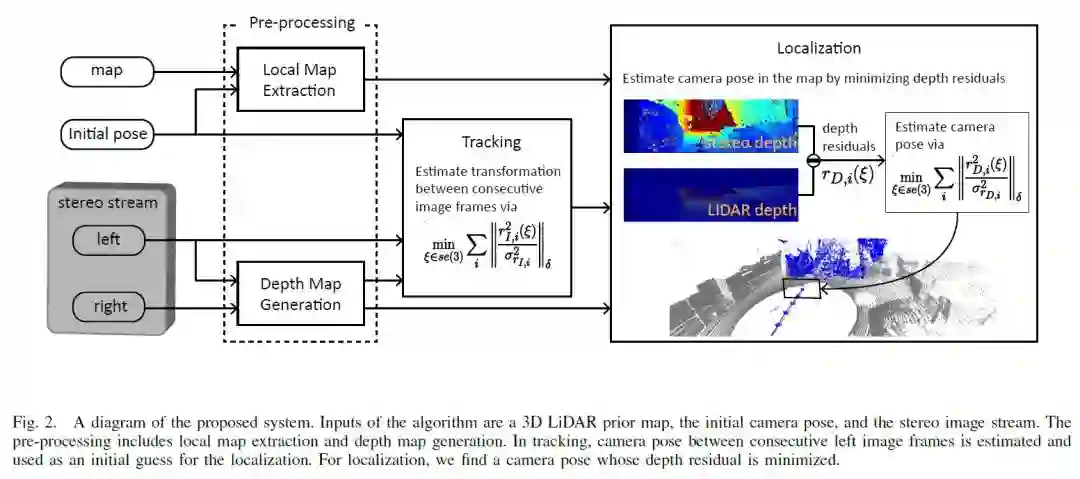

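Our goal is to reach consumer-grade GPS accuracy using vision alone in urban environments, where the GPS signal is unreliable. A stereo camera supplies the depth: the depth from the stereo disparity map is matched against the 3D LiDAR map. The full six-degree-of-freedom (6-DOF) camera pose is then estimated by minimizing a depth residual. Visual tracking provides a good initial guess for the pose, and this is what allows the depth residual to be applied successfully to camera pose estimation. The method runs online, and its average localization error is comparable to that of state-of-the-art approaches. We validate it as a stand-alone localizer on the KITTI dataset and as a module within a SLAM framework on our own dataset.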
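The first geometric step in the summary above turns a stereo disparity map into depth. For a rectified stereo pair, this follows the standard relation Z = f·B/d. The minimal NumPy sketch below assumes such a pair with focal length `focal_px` (pixels) and baseline `baseline_m` (meters). It then lifts the depth map into a camera-frame point cloud that could be compared with a LiDAR map. The function names and parameters are hypothetical and do not come from the paper, which may filter unreliable disparities differently.

```python
import numpy as np


def disparity_to_depth(disparity, focal_px, baseline_m, min_disp=1e-6):
    """Convert a rectified stereo disparity map (pixels) to metric depth.

    Uses the pinhole stereo relation Z = f * B / d. Pixels whose disparity
    is not greater than min_disp are treated as invalid and set to NaN.
    """
    disparity = np.asarray(disparity, dtype=np.float64)
    depth = np.full(disparity.shape, np.nan)
    valid = disparity > min_disp
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth


def backproject(depth, fx, fy, cx, cy):
    """Lift a depth map to an (N, 3) camera-frame point cloud, skipping NaNs.

    Points are returned in row-major pixel order.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = np.isfinite(depth)
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1)
```

Marking invalid pixels with NaN rather than 0 keeps them from being mistaken for surfaces at zero depth when they are later compared with the map.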

Abstract

As simultaneous localization and mapping (SLAM) techniques have flourished with the advent of 3D Light Detection and Ranging (LiDAR) sensors, accurate 3D maps have become readily available. Many researchers have turned their attention to localization in a previously acquired 3D map. In this paper, we propose a novel and lightweight camera-only visual positioning algorithm that localizes within prior 3D LiDAR maps. We aim to achieve consumer-level global positioning system (GPS) accuracy using vision in urban environments, where the GPS signal is unreliable. By exploiting a stereo camera, we match depth from the stereo disparity map with 3D LiDAR maps. A full six-degree-of-freedom (DOF) camera pose is estimated by minimizing a depth residual. Because visual tracking provides a good initial guess for the localization, the proposed depth residual can be applied successfully to camera pose estimation. Our method runs online, with an average localization error comparable to that of state-of-the-art approaches. We validate the proposed method as a stand-alone localizer using the KITTI dataset and as a module in a SLAM framework using our own dataset.
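The abstract estimates the 6-DOF pose by minimizing a depth residual, starting from an initial guess provided by visual tracking. A small damped Gauss-Newton sketch in NumPy illustrates the idea. This is not the authors' implementation, and the following choices are all assumptions made for illustration:

- the residual definition: stereo depth sampled at the pixel where a LiDAR map point projects, minus that point's camera-frame depth;
- the axis-angle-plus-translation parameterization;
- the finite-difference Jacobian;
- the function names.

`x0` stands in for the visual-tracking initial guess.

```python
import numpy as np


def rodrigues(w):
    """Axis-angle vector (3,) -> 3x3 rotation matrix (Rodrigues' formula)."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    S = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * S + (1.0 - np.cos(theta)) * (S @ S)


def bilinear(img, u, v):
    """Sample img at sub-pixel (u, v); returns NaN outside the image."""
    h, w = img.shape
    out = np.full(u.shape, np.nan)
    ok = (u >= 0) & (v >= 0) & (u <= w - 1.001) & (v <= h - 1.001)
    u0 = np.floor(u[ok]).astype(int)
    v0 = np.floor(v[ok]).astype(int)
    a, b = u[ok] - u0, v[ok] - v0
    out[ok] = ((1 - a) * (1 - b) * img[v0, u0] + a * (1 - b) * img[v0, u0 + 1]
               + (1 - a) * b * img[v0 + 1, u0] + a * b * img[v0 + 1, u0 + 1])
    return out


def depth_residuals(x, map_pts, K, depth_img):
    """Hypothetical depth residual per LiDAR map point for pose x = [w, t].

    x maps world -> camera: p_c = R(w) p_w + t. Each map point is projected
    with intrinsics K, and the stereo depth sampled at that pixel is compared
    with the point's camera-frame depth z. Points behind the camera, outside
    the image, or on invalid (NaN) stereo depth get a residual of 0.
    """
    R, t = rodrigues(x[:3]), x[3:]
    p_c = map_pts @ R.T + t
    z = p_c[:, 2]
    r = np.zeros(len(map_pts))
    front = z > 1e-3
    u = K[0, 0] * p_c[front, 0] / z[front] + K[0, 2]
    v = K[1, 1] * p_c[front, 1] / z[front] + K[1, 2]
    res = bilinear(depth_img, u, v) - z[front]
    r[front] = np.where(np.isnan(res), 0.0, res)
    return r


def estimate_pose(x0, map_pts, K, depth_img, iters=20, eps=1e-6, damping=1e-6):
    """Minimize 0.5 * sum(r^2) over the 6-DOF pose with damped Gauss-Newton.

    The Jacobian uses forward finite differences. A step is accepted only if
    it lowers the cost (simple backtracking), so the cost never increases.
    """
    x = np.asarray(x0, dtype=float).copy()
    r = depth_residuals(x, map_pts, K, depth_img)
    cost = 0.5 * r @ r
    for _ in range(iters):
        J = np.empty((len(r), 6))
        for j in range(6):
            dx = np.zeros(6)
            dx[j] = eps
            J[:, j] = (depth_residuals(x + dx, map_pts, K, depth_img) - r) / eps
        H = J.T @ J + damping * np.eye(6)
        step = -np.linalg.solve(H, J.T @ r)
        scale = 1.0
        for _ in range(10):
            x_new = x + scale * step
            r_new = depth_residuals(x_new, map_pts, K, depth_img)
            cost_new = 0.5 * r_new @ r_new
            if cost_new < cost:
                x, r, cost = x_new, r_new, cost_new
                break
            scale *= 0.5
        else:
            break  # no cost-decreasing step found: treat as converged
    return x, cost
```

The additive update on the axis-angle vector is only reasonable for the small corrections a good tracking prior leaves. A practical system would more likely use analytic Jacobians, an on-manifold SE(3) update, and a robust loss to down-weight occlusions and stereo outliers.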

If you are interested in this paper and want to download the full text, follow the 【泡泡机器人SLAM】 (PaoPao Robot SLAM) WeChat official account (paopaorobot_slam).

Welcome to the PaoPao forum, where experts will answer any questions you have about SLAM.

Whether you have a question to ask or want to browse posts and answer others, the PaoPao forum welcomes you!

PaoPao website: www.paopaorobot.org

PaoPao forum: http://paopaorobot.org/forums/

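All original content from PaoPao Robot SLAM is produced with great effort by members of PaoPao Robot, and we hope you will respect our work. Any republication must credit the 【泡泡机器人SLAM】 (PaoPao Robot SLAM) WeChat official account; infringement will be pursued. You are also welcome to share our posts to your WeChat Moments so that more people can enter the field of SLAM. Let us work together to advance SLAM in China!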

For business cooperation and republication requests, contact liufuqiang_robot@hotmail.com