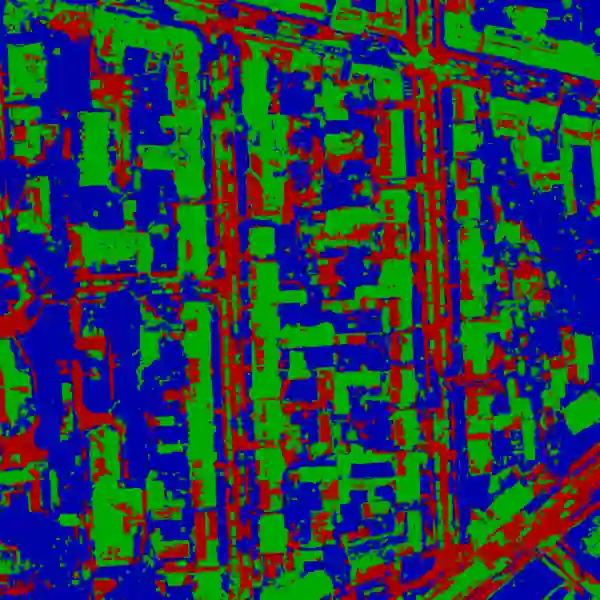

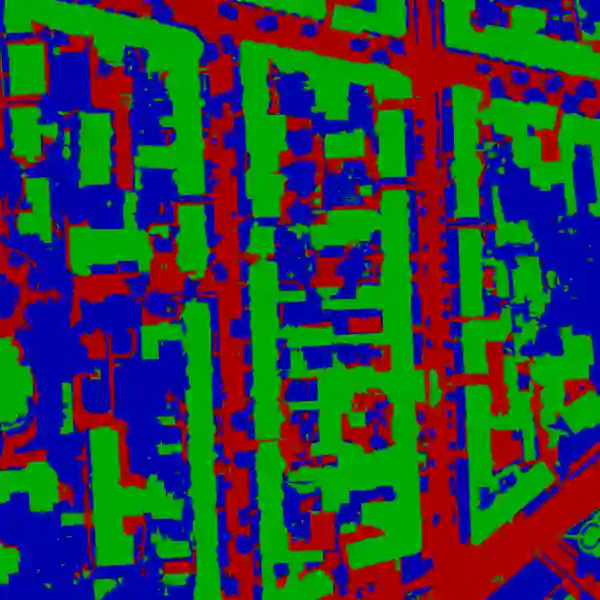

We present a new method that learns to segment and cluster images without labels of any kind. A simple loss based on information theory is used to extract meaningful representations directly from raw images. This is achieved by maximising mutual information of images known to be related by spatial proximity or randomized transformations, which distills their shared abstract content. Unlike much of the work in unsupervised deep learning, our learned function outputs segmentation heatmaps and discrete classifications labels directly, rather than embeddings that need further processing to be usable. The loss can be formulated as a convolution, making it the first end-to-end unsupervised learning method that learns densely and efficiently for semantic segmentation. Implemented using realistic settings on generic deep neural network architectures, our method attains superior performance on COCO-Stuff and ISPRS-Potsdam for segmentation and STL for clustering, beating state-of-the-art baselines.

翻译:我们展示了一种在没有任何标签的情况下学习分解和分组图像的新方法。 基于信息理论的简单损失被用于直接从原始图像中提取有意义的表达方式。 实现这一目的的途径是,通过空间相近或随机变换而发现相关图像的相互信息最大化,这种变换会蒸发其共享的抽象内容。 与未经监督的深层学习中的许多工作不同,我们学习的函数输出分解热图和离散分类标签直接嵌入需要进一步处理才能使用的分类。 损失可以被描述成一个演化过程, 使它成为第一个在语义分解方面快速和高效学习的端到端、 不受监督的学习方法。 在通用的深层神经网络结构上,我们的方法在COCO-Stuff和ISPRS-Potsdam的分解和用于组合、击打最先进的基线的STL上取得了优异性表现。