NOTE: The setup and example code in this README are for training GANs on single GPU. The models are smaller than the ones used in the papers. Please go to link if you are looking for how to reproduce the results in the papers.

Official Chainer implementation for conditional image generation on ILSVRC2012 dataset (ImageNet) with spectral normalization and projection discrimiantor.

Demo movies

Consecutive category morphing movies:

(5x5 panels 128px images) https://www.youtube.com/watch?v=q3yy5Fxs7Lc

(10x10 panels 128px images) https://www.youtube.com/watch?v=83D_3WXpPjQ

Other materials

Generated images

from the model trained on all ImageNet images (1K categories), 128px

from the model trained on dog and cat images (143 categories), 128px

Pretrained models

Movies

4 corners category morph.

References

Takeru Miyato, Toshiki Kataoka, Masanori Koyama, Yuichi Yoshida. Spectral Normalization for Generative Adversarial Networks. ICLR2018. OpenReview

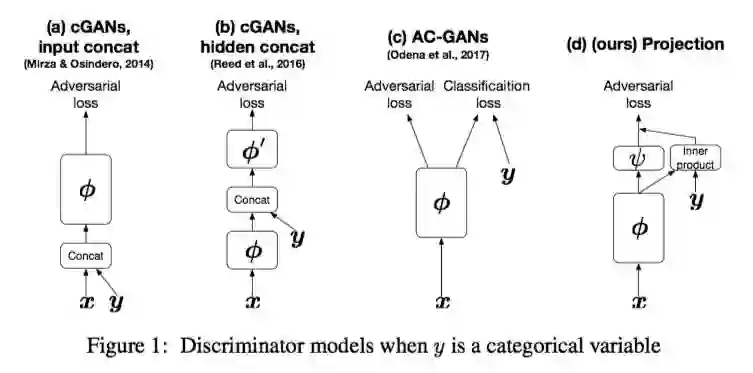

Takeru Miyato, Masanori Koyama. cGANs with Projection Discriminator. ICLR2018. OpenReview

Setup

Install required python libraries:

pip install -r requirements.txt

Download ImageNet dataset:

Please download ILSVRC2012 dataset from http://image-net.org/download-images

Preprocess dataset:

cd datasets

IMAGENET_TRAIN_DIR=/path/to/imagenet/train/

PREPROCESSED_DATA_DIR=/path/to/save_dir/

bash preprocess.sh $IMAGENET_TRAIN_DIR $PREPROCESSED_DATA_DIR

# Make the list of image-label pairs for all images (1000 categories, 1281167 images).

python imagenet.py $PREPROCESSED_DATA_DIR

# Make the list of image-label pairs for dog and cat images (143 categories, 180373 images).

puthon imagenet_dog_and_cat.py $PREPROCESSED_DATA_DIRDownload inception model:

python source/inception/download.py --outfile=datasets/inception_model

Training examples

Spectral normalization + projection discriminator for 64x64 dog and cat images:

LOGDIR = /path/to/logdir

CONFIG = configs/sn_projection_dog_and_cat_64.yml

python train.py --config=$CONFIG --results_dir=$LOGDIR --data_dir=$PREPROCESSED_DATA_DIRSpectral normalization + projection discriminator for 64x64 all ImageNet images:

LOGDIR = /path/to/logdir

CONFIG = configs/sn_projection_64.yml

python train.py --config=$CONFIG --results_dir=$LOGDIR --data_dir=$PREPROCESSED_DATA_DIREvaluation

Calculate inception score (with the original OpenAI implementation)

python evaluations/calc_inception_score.py --config=$CONFIG --snapshot=${LOGDIR}/ResNetGenerator_<iterations>.npz --results_dir=${LOGDIR}/inception_score --splits=10 --tf

Generate images and save them in ${LOGDIR}/gen_images

python evaluations/gen_images.py --config=$CONFIG --snapshot=${LOGDIR}/ResNetGenerator_<iterations>.npz --results_dir=${LOGDIR}/gen_images