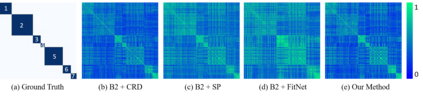

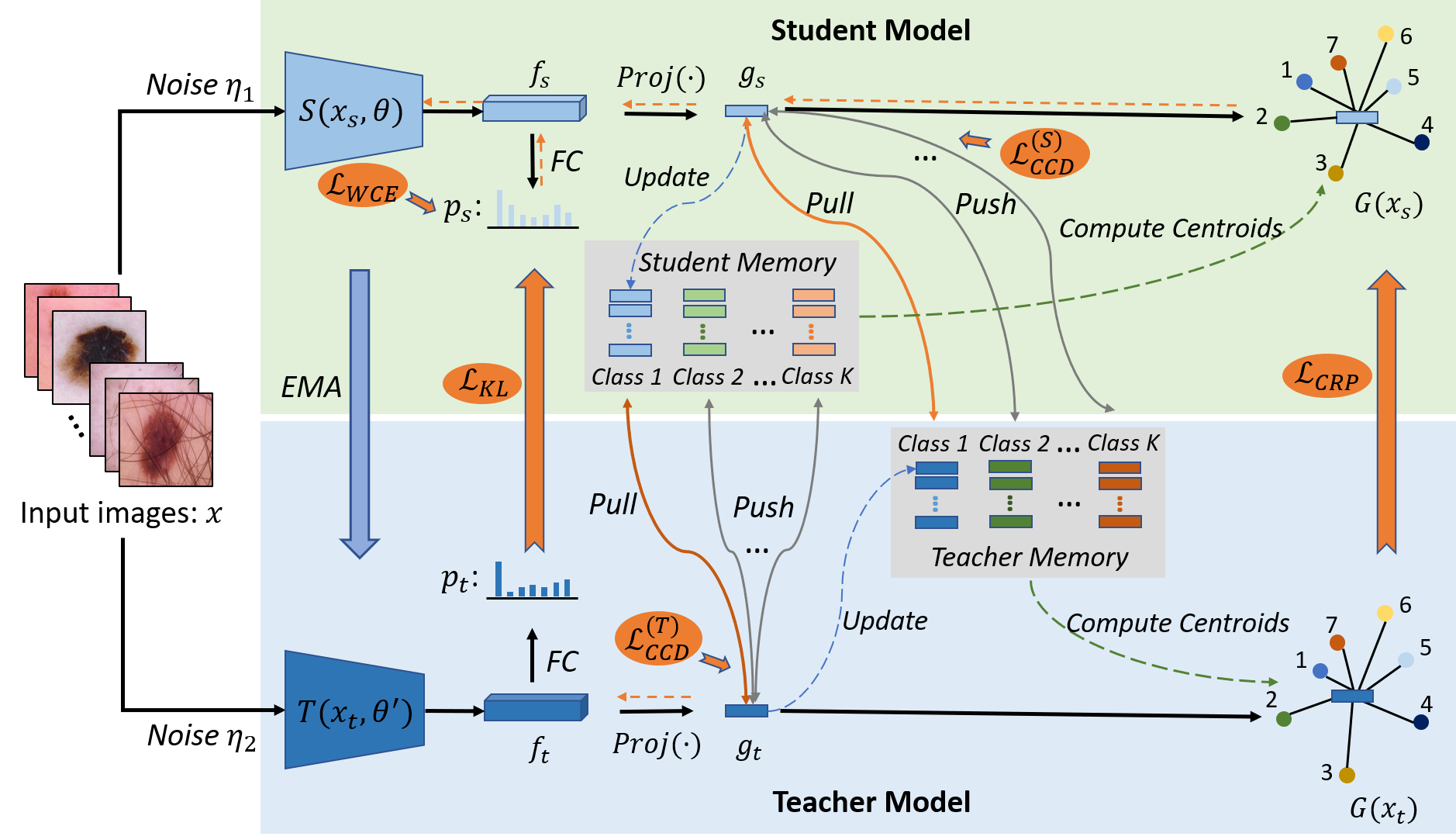

The number of medical images available for training deep classification models is typically very small, which makes these models prone to overfitting the training data. Studies have shown that knowledge distillation (KD), especially the mean-teacher framework, which is more robust to perturbations, can help mitigate overfitting. However, directly transferring KD from computer vision to medical image classification yields inferior performance, because medical images suffer from higher intra-class variance and class imbalance. To address these issues, we propose a novel Categorical Relation-preserving Contrastive Knowledge Distillation (CRCKD) algorithm, which uses the commonly adopted mean-teacher model as the supervisor. Specifically, we propose a novel Class-guided Contrastive Distillation (CCD) module that pulls together positive image pairs from the same class across the teacher and student models, while pushing apart negative image pairs from different classes. With this regularization, the feature distribution of the student model shows higher intra-class similarity and inter-class variance. In addition, we propose a Categorical Relation Preserving (CRP) loss to distill the teacher's relational knowledge in a robust and class-balanced manner. Combining CCD and CRP, our CRCKD algorithm distills relational knowledge more comprehensively. Extensive experiments on the HAM10000 and APTOS datasets demonstrate the superiority of the proposed CRCKD method.
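The abstract does not give the CCD formula, so the following is only a minimal NumPy sketch under stated assumptions. The teacher is treated as an exponential moving average (EMA) of the student, as in the standard mean-teacher framework, and CCD is written as an in-batch, supervised-contrastive-style loss in which a student embedding and a teacher embedding form a positive pair when they share a class label and a negative pair otherwise. The function names (`ema_update`, `l2_normalize`, `class_guided_contrastive_loss`), the decay `alpha=0.99`, and the temperature `tau=0.07` are hypothetical choices for illustration, not details taken from the paper (which may, for example, use projection heads or a memory bank).

```python
import numpy as np


def ema_update(teacher_params, student_params, alpha=0.99):
    """Mean-teacher step: teacher <- alpha * teacher + (1 - alpha) * student.

    Parameters are dicts of NumPy arrays; alpha=0.99 is an assumed decay.
    """
    return {
        name: alpha * teacher_params[name] + (1.0 - alpha) * student_params[name]
        for name in teacher_params
    }


def l2_normalize(x, eps=1e-12):
    # Scale each row to unit length so dot products become cosine similarities.
    x = np.asarray(x, dtype=float)
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)


def class_guided_contrastive_loss(student_feats, teacher_feats, labels, tau=0.07):
    """Hypothetical in-batch sketch of class-guided contrastive distillation.

    Anchor: each student embedding. Positives: teacher embeddings with the same
    label. Negatives: teacher embeddings with a different label. tau is assumed.
    """
    s = l2_normalize(student_feats)
    t = l2_normalize(teacher_feats)
    labels = np.asarray(labels)

    logits = s @ t.T / tau  # (N, N) student-teacher similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # stabilize exp; softmax unchanged
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))

    # Same label -> positive pair; the diagonal is always positive, so no row is empty.
    positive = labels[:, None] == labels[None, :]
    mean_log_prob_pos = (log_prob * positive).sum(axis=1) / positive.sum(axis=1)
    # Minimizing this raises positive similarities relative to negatives.
    return float(-mean_log_prob_pos.mean())


if __name__ == "__main__":
    labels = np.array([0, 0, 1, 1])
    teacher = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
    aligned = teacher + 0.01          # student features already grouped by class
    swapped = teacher[[2, 3, 0, 1]]   # student features sitting in the wrong class
    print(class_guided_contrastive_loss(aligned, teacher, labels))
    print(class_guided_contrastive_loss(swapped, teacher, labels))
```

The demo prints a much smaller loss for `aligned` than for `swapped`, reflecting the pull/push behavior the abstract describes. In a typical mean-teacher loop the teacher receives no gradients and is refreshed with `ema_update` after each student optimization step.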

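The abstract describes the CRP loss only at a high level. One plausible reading, sketched below purely as an assumption and not as the paper's formula, is to summarize each class by a centroid (so every class contributes one reference point however many samples it has), express each sample's relation to those centroids as a temperature-softened similarity distribution, and make the student's distribution match the teacher's with a KL divergence that is averaged within each class before averaging across classes. The centroid construction, the KL objective, the temperature `tau=0.5`, and all function names are hypothetical; the sketch does not attempt to reproduce whatever robustness mechanism the paper uses.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    # Scale each row to unit length so dot products become cosine similarities.
    x = np.asarray(x, dtype=float)
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)


def softmax(x, tau):
    # Temperature-scaled row-wise softmax, shifted by the row max for stability.
    z = x / tau
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def class_centroids(feats, labels, num_classes):
    # One mean (normalized) feature per class, however many samples the class has.
    # Assumes every class appears in the batch; an empty class would yield NaN.
    feats = l2_normalize(feats)
    return np.stack([feats[labels == c].mean(axis=0) for c in range(num_classes)])


def categorical_relation_loss(student_feats, teacher_feats, labels, num_classes,
                              tau=0.5, eps=1e-12):
    """Hypothetical CRP-style loss: the student matches the teacher's
    sample-to-class-centroid relations (an interpretation, not the paper's formula)."""
    labels = np.asarray(labels)
    s = l2_normalize(student_feats)
    t = l2_normalize(teacher_feats)

    # (N, C) relation of each sample to each class centroid, within each model.
    rel_s = s @ class_centroids(student_feats, labels, num_classes).T
    rel_t = t @ class_centroids(teacher_feats, labels, num_classes).T
    p_s, p_t = softmax(rel_s, tau), softmax(rel_t, tau)

    # Per-sample KL(p_t || p_s).
    kl = (p_t * (np.log(p_t + eps) - np.log(p_s + eps))).sum(axis=1)
    # Class-balanced reduction (assumed): average within each class, then across
    # classes, so a frequent class cannot dominate the loss.
    return float(np.mean([kl[labels == c].mean() for c in range(num_classes)]))
```

When the student's features equal the teacher's, the loss is zero; it grows as the student's sample-to-centroid relations drift from the teacher's, for example when the student's class centroids collapse onto each other.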