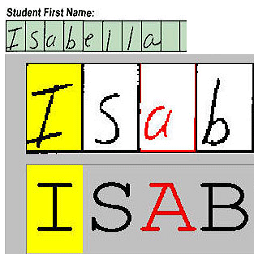

Degraded images are common among the usual sources of character images and lead to unsatisfactory character recognition results. Existing methods have been devoted to restoring degraded character images. However, the denoising results produced by these methods do not appear to improve character recognition performance. This is mainly because current methods focus only on pixel-level information and ignore critical features of a character, such as its glyph, so the glyph is damaged during denoising. In this paper, we introduce CharFormer, a novel generic framework based on glyph fusion and attention mechanisms, for precisely recovering character images without changing their inherent glyphs. Unlike existing frameworks, CharFormer introduces a parallel target task that captures additional information and injects it into the image denoising backbone, which maintains the consistency of character glyphs during character image denoising. Moreover, we use attention-based networks for global-local feature interaction, which helps with blind denoising and enhances denoising performance. We compare CharFormer with state-of-the-art methods on multiple datasets. The experimental results show the superiority of CharFormer over these methods, both quantitatively and qualitatively.
